I am trying to understand locality levels on a Spark cluster and how they relate to the number of partitions of an RDD and the action performed on it. Specifically, I have a DataFrame with 9647 partitions. I ran df.count on it and observed the following in the Spark UI:

A bit of context: I submitted my job to a YARN cluster with the following configuration:

- executor_memory='10g',

- driver_memory='10g',

- num_executors='5',

- executor_cores='5'

Also, I noticed that the executors were all running on 5 different nodes (hosts).
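For reference, here is a rough sketch of what this configuration implies for parallelism. It assumes the settings above map to the standard Spark properties shown in the dictionary and that each task uses one core (the default `spark.task.cpus=1`); the variable names are only for illustration.

```python
import math

# Settings from the list above, keyed by the Spark property names
# I assume they correspond to.
submit_conf = {
    "spark.executor.memory": "10g",
    "spark.driver.memory": "10g",
    "spark.executor.instances": "5",
    "spark.executor.cores": "5",
}

num_partitions = 9647  # partition count of the DataFrame

# Assumption: one core per task, so each executor core is one task slot.
task_slots = (
    int(submit_conf["spark.executor.instances"])
    * int(submit_conf["spark.executor.cores"])
)

# Minimum number of scheduling rounds if every slot stays busy.
waves = math.ceil(num_partitions / task_slots)

print(f"concurrent task slots: {task_slots}")
print(f"minimum scheduling waves: {waves}")
```

So at most 25 tasks run at once, spread over 5 hosts, and the count needs several hundred rounds of scheduling to cover all partitions.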

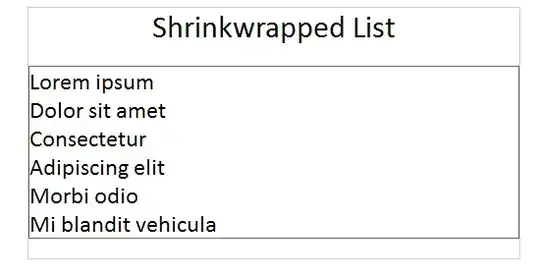

From the figure, I found that more than 95% of the 9644 tasks were not run on the same node as their data. So I am wondering why there are so many RACK_LOCAL tasks. Specifically, why doesn't each task run on the node closest to its data source? In other words, why aren't more tasks NODE_LOCAL?

Thank you