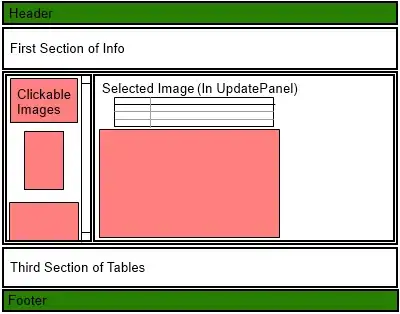

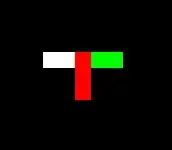

I'm using OpenCV's warpAffine function for some image processing. Oddly, after applying a warpAffine followed by the inverse warpAffine, the result is inconsistent with the original image: there is a one-pixel border of padding at the bottom.

    import cv2
    import numpy as np
    import matplotlib.pyplot as plt

    img_path = '140028_199844.jpg'
    img = cv2.imread(img_path, cv2.IMREAD_COLOR)
    plt.imshow(img[:, :, ::-1])  # BGR -> RGB for display

    h, w, _ = img.shape  # h=220 w=173

    # Three point correspondences defining the forward transform and its inverse
    src = np.array([[86., 109.5], [86., 0.], [-23.5, 0.]])
    dst = np.array([[192., 192.], [192., 0.], [0., 0.]])
    trans = cv2.getAffineTransform(np.float32(src), np.float32(dst))
    inv_trans = cv2.getAffineTransform(np.float32(dst), np.float32(src))

    # Forward warp into a 384x384 canvas (named `warped` to avoid shadowing the built-in `input`)
    warped = cv2.warpAffine(
        img,
        trans,
        (384, 384),
        flags=cv2.INTER_LINEAR,
        borderMode=cv2.BORDER_CONSTANT,
        borderValue=(0, 0, 0))
    plt.imshow(warped[:, :, ::-1])

    # Inverse warp back to the original size
    output = cv2.warpAffine(
        warped,
        inv_trans,
        (w, h),
        flags=cv2.INTER_LINEAR,
        borderMode=cv2.BORDER_CONSTANT,
        borderValue=(0, 0, 0))
    plt.imshow(output[:, :, ::-1])
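
To narrow this down, here is a rough sanity check that needs no OpenCV: it solves for the 2x3 matrix implied by the three point pairs above and maps the corner pixels of the 173x220 image into the 384x384 canvas. The helper names (`solve3`, `affine_from_points`, `apply_affine`) are made up for this sketch and are not OpenCV functions. It assumes that integer coordinates refer to pixel centers, so the valid rows of the canvas would be 0 to 383. Under that assumption the last row (y = 219) should land at approximately y = 384, just outside the canvas, which might be related to the border at the bottom.

```python
# Hypothetical helpers for illustration only; not part of OpenCV.

def solve3(a, b):
    """Solve the 3x3 linear system a * x = b using Cramer's rule."""
    def det(m):
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))
    d = det(a)
    solution = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]  # replace one column with the right-hand side
        solution.append(det(m) / d)
    return solution

def affine_from_points(src, dst):
    """Return the 2x3 affine matrix mapping three src points onto three dst points."""
    a = [[x, y, 1.0] for x, y in src]
    row_x = solve3(a, [p[0] for p in dst])
    row_y = solve3(a, [p[1] for p in dst])
    return [row_x, row_y]

def apply_affine(m, x, y):
    """Apply a 2x3 affine matrix to the point (x, y)."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])

# Same values as in the question
src = [(86.0, 109.5), (86.0, 0.0), (-23.5, 0.0)]
dst = [(192.0, 192.0), (192.0, 0.0), (0.0, 0.0)]
h, w = 220, 173

trans = affine_from_points(src, dst)

# Centers of the first and last pixels of the original image
top_left = apply_affine(trans, 0, 0)
bottom_right = apply_affine(trans, w - 1, h - 1)

print("top-left pixel maps to:", top_left)
print("bottom-right pixel maps to:", bottom_right)
print("last valid row of the 384x384 canvas (assumed): 383")
```

If this arithmetic is right, the center point (86, 109.5) is being mapped to (192, 192), whereas the center of a 384-pixel axis in pixel-center coordinates would be 191.5.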

So what is the possible reason for this problem?