Here's a go at it...

runsByN: For each number, show whether it appears or not in each sublist

list= {{4, 2, 7, 5, 1, 9, 10}, {10, 1, 8, 3, 2, 7}, {9, 2, 7, 3, 6, 4, 5}, {10, 3, 6, 4, 8, 7}, {7}, {3, 1, 8, 2, 4, 7, 10, 6}, {7, 6}, {10, 2, 8, 5, 6, 9, 7, 3}, {1, 4, 8}, {5, 6, 1}, {3, 2, 1}, {10,6, 4}, {10, 7, 3}, {10, 2, 4}, {1, 3, 5, 9, 7, 4, 2, 8}, {7, 1, 3}, {5, 7, 1, 10, 2, 3, 6, 8}, {10, 8, 3, 6, 9, 4, 5, 7}, {3, 10, 5}, {1}, {7, 9, 1, 6, 2, 4}, {9, 7, 6, 2}, {5, 6, 9, 7}, {1, 5}, {1,9, 7, 5, 4}, {5, 4, 9, 3, 1, 7, 6, 8}, {6}, {10}, {6}, {7, 9}};

runsByN = Transpose[Table[If[MemberQ[#, n], n, 0], {n, Max[list]}] & /@ list]

(* Out= {{1, 1, 0, 0, 0, 1, 0, 0, 1, 1, 1, 0, 0, 0, 1, 1, 1, 0, 0, 1, 1, 0, 0,1, 1, 1, 0, 0, 0, 0}, {2, 2, 2, 0, 0, 2, 0, 2, 0, 0, 2, 0, 0, 2, 2,0, 2, 0, 0, 0, 2, 2, 0, 0, 0, 0, 0, 0, 0, 0}, {0, 3, 3, 3, 0, 3, 0,3, 0, 0, 3, 0, 3, 0, 3, 3, 3, 3, 3, 0, 0, 0, 0, 0, 0, 3, 0, 0, 0,0}, {4, 0, 4, 4, 0, 4, 0, 0, 4, 0, 0, 4, 0, 4, 4, 0, 0, 4, 0, 0, 4, 0, 0, 0, 4, 4, 0, 0, 0, 0}, {5, 0, 5, 0, 0, 0, 0, 5, 0, 5, 0, 0, 0, 0, 5, 0, 5, 5, 5, 0, 0, 0, 5, 5, 5, 5, 0, 0, 0, 0}, {0, 0, 6, 6, 0, 6, 6, 6, 0, 6, 0, 6, 0, 0, 0, 0, 6, 6, 0, 0, 6, 6, 6, 0, 0, 6, 6, 0,6, 0}, {7, 7, 7, 7, 7, 7, 7, 7, 0, 0, 0, 0, 7, 0, 7, 7, 7, 7, 0, 0, 7, 7, 7, 0, 7, 7, 0, 0, 0, 7}, {0, 8, 0, 8, 0, 8, 0, 8, 8, 0, 0, 0, 0, 0, 8, 0, 8, 8, 0, 0, 0, 0, 0, 0, 0, 8, 0, 0, 0, 0}, {9, 0, 9, 0, 0, 0, 0, 9, 0, 0, 0, 0, 0, 0, 9, 0, 0, 9, 0, 0, 9, 9, 9, 0, 9, 9, 0, 0, 0, 9}, {10, 10, 0, 10, 0, 10, 0, 10, 0, 0, 0, 10, 10, 10, 0, 0, 10, 10, 10, 0, 0, 0, 0, 0, 0, 0, 0, 10, 0, 0}} *)

runsByN is list transposed, with zeros inserted to represent missing numbers. Row n has one entry per sublist: n where the number n appears in that sublist, and 0 where it does not. Here the rows cover the numbers 1 through 10.
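To make the layout concrete, here is a minimal sketch of the same construction on a toy input. The names small and smallRuns are hypothetical and appear only in this illustration.

```mathematica
(* Hypothetical toy input, not part of the original data *)
small = {{1, 2}, {2, 3}, {3}};

(* Same construction as runsByN: one row per number, one column per sublist *)
smallRuns = Transpose[Table[If[MemberQ[#, n], n, 0], {n, Max[small]}] & /@ small]

(* Out= {{1, 0, 0}, {2, 2, 0}, {0, 3, 3}} *)
```

Reading row 2, the number 2 appears in the first two sublists but not in the third.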

myPick: Picking numbers that constitute an optimal path

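myPick recursively builds a list of the longest runs. At each step it takes the first number with the longest unbroken run starting at the current sublist, then continues from the sublist where that run ends. It doesn't look for all optimal solutions; it returns only the first solution of minimal length that it finds.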

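(* Base case: nothing left to cover, so return the accumulated picks *)
myPick[{}, c_] := Flatten[c]

(* r holds, for each number, the length of its run before the first 0 *)
myPick[l_, c_: {}] :=
 Module[{r = Length /@ (l /. {x___, 0, ___} :> {x}), m},
  m = Max[r];
  (* Drop the m covered sublists and record the chosen number m times *)
  myPick[Cases[(Drop[#, m]) & /@ l, Except[{}]],
   Append[c, Table[Position[r, m, 1, 1][[1, 1]], {m}]]]]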

choices = myPick[runsByN]

(* Out= {7, 7, 7, 7, 7, 7, 7, 7, 1, 1, 1, 10, 10, 10, 3, 3, 3, 3, 3, 1, 1, 6, 6, 1, 1, 1, 6, 10, 6, 7} *)
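A result like this can be sanity-checked in two ways: every pick must be a member of the corresponding sublist, and the number of switches is one less than the number of runs. The sketch below does this on a hypothetical toy input; small, smallChoices, valid, and switches are names assumed for the illustration, and smallChoices is a hand-written path rather than captured output.

```mathematica
(* Hypothetical toy input and a hand-written path through it *)
small = {{1, 2}, {2, 3}, {3}};
smallChoices = {2, 2, 3};

(* Each pick must occur in the sublist at the same position *)
valid = And @@ MapThread[MemberQ, {small, smallChoices}]
(* Out= True *)

(* Number of changes from one number to another along the path *)
switches = Length[Split[smallChoices]] - 1
(* Out= 1 *)
```

The same two expressions should work unchanged with list and choices in place of the toy names.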

Thanks to Mr.Wizard for suggesting the use of a replacement rule as an efficient alternative to TakeWhile.
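For comparison, here is a small sketch showing that the replacement rule and TakeWhile measure the same leading run lengths. The rows are made-up sample data, and rows, viaRule, and viaTakeWhile are hypothetical names used only here.

```mathematica
(* Made-up sample rows in the same shape as runsByN *)
rows = {{7, 7, 0, 7}, {0, 3, 3, 3}, {1, 1, 1, 0}, {4, 4, 4, 4}};

(* Rule-based version, as used in myPick: keep what precedes the first 0 *)
viaRule = Length /@ (rows /. {x___, 0, ___} :> {x})
(* Out= {2, 0, 3, 4} *)

(* TakeWhile version: keep leading elements while they are nonzero *)
viaTakeWhile = Function[row, Length[TakeWhile[row, # != 0 &]]] /@ rows
(* Out= {2, 0, 3, 4} *)
```

A row without any zero is left untouched by the rule, so its full length is reported in both versions.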

Epilog: Visualizing the solution path

runsPlot[choices1_, runsN_] :=
 Module[{runs = {First[#], Length[#]} & /@ Split[choices1], myArrow,
   m = Max[runsN]},
  (* runs is a list of {number, run length} pairs; myArrow turns it into
     arrows joining the end of each run to the start of the next *)
  myArrow[runs1_] :=
   Module[{data1 = Reverse@First[runs1], data2 = Reverse[runs1[[2]]],
     deltaX},
    deltaX := data2[[1]] - 1;
    (* myA walks the remaining runs and appends one arrow per switch *)
    myA[{}, _, out_] := out;
    myA[inL_, deltaX_, outL_] :=
     Module[{data3 = outL[[-1, 1, 2]]},
      myA[Drop[inL, 1], inL[[1, 2]] - 1,
       Append[outL, Arrow[{{First[data3] + deltaX,
           data3[[2]]}, {First[data3] + deltaX + 1, inL[[1, 1]]}}]]]];
    myA[Drop[runs1, 2], deltaX, {Thickness[.005],
      Arrow[{data1, {First[data1] + 1, data2[[2]]}}]}]];
  ListPlot[runsN,
   Epilog -> myArrow[runs],
   PlotStyle -> PointSize[Large],
   Frame -> True,
   PlotRange -> {{1, Length[choices1]}, {1, m}},
   FrameTicks -> {All, Range[m]},
   PlotRangePadding -> .5,
   FrameLabel -> {"Sublist", "Number", "Sublist", "Number"},
   (* Vertical grid lines at the sublist index where each run ends *)
   GridLines :> {FoldList[Plus, 0, Length /@ Split[choices1]], None}
   ]];

runsPlot[choices, runsByN]

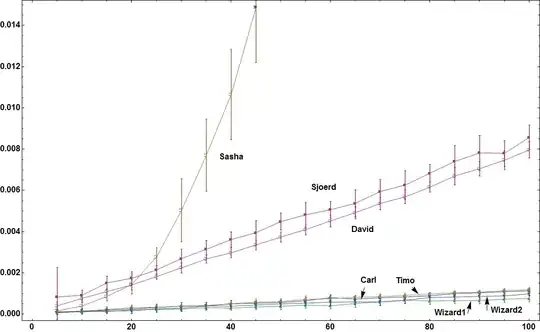

The chart represents the data from list.

Each plotted point corresponds to a number and the sublist in which it occurred. The arrows mark the switches from one run to the next along the path chosen by myPick.
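The run summary and the grid-line positions that runsPlot derives from the path can also be inspected on their own. This sketch reuses the two expressions from runsPlot on the choices output shown earlier; the variable names runs and boundaries are introduced here only for display.

```mathematica
(* The path returned by myPick, copied from the output above *)
choices = {7, 7, 7, 7, 7, 7, 7, 7, 1, 1, 1, 10, 10, 10, 3, 3, 3, 3, 3,
   1, 1, 6, 6, 1, 1, 1, 6, 10, 6, 7};

(* {number, run length} pairs, as computed at the top of runsPlot *)
runs = {First[#], Length[#]} & /@ Split[choices]
(* Out= {{7, 8}, {1, 3}, {10, 3}, {3, 5}, {1, 2}, {6, 2}, {1, 3}, {6, 1},
   {10, 1}, {6, 1}, {7, 1}} *)

(* Sublist indices at which runs end; these are the vertical grid lines *)
boundaries = FoldList[Plus, 0, Length /@ Split[choices]]
(* Out= {0, 8, 11, 14, 19, 21, 23, 26, 27, 28, 29, 30} *)
```

The path therefore consists of 11 runs, which means 10 switches across the 30 sublists.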