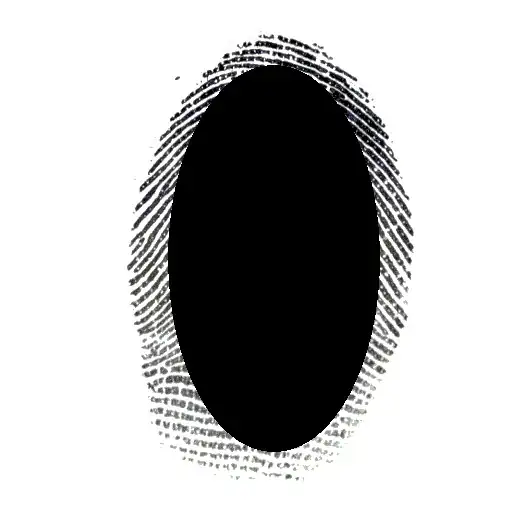

Here's an approach based on the assumption that the majority of the text is skewed onto one side. The idea is that we can determine the angle based on the where the major text region is located

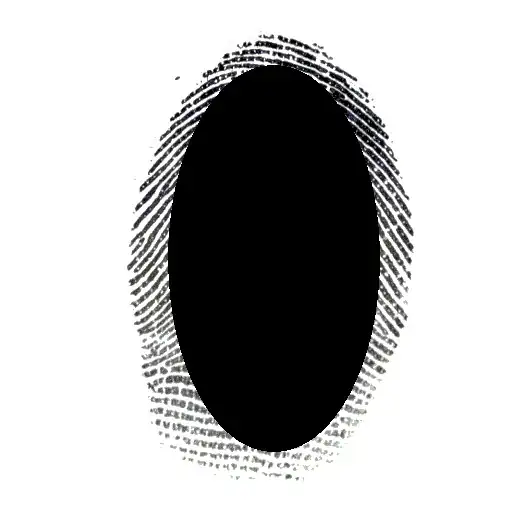

After converting to grayscale and Gaussian blurring, we adaptive threshold to obtain a binary image

From here we find contours and filter using contour area to remove the small noise particles and the large border. We draw any contours that pass this filter onto a mask

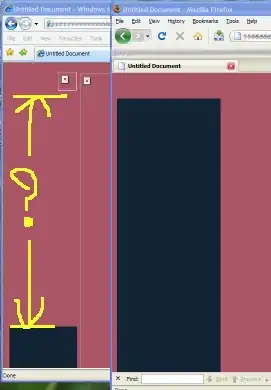

To determine the angle, we split the image in half based on the image's dimension. If width > height then it must be a horizontal image so we split in half vertically. if height > width then it must be a vertical image so we split in half horizontally

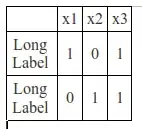

Now that we have two halves, we can use cv2.countNonZero() to determine the amount of white pixels on each half. Here's the logic to determine angle:

if horizontal

if left >= right

degree -> 0

else

degree -> 180

if vertical

if top >= bottom

degree -> 270

else

degree -> 90

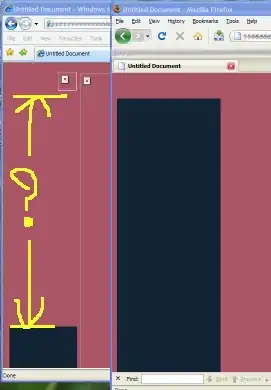

left 9703

right 3975

Therefore the image is 0 degrees. Here's the results from other orientations

left 3975

right 9703

We can conclude that the image is flipped 180 degrees

Here's results for vertical image. Note since its a vertical image, we split horizontally

top 3947

bottom 9550

Therefore the result is 90 degrees

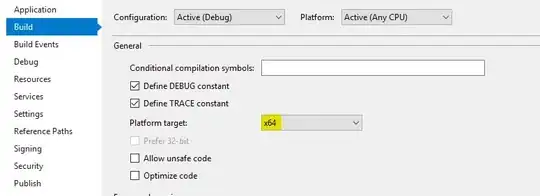

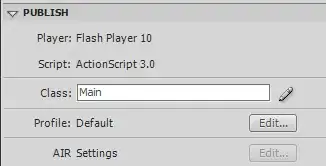

import cv2

import numpy as np

def detect_angle(image):

mask = np.zeros(image.shape, dtype=np.uint8)

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

blur = cv2.GaussianBlur(gray, (3,3), 0)

adaptive = cv2.adaptiveThreshold(blur,255,cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV,15,4)

cnts = cv2.findContours(adaptive, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

cnts = cnts[0] if len(cnts) == 2 else cnts[1]

for c in cnts:

area = cv2.contourArea(c)

if area < 45000 and area > 20:

cv2.drawContours(mask, [c], -1, (255,255,255), -1)

mask = cv2.cvtColor(mask, cv2.COLOR_BGR2GRAY)

h, w = mask.shape

# Horizontal

if w > h:

left = mask[0:h, 0:0+w//2]

right = mask[0:h, w//2:]

left_pixels = cv2.countNonZero(left)

right_pixels = cv2.countNonZero(right)

return 0 if left_pixels >= right_pixels else 180

# Vertical

else:

top = mask[0:h//2, 0:w]

bottom = mask[h//2:, 0:w]

top_pixels = cv2.countNonZero(top)

bottom_pixels = cv2.countNonZero(bottom)

return 90 if bottom_pixels >= top_pixels else 270

if __name__ == '__main__':

image = cv2.imread('1.png')

angle = detect_angle(image)

print(angle)