This is a two-part question. I am testing Google App Engine with instance class F4 (1024 MB memory, 2.4 GHz CPU) and automatic scaling. This is the configuration file shown in App Engine:

    runtime: nodejs10
    env: standard
    instance_class: F4
    handlers:
      - url: .*
        script: auto
    automatic_scaling:
      min_idle_instances: automatic
      max_idle_instances: automatic
      min_pending_latency: automatic
      max_pending_latency: automatic
    network: {}

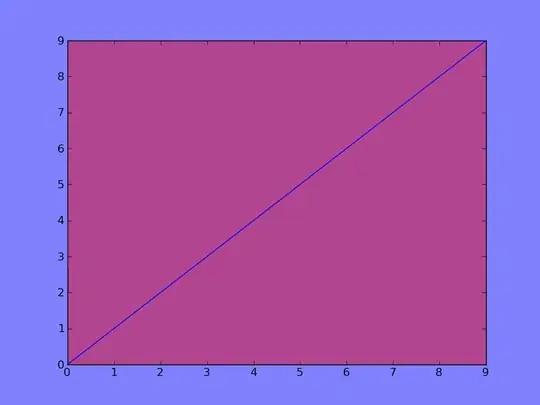

My Node.js server has ordinary POST and GET routes that do no heavy lifting beyond serving static files and fetching data from MongoDB. This is the memory usage graph while using these routes:

Fig 1. Graph showing memory usage as seen on Google Stackdriver while navigating between these non-heavy routes


My first question is: why does it need so much RAM (around 500 MB) for such small tasks?

I am profiling on my Windows 10 machine with the Chrome DevTools Node.js memory profiler, and it shows nowhere near these numbers: heap snapshots come to around 52 MB.
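For comparison with the heap snapshots, here is a minimal sketch of logging the figures Node.js itself reports for the whole process. `process.memoryUsage()` is a standard Node.js API; the helper name `memorySnapshotMB` is made up for illustration, and it is only an assumption that the Stackdriver graph tracks something closer to `rss` (resident memory) than to `heapUsed`.

```javascript
// Report the main memory figures Node.js exposes for the current process, in MB.
// rss       : resident set size of the whole process
// heapTotal : memory reserved for the V8 heap
// heapUsed  : memory actually used by JavaScript objects on the V8 heap
// external  : memory used by C++ objects bound to JavaScript objects
function memorySnapshotMB() {
  const toMB = (bytes) => Math.round((bytes / 1024 / 1024) * 10) / 10;
  const { rss, heapTotal, heapUsed, external } = process.memoryUsage();
  return {
    rss: toMB(rss),
    heapTotal: toMB(heapTotal),
    heapUsed: toMB(heapUsed),
    external: toMB(external),
  };
}

console.log(memorySnapshotMB());
```

Logging this before and after a request, both locally and on the instance, would show whether the gap is in the V8 heap or elsewhere in the process.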

My second question is about a specific route that takes an uploaded file and resizes it into 3 different images using sharp.

Why is memory not freed when the resizing is complete, even though it works as expected on my Windows 10 machine?

I am running the resizing test on a 10 MB image, which is converted into a buffer and held in memory. The conversion code is as follows:

    const resizeAndSave = (buffer, filePath, widthResize, transform) => {
      const { width, height, x, y } = transform;

      // Create the sharp instance
      const image = sharp(buffer);

      // Prepare to upload to Cloud Storage
      const file = publicBucket.file(filePath);

      return new Promise((resolve, reject) => {
        image
          .metadata()
          .then(metadata => {
            // Do image operations
            const img = image
              .extract({
                width: isEmpty(transform) ? metadata.width : parseInt(width),
                height: isEmpty(transform) ? metadata.height : parseInt(height),
                left: isEmpty(transform) ? 0 : parseInt(x),
                top: isEmpty(transform) ? 0 : parseInt(y)
              })
              .resize(
                metadata.width > widthResize
                  ? {
                      width: widthResize || metadata.width,
                      withoutEnlargement: true
                    }
                  : metadata.height > widthResize
                  ? {
                      height: widthResize || metadata.height,
                      withoutEnlargement: true
                    }
                  : {
                      width: widthResize || metadata.width,
                      withoutEnlargement: true
                    }
              )
              .jpeg({
                quality: 40
              });

            // Pipe to Cloud Storage and resolve with the file path when done
            img
              .pipe(file.createWriteStream({ gzip: true }))
              .on("error", function(err) {
                reject(err);
              })
              .on("finish", function() {
                // The file upload is complete.
                resolve(filePath);
              });
          })
          .catch(err => {
            reject(err);
          });
      });
    };

This function is called three times in series for each image. For the sake of the test, Promise.all was deliberately not used, so that the calls do not run in parallel:

    async function createAvatar(identifier, buffer, transform, done) {
      const dir = `uploads/${identifier}/avatar`;
      const imageId = nanoid_(20);

      // Each resize is awaited before the next one starts
      const thumbName = `thumb_${imageId}.jpeg`;
      await resizeAndSave(buffer, `${dir}/${thumbName}`, 100, transform);

      const mediumName = `medium_${imageId}.jpeg`;
      await resizeAndSave(buffer, `${dir}/${mediumName}`, 400, transform);

      const originalName = `original_${imageId}.jpeg`;
      await resizeAndSave(buffer, `${dir}/${originalName}`, 1080, {});

      done(null, {
        thumbUrl: `https://bucket.storage.googleapis.com/${dir}/${thumbName}`,
        mediumUrl: `https://bucket.storage.googleapis.com/${dir}/${mediumName}`,
        originalUrl: `https://bucket.storage.googleapis.com/${dir}/${originalName}`
      });

      /* Promise.all([thumb])
        .then(values => {
          done(null, {
            thumbUrl: `https://bucket.storage.googleapis.com/${dir}/${thumbName}`,
            mediumUrl: `https://bucket.storage.googleapis.com/${dir}/${mediumName}`,
            originalUrl: `https://bucket.storage.googleapis.com/${dir}/${originalName}`
          });
        })
        .catch(err => {
          done(err, null);
        }); */
    }
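To illustrate the difference between awaiting the calls in series and using Promise.all, here is a small self-contained sketch. The `task` function is a hypothetical stand-in for `resizeAndSave` (it only waits on a timer), and `makeTracker`, `runInSeries`, and `runInParallel` are names invented for this example. It counts how many tasks are in flight at once, which is a rough proxy for how many images would be held in memory at the same time.

```javascript
// Hypothetical stand-in for resizeAndSave: resolves after a short delay and
// records how many tasks were in flight at the same time.
function makeTracker() {
  let active = 0;
  let peak = 0;
  const task = async (label) => {
    active += 1;
    peak = Math.max(peak, active);
    await new Promise((resolve) => setTimeout(resolve, 10));
    active -= 1;
    return label;
  };
  return { task, getPeak: () => peak };
}

// Await each task before starting the next one.
async function runInSeries(labels) {
  const { task, getPeak } = makeTracker();
  const results = [];
  for (const label of labels) {
    results.push(await task(label));
  }
  return { results, peak: getPeak() };
}

// Start every task immediately and wait for all of them together.
async function runInParallel(labels) {
  const { task, getPeak } = makeTracker();
  const results = await Promise.all(labels.map((label) => task(label)));
  return { results, peak: getPeak() };
}
```

With three labels, the series version never has more than one task active, while the Promise.all version has all three active at once.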

The heap snapshots when running the server on my Windows 10 machine are:

Fig 2. Heap snapshots from Chrome DevTools for Node.js when navigating to the image resizing route once

These heap snapshots show that the memory used to hold the 10 MB image and resize it is being returned to the OS on my machine.
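One caveat worth checking: in Node.js, the contents of a Buffer are allocated outside the V8 heap, so `heapUsed` alone may not reflect where a 10 MB upload is held. The sketch below uses only `Buffer.alloc` and `process.memoryUsage()`; the function name `measureBufferAllocation` is invented for illustration, and it says nothing about what a native image library allocates internally.

```javascript
const TEN_MB = 10 * 1024 * 1024;

// Measure how allocating a Buffer changes heapUsed versus external memory.
// The buffer is returned so the caller keeps it alive while inspecting the result.
function measureBufferAllocation(size) {
  const before = process.memoryUsage();
  const buffer = Buffer.alloc(size); // contents are stored outside the V8 heap
  const after = process.memoryUsage();
  return {
    buffer,
    heapUsedDelta: after.heapUsed - before.heapUsed,
    externalDelta: after.external - before.external,
  };
}

const measurement = measureBufferAllocation(TEN_MB);
console.log({
  heapUsedDelta: measurement.heapUsedDelta,
  externalDelta: measurement.externalDelta,
});
```

If `externalDelta` grows by roughly the buffer size while `heapUsedDelta` stays small, the image data is being counted under `external` rather than under the heap.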

The memory usage reported on StackDriver is:

Fig 3. Memory usage on Google Stackdriver when navigating to the image resizing route once


This shows that the memory is not freed when the operation is done, and that usage is very high: starting at around 8 pm, it goes up to 800 MB and never drops.

I have also tried the Stackdriver Profiler. It does not show any high memory usage; in fact, it shows around 55 MB, which is close to what I see on my Windows machine:

Fig 4. Stackdriver Profiler heap snapshot


So, if my analysis is correct, I assume this has something to do with the OS that the App Engine instances run on? I have no clue.

Update: this is the latest memory usage obtained from Stackdriver after using the image processing route and not touching the app for an hour:

Fig 5. Memory usage while leaving the app idle for an hour after navigating to the image resize route


Update 2: Following Travis's suggestion, I looked at the process while navigating to the route and found that its memory usage is slightly higher than the heap but nowhere near what App Engine is showing:

Fig 6. Windows 10 Node.js process memory while image processing is running


Update 3: The number of instances in use during the same time interval as figure 5 (while memory usage is high):

Fig 7. Number of instances used at the same time interval as figure 5


Update 4: I tried switching to instance class F1 (256 MB, 600 MHz) to see what happens. The results show reduced RAM usage when idle, but App Engine shows a warning recommending a RAM upgrade when I process the 10 MB image. (It shows 2 instances currently running.)

Fig 8. F1 instance class with 256 MB RAM when the app is idle


This leads me to think that the instances try to occupy most of the available RAM anyway.
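One way to test this hunch would be to log, on each instance class, the memory the process believes is available next to V8's own heap limit. This is only a sketch: `os.totalmem()`, `os.freemem()`, and `v8.getHeapStatistics()` are standard Node.js APIs, but the helper name `memoryLimitsMB` is invented here, and inside a sandboxed or containerized environment `os.totalmem()` may not match the memory limit of the instance class.

```javascript
const os = require('os');
const v8 = require('v8');

// Collect system memory figures and V8 heap limits for the current process, in MB.
function memoryLimitsMB() {
  const toMB = (bytes) => Math.round(bytes / 1024 / 1024);
  const heap = v8.getHeapStatistics();
  return {
    totalSystemMB: toMB(os.totalmem()),       // memory the OS reports as installed
    freeSystemMB: toMB(os.freemem()),         // memory the OS reports as free
    heapSizeLimitMB: toMB(heap.heap_size_limit), // upper bound V8 allows for its heap
    totalHeapMB: toMB(heap.total_heap_size),  // heap currently reserved by V8
    usedHeapMB: toMB(heap.used_heap_size),    // heap currently in use
  };
}

console.log(memoryLimitsMB());
```

Comparing this output between F1 and F4 would show whether the heap limit or the reported system memory changes with the instance class.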