I have a fairly large DataFrame (about 600 index entries) and want to use filter criteria to produce a reduced DataFrame that contains only the entries where the criteria are true. From the research I've done, filtering works well when you apply expressions to the data and already know which index entries you're operating on. What I want to do, however, is apply the filter criteria to the index itself. See the example below.

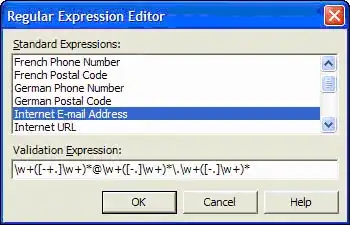

The MultiIndex labels are shown in bold, and the MultiIndex level names are shown in italics.

I'd like to apply the criteria along these lines:

```python
df = df[MultiIndex.query('base == 115 & Al.isin(stn)')]
```
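For comparison, the row condition above can already be expressed with existing pandas calls: pull each level out with `MultiIndex.get_level_values` and combine the resulting boolean arrays with `&`, just as with column masks. This is a minimal sketch on made-up data, assuming the row levels are named `base` and `stn` and that `Al.isin(stn)` is meant as "keep rows whose `stn` label is `'Al'`":

```python
import pandas as pd

# Hypothetical stand-in for the real data: a two-level row MultiIndex
# with levels "base" and "stn" (names assumed from the query string above).
rows = pd.MultiIndex.from_tuples(
    [(115, "Al"), (115, "Bo"), (230, "Al"), (115, "Cy")],
    names=["base", "stn"],
)
df = pd.DataFrame({"value": [1.0, 2.0, 3.0, 4.0]}, index=rows)

# One boolean array per condition, taken from the index levels themselves,
# then combined element-wise with &.
mask = (df.index.get_level_values("base") == 115) & (
    df.index.get_level_values("stn").isin(["Al"])
)

# A plain boolean array selects rows positionally.
filtered = df[mask]
print(filtered)
```

Because `get_level_values` returns an `Index` rather than building a new DataFrame, this avoids any conversion step.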

Then maybe do something like this:

```python
df = df.transpose()[MultiIndex.query('Fault.isin(cont)')].transpose()
```

To result in:

Fundamentally, I think I'm trying to produce a boolean mask for the MultiIndex. A quick way to apply a pandas query to a 2D list would also be acceptable. Right now, one option seems to be converting the MultiIndex to a DataFrame, applying whatever filtering I want to get the True/False array, and using that array as the mask. I'm concerned that this will be slow, though.
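The conversion idea can be sketched with `MultiIndex.to_frame(index=False)`, which turns each level into an ordinary column, followed by `DataFrame.eval`, which accepts the same expression syntax as `query` (including `@` for local variables and `in`) but returns a True/False Series instead of a filtered frame. The same trick works on the column index, and `df.loc[rows, cols]` takes boolean arrays for both axes, so the transpose round-trip is not needed. This is a sketch on made-up data; the level names `base`, `stn`, `cont` and the `stations` list are assumptions:

```python
import pandas as pd

# Hypothetical stand-in for the real data: row levels "base"/"stn" and a
# column level "cont" are assumptions based on the query strings above.
rows = pd.MultiIndex.from_tuples(
    [(115, "Al"), (115, "Bo"), (230, "Al"), (115, "Cy")],
    names=["base", "stn"],
)
cols = pd.MultiIndex.from_tuples(
    [("Fault", "A"), ("Normal", "A"), ("Fault", "B")],
    names=["cont", "case"],
)
df = pd.DataFrame(
    [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]], index=rows, columns=cols
)

stations = ["Al", "Cy"]  # hypothetical list of stations to keep

# Row levels become ordinary columns (one row per index entry); eval then
# produces a True/False Series, converted to a positional numpy array.
row_levels = df.index.to_frame(index=False)
row_mask = row_levels.eval("base == 115 and stn in @stations").to_numpy()

# Same idea for the column index.
col_levels = df.columns.to_frame(index=False)
col_mask = col_levels.eval("cont == 'Fault'").to_numpy()

# Boolean arrays on both axes: no transpose needed.
result = df.loc[row_mask, col_mask]
print(result)
```

The masks are converted with `.to_numpy()` because the Series returned by `eval` has a fresh `RangeIndex` (from `index=False`), which pandas would otherwise try to align against the DataFrame's MultiIndex. At a few hundred index entries, building the intermediate frame should be cheap, but timing it on the real data is the only way to be sure.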