This kind of question is recurring and should be answered more clearly than "MATLAB uses highly optimized libraries" or "MATLAB uses the MKL" for once on Stack Overflow.

History:

Matrix multiplication (together with matrix-vector multiplication, vector-vector multiplication, and many of the matrix decompositions) is among the most important problems in linear algebra. Engineers have been solving these problems with computers since the early days.

I'm not an expert on the history, but apparently back then everybody just wrote their own FORTRAN version with simple loops. Some standardization then came along, with the identification of "kernels" (basic routines) that most linear algebra problems needed in order to be solved. These basic operations were then standardized in a specification called Basic Linear Algebra Subprograms (BLAS). Engineers could then call these standard, well-tested BLAS routines in their code, making their work much easier.

BLAS:

BLAS evolved from level 1 (the first version, which defined scalar-vector and vector-vector operations) to level 2 (matrix-vector operations) to level 3 (matrix-matrix operations), providing more and more "kernels" and thereby standardizing more and more of the fundamental linear algebra operations. The original FORTRAN 77 implementations are still available on Netlib's website.
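To make the three levels concrete, here is a minimal sketch of one representative operation per level, written as plain loops. The function names `axpy`, `gemv` and `gemm` echo the BLAS routine families, but the signatures are simplified assumptions for illustration: the real routines take scaling factors, strides and transpose flags, and the reference implementation stores matrices column-major rather than row-major as assumed here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Level 1 (vector-vector): y = alpha * x + y.
void axpy(double alpha, const std::vector<double>& x, std::vector<double>& y) {
    assert(x.size() == y.size());
    for (std::size_t i = 0; i < x.size(); ++i)
        y[i] += alpha * x[i];
}

// Level 2 (matrix-vector): y = A * x, with A stored row-major as n rows of m columns.
void gemv(std::size_t n, std::size_t m, const std::vector<double>& A,
          const std::vector<double>& x, std::vector<double>& y) {
    assert(A.size() == n * m && x.size() == m && y.size() == n);
    for (std::size_t i = 0; i < n; ++i) {
        double sum = 0.0;
        for (std::size_t j = 0; j < m; ++j)
            sum += A[i * m + j] * x[j];
        y[i] = sum;
    }
}

// Level 3 (matrix-matrix): C = A * B for square n x n row-major matrices.
void gemm(std::size_t n, const std::vector<double>& A,
          const std::vector<double>& B, std::vector<double>& C) {
    assert(A.size() == n * n && B.size() == n * n && C.size() == n * n);
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t j = 0; j < n; ++j) {
            double sum = 0.0;
            for (std::size_t k = 0; k < n; ++k)
                sum += A[i * n + k] * B[k * n + j];
            C[i * n + j] = sum;
        }
}
```

Note how the amount of arithmetic per element loaded grows from level 1 to level 3, which is why level 3 routines benefit the most from careful cache use.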

Towards better performance:

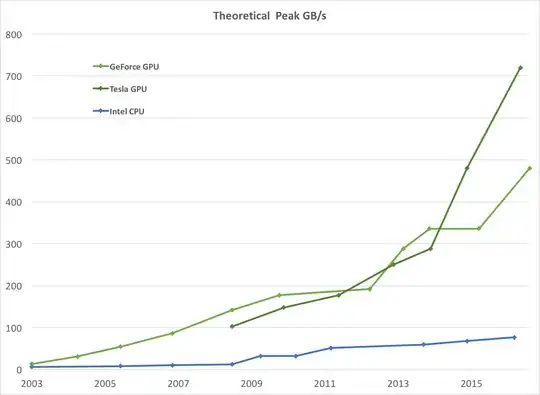

So over the years (notably between the BLAS level 1 and level 2 releases: early 80s), hardware changed, with the advent of vector operations and cache hierarchies. These evolutions made it possible to increase the performance of the BLAS subroutines substantially. Different vendors then came along with their own implementations of the BLAS routines, which became more and more efficient.

I don't know all the historical implementations (I was either not born yet or a kid back then), but two of the most notable ones came out in the early 2000s: the Intel MKL and GotoBLAS. Your Matlab uses the Intel MKL, which is a very good, optimized BLAS, and that explains the great performance you see.

Technical details on Matrix multiplication:

So why is Matlab (the MKL) so fast at dgemm (double-precision general matrix-matrix multiplication)? In simple terms: because it uses vectorization and good caching of data. In more complex terms: see the article provided by Jonathan Moore.

Basically, when you perform your multiplication in the C++ code you provided, you are not at all cache-friendly. I suspect you created an array of pointers to row arrays, in which case the accesses in your inner loop to the k-th column of "matice2", matice2[m][k], are very slow. Indeed, when you access matice2[0][k], you must get the k-th element of array 0 of your matrix. In the next iteration you must access matice2[1][k], which is the k-th element of another array (array 1). In the iteration after that you access yet another array, and so on. Since the entire matrix matice2 can't fit in the fastest caches (it's 8*1024*1024 bytes large), the program must fetch the desired element from main memory, losing a lot of time.

If you just transposed the matrix, so that accesses were to contiguous memory addresses, your code would already run much faster, because the processor loads memory into the cache a whole cache line at a time, so neighbouring elements of a row arrive together. Just try this modified version:

```cpp
timer.start();
float temp = 0;

// transpose matice2
for (int p = 0; p < rozmer; p++)
{
    for (int q = 0; q < rozmer; q++)
    {
        tempmat[p][q] = matice2[q][p];
    }
}

for (int j = 0; j < rozmer; j++)
{
    for (int k = 0; k < rozmer; k++)
    {
        temp = 0;
        for (int m = 0; m < rozmer; m++)
        {
            temp = temp + matice1[j][m] * tempmat[k][m];
        }
        matice3[j][k] = temp;
    }
}
timer.stop();
```
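If you want to try the transposition trick in isolation, here is a minimal self-contained sketch of both variants. The `Matrix` alias and the function names `multiply_naive` and `multiply_transposed` are hypothetical, and the vector-of-vectors layout is only my assumption about how your matrices are stored (one separately allocated array per row).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumed layout: one separately allocated array per row.
using Matrix = std::vector<std::vector<float>>;

// Baseline: the inner loop walks down a column of b,
// so every step touches a different row array.
Matrix multiply_naive(const Matrix& a, const Matrix& b) {
    const std::size_t n = a.size();
    assert(b.size() == n);
    Matrix c(n, std::vector<float>(n, 0.0f));
    for (std::size_t j = 0; j < n; ++j)
        for (std::size_t k = 0; k < n; ++k) {
            float temp = 0.0f;
            for (std::size_t m = 0; m < n; ++m)
                temp += a[j][m] * b[m][k];
            c[j][k] = temp;
        }
    return c;
}

// Same arithmetic, but b is transposed first so that the inner loop
// reads both operands along contiguous rows.
Matrix multiply_transposed(const Matrix& a, const Matrix& b) {
    const std::size_t n = a.size();
    assert(b.size() == n);
    Matrix bt(n, std::vector<float>(n, 0.0f));
    for (std::size_t p = 0; p < n; ++p)
        for (std::size_t q = 0; q < n; ++q)
            bt[p][q] = b[q][p];
    Matrix c(n, std::vector<float>(n, 0.0f));
    for (std::size_t j = 0; j < n; ++j)
        for (std::size_t k = 0; k < n; ++k) {
            float temp = 0.0f;
            for (std::size_t m = 0; m < n; ++m)
                temp += a[j][m] * bt[k][m];
            c[j][k] = temp;
        }
    return c;
}
```

Both functions add the products in the same order, so they should return identical results. Timing each of them on large matrices (for example with `std::chrono::steady_clock`) shows the effect of the access pattern on your own machine.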

So you can see how cache locality alone increased your code's performance quite substantially. Real dgemm implementations exploit this to a very extensive level: they perform the multiplication on blocks of the matrix whose size is defined by the TLB (translation lookaside buffer; long story short, what can effectively be cached), so that they stream to the processor exactly the amount of data it can process. The other aspect is vectorization: they use the processor's vectorized instructions for optimal instruction throughput, which you can't really do from your cross-platform C++ code.
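The blocking idea can be sketched in a few lines. This is only an illustration of loop tiling, not how the MKL or GotoBLAS are actually written: real kernels also pack the tiles into contiguous buffers and run hand-tuned vectorized inner loops. The function name `gemm_blocked` and the `block` parameter (with an arbitrary default of 64) are hypothetical, and the matrices are assumed square, row-major and stored in flat arrays.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Computes C += A * B for square n x n row-major matrices, one tile at a time.
// The caller is expected to zero C first if a plain product is wanted.
void gemm_blocked(std::size_t n, const std::vector<double>& A,
                  const std::vector<double>& B, std::vector<double>& C,
                  std::size_t block = 64) {
    assert(block > 0);
    assert(A.size() == n * n && B.size() == n * n && C.size() == n * n);
    for (std::size_t ii = 0; ii < n; ii += block) {
        const std::size_t i_end = std::min(ii + block, n);
        for (std::size_t kk = 0; kk < n; kk += block) {
            const std::size_t k_end = std::min(kk + block, n);
            for (std::size_t jj = 0; jj < n; jj += block) {
                const std::size_t j_end = std::min(jj + block, n);
                // Multiply one tile of A by one tile of B. The three tiles
                // involved are small enough to be reused while still cached.
                for (std::size_t i = ii; i < i_end; ++i)
                    for (std::size_t k = kk; k < k_end; ++k) {
                        const double a = A[i * n + k];
                        for (std::size_t j = jj; j < j_end; ++j)
                            C[i * n + j] += a * B[k * n + j];
                    }
            }
        }
    }
}
```

The best value of `block` depends on the cache sizes of the machine, so it is worth trying a few values rather than trusting the default.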

Finally, people claiming that it's because of Strassen's or the Coppersmith–Winograd algorithm are wrong: neither algorithm is practical for these libraries, because of the hardware considerations mentioned above.