I recently parallelised part of my MATLAB program, following the suggestions offered in Slow xlsread in MATLAB. However, the parallel version has exposed another problem: processing time grows non-linearly as the number of sheets increases.

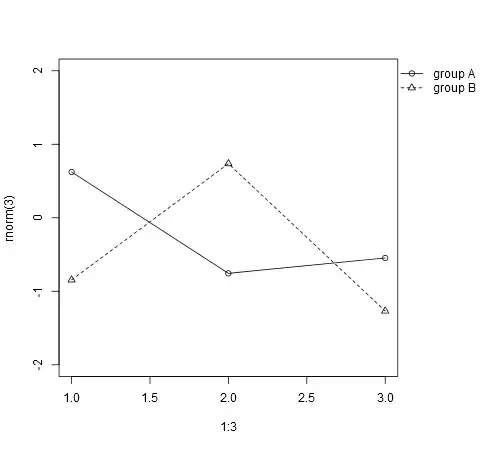

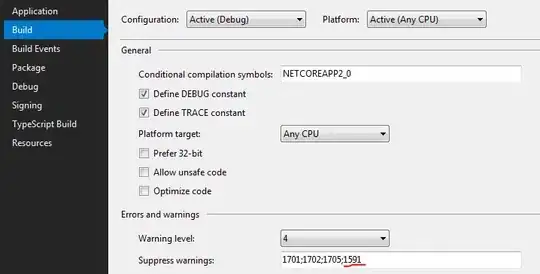

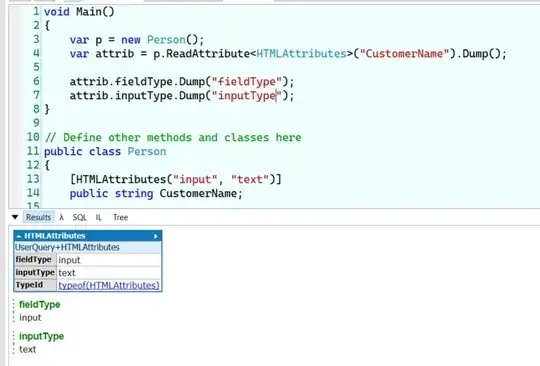

The culprit seems to be a java.util.concurrent.LinkedBlockingQueue call, as can be seen in the attached profiler screenshots and the corresponding condensed graphs.

Problem: How do I remove this non-linearity? My work involves processing more than 1000 sheets in a single run, which at this rate would take an insanely long time.

Note: The parallelised part of the program only reads all the sheets from the .xls file and stores them in matrices; the remainder of the program runs after that. dlmwrite is used towards the end of the program. Optimising its run time is not really required, although suggestions are welcome.

Code being parallelised:

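    parfor i = 1:runs
        % Build the sheet name 'Sheet<i>'. The prefix variable is named
        % sheetPrefix so that it does not shadow the built-in sin function.
        sheetPrefix = 'Sheet';
        sno = num2str(i);
        sna = strcat(sheetPrefix, sno);
        % Read sheet i in 'basic' mode and store it as slice i of data.
        data(i, :, :) = xlsread('Processes.xls', sna, '', 'basic');
    end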
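
To put numbers on the non-linearity, the read loop could be timed at a few different scales. The following is only a rough sketch: the `scales` values are assumed for illustration, `elapsed`, `perSheet` and `sheetName` are hypothetical names, and a cell array is used so that no sheet dimensions have to be assumed. The file name and sheet naming are taken from the code above.

```matlab
% Hypothetical timing harness. 'Processes.xls' and the 'Sheet<i>' naming
% come from the question; the scale values are assumed for illustration.
scales  = [50 100 200 400];          % assumed sheet counts to test
elapsed = zeros(size(scales));       % wall-clock seconds per scale

for k = 1:numel(scales)
    n = scales(k);
    data = cell(n, 1);               % cell array: no assumption on sheet size
    t = tic;
    parfor i = 1:n
        sheetName = sprintf('Sheet%d', i);
        data{i} = xlsread('Processes.xls', sheetName, '', 'basic');
    end
    elapsed(k) = toc(t);
end

% If scaling were linear, the time per sheet would stay roughly constant.
perSheet = elapsed ./ scales;
disp([scales(:), elapsed(:), perSheet(:)]);
```

A rising third column in the printed matrix would confirm that the cost per sheet itself grows with the number of sheets, rather than the total time simply growing in proportion.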