[Please don't forget to read EDIT 1, EDIT 2, EDIT 3, EDIT 4 and EDIT 5]

original question:

I am following https://outerra.blogspot.com/2013/07/logarithmic-depth-buffer-optimizations.html and https://outerra.blogspot.com/2012/11/maximizing-depth-buffer-range-and.html to enable a logarithmic depth buffer in OpenGL.

environment:

Windows 10, glad/GLFW, Assimp (for importing the satellite .obj model).

When using the normal depth buffer, everything renders correctly.

But when I tried to add a logarithmic depth buffer in the vertex and fragment shaders, everything went wrong.

The satellites appear to have only their frames left.

The near and far planes are defined as:

double NearPlane = 0.01;

double FarPlane = 5.0 * 1e8 * SceneScale;

with

#define SceneScale (0.001 * 0.001)

here is my vertex shader:

    #version 410 core

    layout (location = 0) in vec3 position;
    layout (location = 1) in vec3 aNormal;
    layout (location = 2) in vec2 aTexCoord;
    // layout (location = 3) in float near; // fixed in EDIT 3
    // layout (location = 4) in float far;

    uniform mat4 model;
    uniform mat4 view;
    uniform mat4 projection;
    uniform float near; // added in EDIT 3
    uniform float far; // added in EDIT 3

    layout (location = 0) out vec2 texCoord;
    layout (location = 1) out vec3 Normal;
    layout (location = 2) out vec3 FragPos;
    layout (location = 3) out float logz;

    void main()
    {
        texCoord = aTexCoord;
        gl_Position = projection * view * model * vec4(position, 1.0f);

        // log depth buffer
        float Fcoef = 2.0 / log2(far + 1.0);
        float flogz = 1.0 + gl_Position.w;
        gl_Position.z = log2(max(1e-6, flogz)) * Fcoef - 1.0;
        gl_Position.z *= gl_Position.w; // fixed in EDIT 2
        logz = log2(flogz) * Fcoef * 0.5;

        FragPos = vec3(model * vec4(position, 1.0));
        Normal = mat3(transpose(inverse(model))) * aNormal;
    }

Fragment shader:

    #version 410 core

    out vec4 color;

    layout (location = 0) in vec2 texCoord;
    layout (location = 1) in vec3 Normal;
    layout (location = 2) in vec3 FragPos;
    layout (location = 3) in float logz;

    uniform sampler2D ourTexture;
    uniform vec3 lightPosition;
    uniform vec3 lightColor;

    void main()
    {
        gl_FragDepth = logz; // log depth buffer

        float ambientStrength = 0.1;
        vec3 ambient = ambientStrength * lightColor;
        vec3 texColor = texture(ourTexture, texCoord).rgb;
        vec3 norm = normalize(Normal);
        vec3 lightDir = normalize(lightPosition - FragPos);
        float diff = max(dot(norm, lightDir), 0.0);
        vec3 diffuse = diff * lightColor;
        vec3 result = (ambient + diffuse) * texColor;
        color = vec4(result, 1.0f);
    }
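
For debugging, the depth mapping used in the two shaders can be mirrored on the CPU and checked against known distances. The following is only a sketch: the function names `logDepthNdcZ` and `logDepthFrag` are hypothetical, `w` stands for `gl_Position.w` before the log-depth lines run, and doubles are used here although the shaders work in 32-bit floats.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// NDC z produced by the vertex shader lines above, i.e. gl_Position.z after
// the perspective divide. w is the clip-space w (eye distance along the view
// direction for a standard perspective projection).
double logDepthNdcZ(double w, double farPlane) {
    assert(farPlane > 0.0);
    const double Fcoef = 2.0 / std::log2(farPlane + 1.0);
    return std::log2(std::max(1e-6, 1.0 + w)) * Fcoef - 1.0;
}

// Value the vertex shader stores in logz and the fragment shader writes to
// gl_FragDepth. Only meaningful for w > -1, since log2 needs a positive input.
double logDepthFrag(double w, double farPlane) {
    assert(farPlane > 0.0);
    assert(w > -1.0);
    const double Fcoef = 2.0 / std::log2(farPlane + 1.0);
    return std::log2(1.0 + w) * Fcoef * 0.5;
}
```

With `farPlane = 5.0e8`, a point at `w = 0` maps to depth 0, a point at `w = farPlane` maps to depth 1, and a point 1 unit in front of the camera lands at roughly 0.035, so depth values stay well spread out across many orders of magnitude of distance.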

Does anyone know what is wrong? Many thanks.

EDIT 1

By setting

glEnable(GL_DEPTH_CLAMP);

I got another incorrectly rendered scene, different from the one above.

EDIT 2

I think I had left out an important line, though adding it didn't give me a correctly rendered scene:

gl_Position.z *= gl_Position.w;

Without glEnable(GL_DEPTH_CLAMP), the scene now looks as follows:

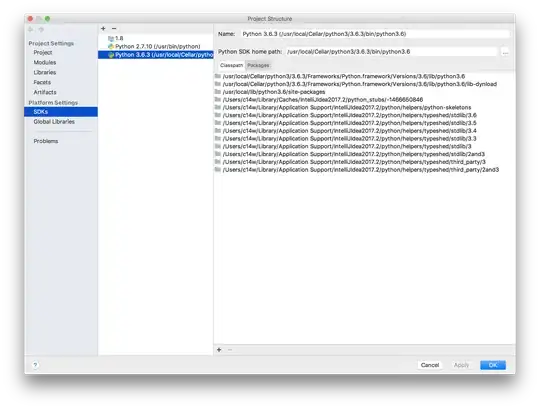

EDIT 3 (partly solved)

I found that I had made another mistake by declaring near and far as vertex attributes:

layout (location = 3) in float near;

layout (location = 4) in float far;

They should actually be uniforms:

uniform float near;

uniform float far;

and everything is now OK at small scale.

But there are still problems when I try to bring the scene to real scale by setting

#define SceneScale 1.0

which makes the Earth radius 6378137.0 m and each satellite a box of about 1 m × 1 m × 1 m. The Earth and the Moon render fine, but the two satellites appear to be torn apart.

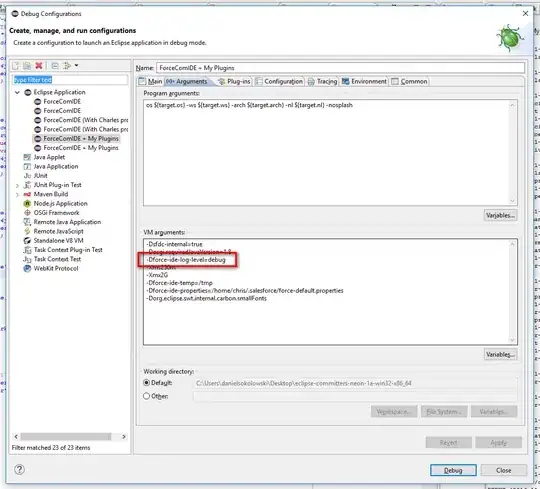
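
One way to see why a 1 m box can tear apart at this scale is to look at how coarse 32-bit floats become near the Earth radius. This is a sketch under the assumption that vertex positions end up as single-precision floats on the GPU (the shaders above use `vec3`/`mat4`); the helper name `floatSpacing` is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Gap between x and the next larger representable 32-bit float.
float floatSpacing(float x) {
    assert(std::isfinite(x));
    return std::nextafter(x, std::numeric_limits<float>::infinity()) - x;
}
```

`floatSpacing(6378137.0f)` is 0.5, so a coordinate near the Earth radius can only be stored in steps of half a metre. A satellite that is about 1 m across therefore has only a few representable positions per axis for all of its vertices once they are expressed in world coordinates.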

EDIT 4 (solved)

The remaining problem may be related to jitter, and can possibly be solved with the relative-to-center (RTC) rendering method, which can be found here

Newer methods such as RTE (relative to eye) can improve the rendering further, and I'm still working on that.

UPDATE: I solved it with the following code:

    glm::dmat4 model_sate1(1.0);
    model_sate1 = glm::translate(model_sate1, Sate1Pos);
    model_sate1 = glm::rotate(model_sate1, EA[0].Yaw, glm::dvec3(0.0, 0.0, 1.0));
    model_sate1 = glm::rotate(model_sate1, EA[0].Pitch, glm::dvec3(0.0, 1.0, 0.0));
    model_sate1 = glm::rotate(model_sate1, EA[0].Roll, glm::dvec3(1.0, 0.0, 0.0));

    SateShader.setMat4("model", model_sate1);
    SateShader.setMat4("view", view);
    SateShader.setMat4("projection", projection);

    modelview = view * model_sate1;
    glm::dvec3 Sate1PosV = glm::dvec3(modelview * glm::dvec4(Sate1Pos, 1.0));
    modelview[0].w = Sate1PosV.x; // rtc here
    modelview[1].w = Sate1PosV.y;
    modelview[2].w = Sate1PosV.z;
    SateShader.setMat4("ModelViewMat", modelview);

    Sate1.Draw(SateShader);

and in the vertex shader:

gl_Position = projection * ModelViewMat * vec4(position, 1.0f);
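
The reason RTC helps can be shown with a one-axis toy example. This is a minimal sketch, not the code I actually run: the function names and the numbers in the usage note are hypothetical, the view transform is reduced to a pure translation, and the only point is where the large subtraction happens (in double on the CPU, or in float after the vertex has already been rounded).

```cpp
#include <cassert>
#include <cmath>

// Naive path: the vertex is placed in world space as a 32-bit float first,
// then the eye position is subtracted. The world coordinate is rounded to
// the float spacing at that magnitude before the subtraction.
float viewCoordNaive(double center, double eye, float local) {
    // volatile keeps the compiler from carrying extra precision in a register
    volatile float world = static_cast<float>(center) + local;
    return world - static_cast<float>(eye);
}

// RTC path: the object's center is moved into view space in double precision,
// and only the small result is converted to float. The local vertex offset is
// then added to a small number, so it keeps its precision.
float viewCoordRtc(double center, double eye, float local) {
    const float centerInView = static_cast<float>(center - eye);
    return centerInView + local;
}
```

For example, with a satellite center at 7000000.0, the eye at 6999990.0 and a vertex 0.3 away from the center, the exact view coordinate is 10.3. The naive path returns 10.5, because the world coordinate snaps to a 0.5 grid, while the RTC path returns 10.3 to within float rounding.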

By applying the RTC method I was able to render the scene at real size. However, when the satellites start to move (driven by another program), there is no jitter, but they begin to blink (pop up and disappear) at the frequency of the data refresh rate.

I guess

- the satellite flies too fast in the real-scale scene.

- rendering the satellite model under such rapid movement requires a period of time that cannot be ignored.

EDIT 5 (dealing with the new blink problem)

I found out what causes the problem in the following link

and I solved it. See the comments of the link.

UPDATE: this problem seems to be hardware-related. It does not occur on my two desktop computers, but it does occur on my laptop.