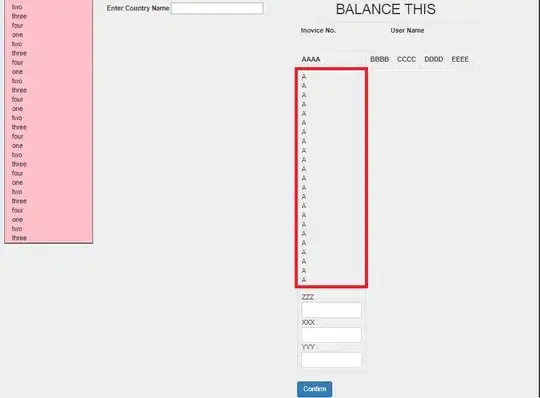

I'm trying to build an image classification model based on features extracted from the GLCM (grey-level co-occurrence matrix). I want to mask parts of some images to improve the model, and of course I don't want the GLCM to take the masked pixels into account. Based on the following post, I implemented this and ran a test to make sure that the GLCM works correctly for masked images:

1) Take an image and create a cropped version and a masked version (masking the same pixels that were cropped away).

2) Convert the images to `int32` and do the following:

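```python
import numpy as np
from skimage.feature import greycomatrix  # renamed graycomatrix in scikit-image >= 0.19

# img, crop_img and mask_img come from step 1, already converted to int32

# Add 1 to all pixels so grey level 0 is free, then set the masked pixels to 0
mask_img += 1
crop_img += 1
mask_img[:, img.shape[1] // 2:, 2] = 0  # mask the right half of channel 2

# distances and angles must be sequences; levels=257 covers the shifted range 1..256
glcm_crop = greycomatrix(crop_img[:, :, 2],
                         levels=257,
                         distances=[1],
                         angles=[0],
                         symmetric=True,
                         normed=True)

glcm_masked = greycomatrix(mask_img[:, :, 2],
                           levels=257,
                           distances=[1],
                           angles=[0],
                           symmetric=True,
                           normed=True)

# Discard the first row and column, which hold the pairs involving zero-valued (masked) pixels
glcm_masked = glcm_masked[1:, 1:, :, :]
glcm_crop = glcm_crop[1:, 1:, :, :]
```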
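A minimal sketch of the idea behind steps 1 and 2, assuming a toy random image instead of a real one and a small hand-written co-occurrence counter (`glcm_counts`, a hypothetical helper, not scikit-image's implementation) that is meant to mimic a single distance/angle slice of `greycomatrix(..., distances=[1], angles=[0], symmetric=True)` *without* normalization. Every pair that touches a masked pixel lands in row or column 0, so once those are sliced off the raw counts of the masked and cropped images should coincide:

```python
import numpy as np


def glcm_counts(img, levels):
    """Hypothetical helper: symmetric co-occurrence counts for distance 1, angle 0.

    Intended to mimic the *unnormalized* output of
    greycomatrix(..., distances=[1], angles=[0], symmetric=True)
    for a single distance/angle slice.
    """
    counts = np.zeros((levels, levels), dtype=np.int64)
    # Pair every pixel with its right-hand neighbour and count (i, j) occurrences
    np.add.at(counts, (img[:, :-1].ravel(), img[:, 1:].ravel()), 1)
    # symmetric=True: count each pair in both directions
    return counts + counts.T


rng = np.random.default_rng(0)
img = rng.integers(0, 4, size=(6, 8))  # toy image with grey levels 0..3
levels = 4 + 1                          # +1 because every pixel is shifted up by one
half = img.shape[1] // 2

crop = img[:, :half] + 1   # cropped version: left half only
masked = img + 1           # masked version: same size as the original...
masked[:, half:] = 0       # ...with the right half set to the reserved level 0

# Drop row/column 0: every pair that touches a masked pixel lives there
P_crop = glcm_counts(crop, levels)[1:, 1:]
P_masked = glcm_counts(masked, levels)[1:, 1:]

print(np.array_equal(P_crop, P_masked))  # True
```

This compares raw counts only, and it assumes the crop keeps exactly the columns that are left unmasked.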

So in this test, if the GLCM weren't affected by the masked pixels, I would expect the masked and cropped images to produce the same matrix. In reality, however, the matrices were different.
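A possible explanation worth checking is the order of normalization: with `normed=True`, `greycomatrix` divides by the total number of pairs *before* row and column 0 are sliced off, and for the masked image that total also includes the pairs touching masked pixels. The sketch below uses a toy random image and a hypothetical `glcm_counts` helper (not scikit-image itself) to compare normalizing then slicing against slicing then normalizing:

```python
import numpy as np


def glcm_counts(img, levels):
    """Hypothetical helper: symmetric co-occurrence counts for distance 1, angle 0."""
    counts = np.zeros((levels, levels), dtype=np.int64)
    # Pair every pixel with its right-hand neighbour
    np.add.at(counts, (img[:, :-1].ravel(), img[:, 1:].ravel()), 1)
    return counts + counts.T  # symmetric: count both directions


def normalize(p):
    """Divide by the total so the entries sum to 1 (as normed=True does per slice)."""
    return p / p.sum()


rng = np.random.default_rng(0)
img = rng.integers(0, 4, size=(6, 8))  # toy grey levels 0..3
levels = 5                              # shifted range 1..4 plus the reserved level 0
half = img.shape[1] // 2

crop = img[:, :half] + 1
masked = img + 1
masked[:, half:] = 0  # masked pixels -> level 0

crop_norm = normalize(glcm_counts(crop, levels))[1:, 1:]

# Normalize first, then slice (the order used in the question)
masked_norm_then_slice = normalize(glcm_counts(masked, levels))[1:, 1:]

# Slice first, then normalize
masked_slice_then_norm = normalize(glcm_counts(masked, levels)[1:, 1:])

print(np.allclose(masked_norm_then_slice, crop_norm))  # False
print(np.allclose(masked_slice_then_norm, crop_norm))  # True
print(masked_norm_then_slice.sum())  # fraction of pairs that avoid masked pixels
```

In this toy case the normalize-then-slice matrix is just the crop's matrix scaled down by the fraction of pairs that avoid masked pixels. With scikit-image, the analogous (untested) approach would be to call `greycomatrix(..., normed=False)`, discard `[1:, 1:, :, :]`, and then divide by `glcm.sum(axis=(0, 1), keepdims=True)`.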

Is my understanding of how GLCM works correct? Does it make sense in theory that those two matrices should be equal?