I am working with time-series data collected from a sensor at 5-minute intervals. Unfortunately, there are cases where the measured value (PV yield in watts) is suddenly 0 or very high, while the values before and after it are correct.

My goal is to identify these 'outliers' and, in a second step, replace each one with the mean of the previous and next value. I've experimented with three approaches so far, but they flag many 'outliers' that are not measurement errors, so I am looking for better approaches.
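The second step (replacing a flagged value with the mean of its neighbors) can be sketched with `shift`. This is a minimal sketch, assuming a regular 5-minute index and isolated faults; `replace_with_neighbor_mean` is a hypothetical helper name, and `mask` is only a placeholder for whichever detector ends up being used.

```python
import pandas as pd

def replace_with_neighbor_mean(s: pd.Series, mask: pd.Series) -> pd.Series:
    """Replace flagged samples with the mean of the previous and next sample.

    Hypothetical helper. Assumes a regular index and isolated faults: if a
    neighbor is itself flagged (or missing), the repaired value is NaN.
    """
    clean = s.where(~mask)  # flagged samples -> NaN so they can't repair a neighbor
    neighbor_mean = (clean.shift(1) + clean.shift(-1)) / 2
    return s.where(~mask, neighbor_mean)  # keep good samples, repair flagged ones

# Hypothetical readings; s.eq(0) is only a stand-in detector (it would also flag night-time zeros)
s = pd.Series([100.0, 120.0, 0.0, 140.0, 150.0])
mask = s.eq(0)
result = replace_with_neighbor_mean(s, mask)
print(result.tolist())  # [100.0, 120.0, 130.0, 140.0, 150.0]
```

Masking the flagged values before shifting means two adjacent faults produce NaN instead of averaging one bad value into another.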

Try 1: Classic outlier detection with IQR

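```python
import pandas as pd

def updateOutliersIQR(group):
    # 'yield' is a Python keyword, so group.yield is a SyntaxError; use bracket access
    Q1 = group['yield'].quantile(0.25)
    Q3 = group['yield'].quantile(0.75)
    IQR = Q3 - Q1
    # flag values outside the fences [Q1 - 1.5*IQR, Q3 + 1.5*IQR]
    outliers = (group['yield'] < (Q1 - 1.5 * IQR)) | (group['yield'] > (Q3 + 1.5 * IQR))
    print(outliers[outliers])
    return outliers

# calling the function on a per-day level
df.groupby(df.index.date).apply(updateOutliersIQR)
```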
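A self-contained sketch of the same IQR rule on a hypothetical day (the numbers are invented for illustration) shows one limitation: the rule looks only at the distribution of the day's values, not their order in time. When night-time zeros dominate the lower quartile, a midday drop to 0 stays inside the fences, while a high spike is caught. `iqr_outliers` is a hypothetical helper name.

```python
import pandas as pd

def iqr_outliers(s: pd.Series, k: float = 1.5) -> pd.Series:
    """Boolean mask of values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, q3 = s.quantile(0.25), s.quantile(0.75)
    iqr = q3 - q1
    return (s < q1 - k * iqr) | (s > q3 + k * iqr)

# Hypothetical day: night zeros, a midday ramp, and a dropout to 0 at index 7
day = pd.Series([0, 0, 0, 0, 100, 200, 300, 0, 300, 200, 100, 0, 0, 0, 0], dtype=float)
print(iqr_outliers(day).any())  # False: the dropout is not flagged

# Same day, but with a spike to 5000 at index 7 instead of a dropout
spiky = day.copy()
spiky[7] = 5000.0
spike_mask = iqr_outliers(spiky)
print(spike_mask[spike_mask].index.tolist())  # [7]
```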

Try 2: Kernel density estimation

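```python
import pandas as pd

def updateOutliersKDE(group):
    a = 0.9
    # parzen-weighted rolling sum over 3 samples (win_type requires SciPy)
    r = group['yield'].rolling(3, min_periods=1, win_type='parzen').sum()
    n = r.max()
    # flag intervals whose weighted sum exceeds 90% of the day's maximum
    outliers = r > n * a
    print(outliers[outliers])
    return outliers

# calling the function on a per-day level
df.groupby(df.index.date).apply(updateOutliersKDE)
```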
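Because the Try 2 threshold is relative to the day's own maximum of `r`, the point where `r` peaks always exceeds `0.9 * r.max()`, so at least one interval is flagged on every day with nonzero yield, fault or not. The sketch below illustrates this on a hypothetical fault-free day. As an assumption to avoid the SciPy dependency, a plain (unweighted) 3-sample rolling sum stands in for the parzen-weighted one.

```python
import pandas as pd

# Hypothetical fault-free day; a plain rolling sum stands in for win_type='parzen'
day = pd.Series([0, 100, 200, 300, 400, 350, 250, 100, 0], dtype=float)
a = 0.9
r = day.rolling(3, min_periods=1).sum()  # trailing window, as in Try 2
flagged = r > r.max() * a
print(flagged[flagged].index.tolist())  # [5, 6]
```

The flagged readings (350 and 250) are normal values, and the trailing window places the flags after the highest reading at index 4.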

Try 3: Median filter (as suggested by Jonnor)

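```python
import numpy as np
import pandas as pd

def median_filter(num_std=3):
    def _median_filter(x):
        _median = np.median(x)
        _std = np.std(x)
        # center sample of the window; x[-3] is only the center for a window of 5
        s = x[len(x) // 2]
        # keep the sample if it lies within median ± num_std * std, else use the median
        if _median - num_std * _std <= s <= _median + num_std * _std:
            return s
        return _median
    return _median_filter

# calling the function (raw=True passes each window as a NumPy array)
df['yield'].rolling(5, center=True).apply(median_filter(2), raw=True)
```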
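A self-contained run of the Try 3 filter on hypothetical readings with a single spike shows what it returns: the spike is replaced by the window median (not the neighbor mean), and with `center=True` the first and last two positions come out as NaN because the window is incomplete there. Filling those edges from the original series with `fillna` is one option, assumed here for illustration.

```python
import numpy as np
import pandas as pd

def median_filter(num_std=3):
    def _median_filter(x):
        median = np.median(x)
        std = np.std(x)
        s = x[len(x) // 2]  # center sample of the window
        if median - num_std * std <= s <= median + num_std * std:
            return s
        return median  # out of range: replace with the window median
    return _median_filter

# Hypothetical readings with one spike at index 3
s = pd.Series([100, 110, 120, 5000, 130, 140, 150], dtype=float)
out = s.rolling(5, center=True).apply(median_filter(2), raw=True)
print(out.tolist())  # [nan, nan, 120.0, 130.0, 130.0, nan, nan]

filled = out.fillna(s)  # keep the original values where the window was incomplete
print(filled.tolist())  # [100.0, 110.0, 120.0, 130.0, 130.0, 140.0, 150.0]
```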

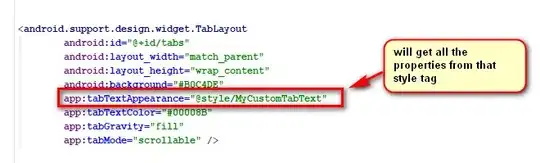

Edit: With Try 3, a window of 5 and a `num_std` of 3, it finally catches the massive outlier, but it also loses accuracy on the other (non-faulty) sensor measurements.
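An idealized derivation suggests why the `num_std` threshold in Try 3 is hard to tune: the spike itself inflates the window's standard deviation. Assume, hypothetically, a window of $n$ samples where $n-1$ values equal $v$ and the center value is $v + d$. `np.std` uses the population formula (`ddof=0`), and the median is $v$ for $n \ge 3$:

$$
\mu = v + \frac{d}{n}, \qquad
\sigma^2 = \frac{1}{n}\left[(n-1)\left(\frac{d}{n}\right)^2 + \left(\frac{(n-1)\,d}{n}\right)^2\right] = \frac{(n-1)\,d^2}{n^2}
$$

The center sample is replaced only if it lies outside $\text{median} \pm k\sigma$ (with $k$ = `num_std`):

$$
|d| > k\,\sigma = k\,\frac{|d|\sqrt{n-1}}{n} \iff k < \frac{n}{\sqrt{n-1}}, \qquad n = 5:\; k < 2.5
$$

Under this assumption, whether a lone spike in a window of 5 is replaced depends only on `num_std`, not on the spike's size. Real windows with unequal neighbors shift this bound, so it is a rough guide rather than an exact rule.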

Are there better approaches to detect the described 'outliers', or to smooth time-series data that has occasional sensor measurement errors?