I am trying to replicate an algorithm from a research paper that rates an image with a blur score.

Below is the function I have written. The comments describe what each step is trying to do.

    import cv2
    import numpy as np

    def calculate_blur(image_name):
        img_1 = cv2.imread(image_name)  # Read the image
        img_2 = np.fft.fft2(img_1)  # Perform a 2-dimensional FFT on the image
        img_3 = np.fft.fftshift(img_2)  # Find Fc by shifting the origin of F to the centre
        img_4 = np.fft.ifftshift(img_3)
        af = np.abs(img_4)  # Absolute value of the centred Fourier transform
        threshold = np.max(af) / 1000  # Threshold: maximum of the absolute values divided by 1000
        Th = np.sum(img_2 > threshold)  # Total number of pixels in F/img_2 whose value > threshold
        fm = Th / (img_1.shape[0] * img_1.shape[1])  # Image quality measure (fm)
        if fm > 0.05:  # Assuming fm > 0.05 means not blurred (as I inferred from the results in the paper)
            value = 'Not Blur'
        else:
            value = 'Blur'
        return fm, value

For close-up face pictures taken in good light, the IQM score comes out greater than 0.05 even when the image is blurry, whereas for normal pictures (taken at an appropriate distance from the camera) the results look correct.

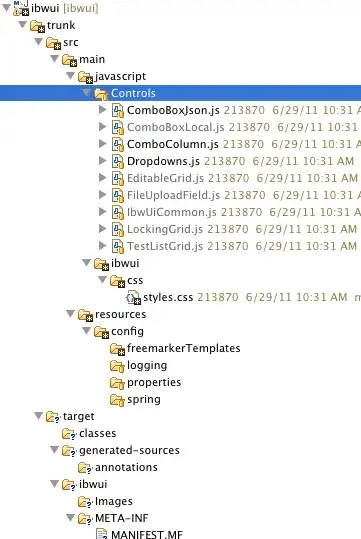

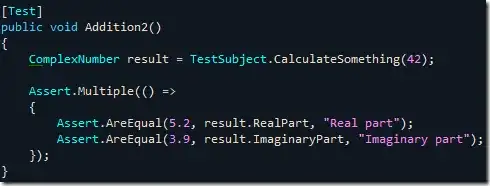

I am sharing 2 pictures.

The first picture has a score of (0.2822434750792747, 'Not Blur')

The second picture has a score of (0.035472916666666666, 'Blur')

I am trying to understand how exactly this works behind the scenes, i.e. how it decides between the two, and how I can improve my function and the detection.
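A few details in the function above may be worth checking. This is offered as a sketch under stated assumptions, not a confirmed fix. `cv2.imread` returns a 3-channel array by default, and `np.fft.fft2` transforms the last two axes, so for a colour image it would run over the width and channel axes instead of the two spatial ones. The `ifftshift` undoes the preceding `fftshift`, and the threshold is compared against the complex array `img_2` instead of the magnitudes in `af`. The variant below assumes the measure is meant to be the fraction of frequency components whose magnitude exceeds max/1000 on a grayscale image. The names `frequency_blur_measure`, `blur_measure_from_file`, `divisor` and `cutoff` are hypothetical, and the 0.05 cutoff is still the assumption from the question, not a value taken from the paper.

```python
import numpy as np


def frequency_blur_measure(gray, divisor=1000.0):
    """Fraction of frequency components whose magnitude exceeds max/divisor.

    `gray` is assumed to be a 2-D (single-channel) image array.
    """
    gray = np.asarray(gray, dtype=np.float64)
    if gray.ndim != 2:
        raise ValueError("expected a 2-D grayscale image")

    # Centred 2-D transform over the two spatial axes.
    spectrum = np.fft.fftshift(np.fft.fft2(gray))
    magnitude = np.abs(spectrum)

    # Threshold taken relative to the largest magnitude (max / 1000 by default).
    threshold = magnitude.max() / divisor

    # Count components by magnitude, not by comparing complex values.
    count = np.count_nonzero(magnitude > threshold)
    return count / gray.size


def blur_measure_from_file(image_name, cutoff=0.05):
    """Load an image as grayscale and label it using the assumed cutoff."""
    import cv2  # imported here so the measure itself needs only NumPy

    gray = cv2.imread(image_name, cv2.IMREAD_GRAYSCALE)
    if gray is None:  # cv2.imread returns None when the file cannot be read
        raise FileNotFoundError(image_name)
    fm = frequency_blur_measure(gray)
    return fm, ("Not Blur" if fm > cutoff else "Blur")
```

Because the score is normalised by the number of pixels in a single channel, it stays between 0 and 1. The shift does not change the count, so it is kept only to mirror the steps described in the comments. Whether a fixed cutoff such as 0.05 separates blurry close-ups from sharp images is a separate question that this sketch does not settle.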