I was wondering if there is a way to determine if an image is blurry or not by analyzing the image data.

-

7Related question that has a good answer, but also a more involved question formulation. http://stackoverflow.com/questions/5180327/detection-of-blur-in-images-video-sequences – Mr. Developerdude Jul 27 '12 at 18:34

12 Answers

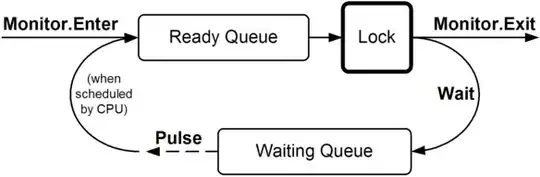

Another very simple way to estimate the sharpness of an image is to use a Laplace (or LoG) filter and simply pick the maximum value. Using a robust measure like a 99.9% quantile is probably better if you expect noise (i.e. picking the Nth-highest contrast instead of the highest contrast.) If you expect varying image brightness, you should also include a preprocessing step to normalize image brightness/contrast (e.g. histogram equalization).

I've implemented Simon's suggestion and this one in Mathematica, and tried it on a few test images:

The first test blurs the test images using a Gaussian filter with a varying kernel size, then calculates the FFT of the blurred image and takes the average of the 90% highest frequencies:

testFft[img_] := Table[

(

blurred = GaussianFilter[img, r];

fft = Fourier[ImageData[blurred]];

{w, h} = Dimensions[fft];

windowSize = Round[w/2.1];

Mean[Flatten[(Abs[

fft[[w/2 - windowSize ;; w/2 + windowSize,

h/2 - windowSize ;; h/2 + windowSize]]])]]

), {r, 0, 10, 0.5}]

Result in a logarithmic plot:

The 5 lines represent the 5 test images, the X axis represents the Gaussian filter radius. The graphs are decreasing, so the FFT is a good measure for sharpness.

This is the code for the "highest LoG" blurriness estimator: It simply applies an LoG filter and returns the brightest pixel in the filter result:

testLaplacian[img_] := Table[

(

blurred = GaussianFilter[img, r];

Max[Flatten[ImageData[LaplacianGaussianFilter[blurred, 1]]]];

), {r, 0, 10, 0.5}]

Result in a logarithmic plot:

The spread for the un-blurred images is a little better here (2.5 vs 3.3), mainly because this method only uses the strongest contrast in the image, while the FFT is essentially a mean over the whole image. The functions are also decreasing faster, so it might be easier to set a "blurry" threshold.

- 15,662

- 5

- 48

- 74

-

1What if I'm after the measure of the local blur. Namely, a Photo has areas where it is blurred and where it is sharp. I want to have a map which estimate the blur level per pixel. – Royi Nov 02 '12 at 07:49

-

5@Drazick: I'm not sure if that's even possible. For example, look at the Lena image: There are large areas where there's no contrast (e.g. Lena's skin) although the area is in focus. I can't think of a way to tell if such a smooth area is "blurry", or to distinguish it from an out-of-focus area. You should ask this as a separate question (maybe on DSP.SE). Maybe someone else has better ideas. – Niki Nov 02 '12 at 08:28

-

2

-

@pparescasellas Would you be willing to share your implementations. I'd be curious to see them. – chappjc Jan 16 '14 at 02:30

-

-

@pparescasellas: why did you swapped the quadrants of dft image? Why do you place the areas with highest frequency into the middle? You can skip those 17 lines of the code. Also you forgot to write important hint that the code will fail if you pass non-grayscale image. But it will crash on line `kernelGauss.at

( 0, 1 ) = 0.125;` anyway. – John Boe Jun 19 '16 at 10:34 -

Should the thresholds also change with change in the camera ? E.g blur image might not be classified a blurred if taken from an HDR camera ? – Prakruti Aug 31 '17 at 06:31

-

In the `LoG` algorithm described above, you apply the `GaussianFilter` and then the `LaplacianGaussianFilter`... Wouldn't be enough to do just the `LaplacianGaussianFilter` only? And isn't `LaplacianGaussianFilter` equivalent to apply the `GaussianFilter` filter and then the Laplace convolution matrix? Just asking because the libraries I am using (Flutter) only allow to apply a 3x3 kernel size to an image. They also have the Gaussian filter method, but they don't have the `LaplacianOfGaussian` method – Nicolas Jun 11 '20 at 16:17

Yes, it is. Compute the Fast Fourier Transform and analyse the result. The Fourier transform tells you which frequencies are present in the image. If there is a low amount of high frequencies, then the image is blurry.

Defining the terms 'low' and 'high' is up to you.

Edit:

As stated in the comments, if you want a single float representing the blurryness of a given image, you have to work out a suitable metric.

nikie's answer provide such a metric. Convolve the image with a Laplacian kernel:

1

1 -4 1

1

And use a robust maximum metric on the output to get a number which you can use for thresholding. Try to avoid smoothing too much the images before computing the Laplacian, because you will only find out that a smoothed image is indeed blurry :-).

- 2,301

- 2

- 22

- 37

- 10,378

- 7

- 39

- 55

-

16

-

4Unless your image is cyclic, you will usually have sharp edges at the borders of the image that lead to very high frequencies – Niki Oct 14 '11 at 11:04

-

2you usually virtually extend your image to avoid this effect. you can also use small windows to compute local fft. – Simon Bergot Oct 14 '11 at 11:42

-

7Only one point that's hugely important is that you have to know (at least roughly) what your expected *pre-blurred* image (frequency) content was. This is true since the frequency spectrum is going to be that of the original image times that of the blurring filter. Thus, if the original image already had predominately low frequencies, how can you tell whether it was blurred? – Chris A. Oct 14 '11 at 14:16

-

3If you take a photo of a blank white chart you have no way of telling whether the image is blurry or not. I think the OP wants some absolute sharpness measurement. the preblurred image might not exist at all. You have to work a bit to come with a correct metric, but fft can help with this problem. In this perspective, nickie's answer is better than mine. – Simon Bergot Oct 14 '11 at 14:34

-

Two things about this answer: The "FFT" outputs I've seen are typically square, 2048×2048, symmetrical around the 45° line. I understand that the vertical axis is imaginary frequency, horizontal axis is real frequency, and pixel intensity is greater where there is more energy at the intersection of those frequencies. But just how do you perform these comparisons? Are the imaginary frequencies important? Second, re: the Laplacian: "use a robust maximum metric" means what, exactly? Apply a divisor so that the convolution pixel values don't peg at 255? (Or zero?) – Mike C Dec 12 '15 at 00:10

-

@Niki cc Simon i.e. ringing - which you can suppress with a suitable edge filter - Hamming etc. – jtlz2 Oct 05 '18 at 11:04

During some work with an auto-focus lens, I came across this very useful set of algorithms for detecting image focus. It's implemented in MATLAB, but most of the functions are quite easy to port to OpenCV with filter2D.

It's basically a survey implementation of many focus measurement algorithms. If you want to read the original papers, references to the authors of the algorithms are provided in the code. The 2012 paper by Pertuz, et al. Analysis of focus measure operators for shape from focus (SFF) gives a great review of all of these measure as well as their performance (both in terms of speed and accuracy as applied to SFF).

EDIT: Added MATLAB code just in case the link dies.

function FM = fmeasure(Image, Measure, ROI)

%This function measures the relative degree of focus of

%an image. It may be invoked as:

%

% FM = fmeasure(Image, Method, ROI)

%

%Where

% Image, is a grayscale image and FM is the computed

% focus value.

% Method, is the focus measure algorithm as a string.

% see 'operators.txt' for a list of focus

% measure methods.

% ROI, Image ROI as a rectangle [xo yo width heigth].

% if an empty argument is passed, the whole

% image is processed.

%

% Said Pertuz

% Abr/2010

if ~isempty(ROI)

Image = imcrop(Image, ROI);

end

WSize = 15; % Size of local window (only some operators)

switch upper(Measure)

case 'ACMO' % Absolute Central Moment (Shirvaikar2004)

if ~isinteger(Image), Image = im2uint8(Image);

end

FM = AcMomentum(Image);

case 'BREN' % Brenner's (Santos97)

[M N] = size(Image);

DH = Image;

DV = Image;

DH(1:M-2,:) = diff(Image,2,1);

DV(:,1:N-2) = diff(Image,2,2);

FM = max(DH, DV);

FM = FM.^2;

FM = mean2(FM);

case 'CONT' % Image contrast (Nanda2001)

ImContrast = inline('sum(abs(x(:)-x(5)))');

FM = nlfilter(Image, [3 3], ImContrast);

FM = mean2(FM);

case 'CURV' % Image Curvature (Helmli2001)

if ~isinteger(Image), Image = im2uint8(Image);

end

M1 = [-1 0 1;-1 0 1;-1 0 1];

M2 = [1 0 1;1 0 1;1 0 1];

P0 = imfilter(Image, M1, 'replicate', 'conv')/6;

P1 = imfilter(Image, M1', 'replicate', 'conv')/6;

P2 = 3*imfilter(Image, M2, 'replicate', 'conv')/10 ...

-imfilter(Image, M2', 'replicate', 'conv')/5;

P3 = -imfilter(Image, M2, 'replicate', 'conv')/5 ...

+3*imfilter(Image, M2, 'replicate', 'conv')/10;

FM = abs(P0) + abs(P1) + abs(P2) + abs(P3);

FM = mean2(FM);

case 'DCTE' % DCT energy ratio (Shen2006)

FM = nlfilter(Image, [8 8], @DctRatio);

FM = mean2(FM);

case 'DCTR' % DCT reduced energy ratio (Lee2009)

FM = nlfilter(Image, [8 8], @ReRatio);

FM = mean2(FM);

case 'GDER' % Gaussian derivative (Geusebroek2000)

N = floor(WSize/2);

sig = N/2.5;

[x,y] = meshgrid(-N:N, -N:N);

G = exp(-(x.^2+y.^2)/(2*sig^2))/(2*pi*sig);

Gx = -x.*G/(sig^2);Gx = Gx/sum(Gx(:));

Gy = -y.*G/(sig^2);Gy = Gy/sum(Gy(:));

Rx = imfilter(double(Image), Gx, 'conv', 'replicate');

Ry = imfilter(double(Image), Gy, 'conv', 'replicate');

FM = Rx.^2+Ry.^2;

FM = mean2(FM);

case 'GLVA' % Graylevel variance (Krotkov86)

FM = std2(Image);

case 'GLLV' %Graylevel local variance (Pech2000)

LVar = stdfilt(Image, ones(WSize,WSize)).^2;

FM = std2(LVar)^2;

case 'GLVN' % Normalized GLV (Santos97)

FM = std2(Image)^2/mean2(Image);

case 'GRAE' % Energy of gradient (Subbarao92a)

Ix = Image;

Iy = Image;

Iy(1:end-1,:) = diff(Image, 1, 1);

Ix(:,1:end-1) = diff(Image, 1, 2);

FM = Ix.^2 + Iy.^2;

FM = mean2(FM);

case 'GRAT' % Thresholded gradient (Snatos97)

Th = 0; %Threshold

Ix = Image;

Iy = Image;

Iy(1:end-1,:) = diff(Image, 1, 1);

Ix(:,1:end-1) = diff(Image, 1, 2);

FM = max(abs(Ix), abs(Iy));

FM(FM<Th)=0;

FM = sum(FM(:))/sum(sum(FM~=0));

case 'GRAS' % Squared gradient (Eskicioglu95)

Ix = diff(Image, 1, 2);

FM = Ix.^2;

FM = mean2(FM);

case 'HELM' %Helmli's mean method (Helmli2001)

MEANF = fspecial('average',[WSize WSize]);

U = imfilter(Image, MEANF, 'replicate');

R1 = U./Image;

R1(Image==0)=1;

index = (U>Image);

FM = 1./R1;

FM(index) = R1(index);

FM = mean2(FM);

case 'HISE' % Histogram entropy (Krotkov86)

FM = entropy(Image);

case 'HISR' % Histogram range (Firestone91)

FM = max(Image(:))-min(Image(:));

case 'LAPE' % Energy of laplacian (Subbarao92a)

LAP = fspecial('laplacian');

FM = imfilter(Image, LAP, 'replicate', 'conv');

FM = mean2(FM.^2);

case 'LAPM' % Modified Laplacian (Nayar89)

M = [-1 2 -1];

Lx = imfilter(Image, M, 'replicate', 'conv');

Ly = imfilter(Image, M', 'replicate', 'conv');

FM = abs(Lx) + abs(Ly);

FM = mean2(FM);

case 'LAPV' % Variance of laplacian (Pech2000)

LAP = fspecial('laplacian');

ILAP = imfilter(Image, LAP, 'replicate', 'conv');

FM = std2(ILAP)^2;

case 'LAPD' % Diagonal laplacian (Thelen2009)

M1 = [-1 2 -1];

M2 = [0 0 -1;0 2 0;-1 0 0]/sqrt(2);

M3 = [-1 0 0;0 2 0;0 0 -1]/sqrt(2);

F1 = imfilter(Image, M1, 'replicate', 'conv');

F2 = imfilter(Image, M2, 'replicate', 'conv');

F3 = imfilter(Image, M3, 'replicate', 'conv');

F4 = imfilter(Image, M1', 'replicate', 'conv');

FM = abs(F1) + abs(F2) + abs(F3) + abs(F4);

FM = mean2(FM);

case 'SFIL' %Steerable filters (Minhas2009)

% Angles = [0 45 90 135 180 225 270 315];

N = floor(WSize/2);

sig = N/2.5;

[x,y] = meshgrid(-N:N, -N:N);

G = exp(-(x.^2+y.^2)/(2*sig^2))/(2*pi*sig);

Gx = -x.*G/(sig^2);Gx = Gx/sum(Gx(:));

Gy = -y.*G/(sig^2);Gy = Gy/sum(Gy(:));

R(:,:,1) = imfilter(double(Image), Gx, 'conv', 'replicate');

R(:,:,2) = imfilter(double(Image), Gy, 'conv', 'replicate');

R(:,:,3) = cosd(45)*R(:,:,1)+sind(45)*R(:,:,2);

R(:,:,4) = cosd(135)*R(:,:,1)+sind(135)*R(:,:,2);

R(:,:,5) = cosd(180)*R(:,:,1)+sind(180)*R(:,:,2);

R(:,:,6) = cosd(225)*R(:,:,1)+sind(225)*R(:,:,2);

R(:,:,7) = cosd(270)*R(:,:,1)+sind(270)*R(:,:,2);

R(:,:,7) = cosd(315)*R(:,:,1)+sind(315)*R(:,:,2);

FM = max(R,[],3);

FM = mean2(FM);

case 'SFRQ' % Spatial frequency (Eskicioglu95)

Ix = Image;

Iy = Image;

Ix(:,1:end-1) = diff(Image, 1, 2);

Iy(1:end-1,:) = diff(Image, 1, 1);

FM = mean2(sqrt(double(Iy.^2+Ix.^2)));

case 'TENG'% Tenengrad (Krotkov86)

Sx = fspecial('sobel');

Gx = imfilter(double(Image), Sx, 'replicate', 'conv');

Gy = imfilter(double(Image), Sx', 'replicate', 'conv');

FM = Gx.^2 + Gy.^2;

FM = mean2(FM);

case 'TENV' % Tenengrad variance (Pech2000)

Sx = fspecial('sobel');

Gx = imfilter(double(Image), Sx, 'replicate', 'conv');

Gy = imfilter(double(Image), Sx', 'replicate', 'conv');

G = Gx.^2 + Gy.^2;

FM = std2(G)^2;

case 'VOLA' % Vollath's correlation (Santos97)

Image = double(Image);

I1 = Image; I1(1:end-1,:) = Image(2:end,:);

I2 = Image; I2(1:end-2,:) = Image(3:end,:);

Image = Image.*(I1-I2);

FM = mean2(Image);

case 'WAVS' %Sum of Wavelet coeffs (Yang2003)

[C,S] = wavedec2(Image, 1, 'db6');

H = wrcoef2('h', C, S, 'db6', 1);

V = wrcoef2('v', C, S, 'db6', 1);

D = wrcoef2('d', C, S, 'db6', 1);

FM = abs(H) + abs(V) + abs(D);

FM = mean2(FM);

case 'WAVV' %Variance of Wav...(Yang2003)

[C,S] = wavedec2(Image, 1, 'db6');

H = abs(wrcoef2('h', C, S, 'db6', 1));

V = abs(wrcoef2('v', C, S, 'db6', 1));

D = abs(wrcoef2('d', C, S, 'db6', 1));

FM = std2(H)^2+std2(V)+std2(D);

case 'WAVR'

[C,S] = wavedec2(Image, 3, 'db6');

H = abs(wrcoef2('h', C, S, 'db6', 1));

V = abs(wrcoef2('v', C, S, 'db6', 1));

D = abs(wrcoef2('d', C, S, 'db6', 1));

A1 = abs(wrcoef2('a', C, S, 'db6', 1));

A2 = abs(wrcoef2('a', C, S, 'db6', 2));

A3 = abs(wrcoef2('a', C, S, 'db6', 3));

A = A1 + A2 + A3;

WH = H.^2 + V.^2 + D.^2;

WH = mean2(WH);

WL = mean2(A);

FM = WH/WL;

otherwise

error('Unknown measure %s',upper(Measure))

end

end

%************************************************************************

function fm = AcMomentum(Image)

[M N] = size(Image);

Hist = imhist(Image)/(M*N);

Hist = abs((0:255)-255*mean2(Image))'.*Hist;

fm = sum(Hist);

end

%******************************************************************

function fm = DctRatio(M)

MT = dct2(M).^2;

fm = (sum(MT(:))-MT(1,1))/MT(1,1);

end

%************************************************************************

function fm = ReRatio(M)

M = dct2(M);

fm = (M(1,2)^2+M(1,3)^2+M(2,1)^2+M(2,2)^2+M(3,1)^2)/(M(1,1)^2);

end

%******************************************************************

A few examples of OpenCV versions:

// OpenCV port of 'LAPM' algorithm (Nayar89)

double modifiedLaplacian(const cv::Mat& src)

{

cv::Mat M = (Mat_<double>(3, 1) << -1, 2, -1);

cv::Mat G = cv::getGaussianKernel(3, -1, CV_64F);

cv::Mat Lx;

cv::sepFilter2D(src, Lx, CV_64F, M, G);

cv::Mat Ly;

cv::sepFilter2D(src, Ly, CV_64F, G, M);

cv::Mat FM = cv::abs(Lx) + cv::abs(Ly);

double focusMeasure = cv::mean(FM).val[0];

return focusMeasure;

}

// OpenCV port of 'LAPV' algorithm (Pech2000)

double varianceOfLaplacian(const cv::Mat& src)

{

cv::Mat lap;

cv::Laplacian(src, lap, CV_64F);

cv::Scalar mu, sigma;

cv::meanStdDev(lap, mu, sigma);

double focusMeasure = sigma.val[0]*sigma.val[0];

return focusMeasure;

}

// OpenCV port of 'TENG' algorithm (Krotkov86)

double tenengrad(const cv::Mat& src, int ksize)

{

cv::Mat Gx, Gy;

cv::Sobel(src, Gx, CV_64F, 1, 0, ksize);

cv::Sobel(src, Gy, CV_64F, 0, 1, ksize);

cv::Mat FM = Gx.mul(Gx) + Gy.mul(Gy);

double focusMeasure = cv::mean(FM).val[0];

return focusMeasure;

}

// OpenCV port of 'GLVN' algorithm (Santos97)

double normalizedGraylevelVariance(const cv::Mat& src)

{

cv::Scalar mu, sigma;

cv::meanStdDev(src, mu, sigma);

double focusMeasure = (sigma.val[0]*sigma.val[0]) / mu.val[0];

return focusMeasure;

}

No guarantees on whether or not these measures are the best choice for your problem, but if you track down the papers associated with these measures, they may give you more insight. Hope you find the code useful! I know I did.

- 13,911

- 4

- 55

- 72

-

-

@mans I normally use 3, 5, or 7 depending on the resolution of the image. If you find you are needing to go higher than that, you may want to look at downsampling the image. – mevatron Oct 03 '16 at 01:09

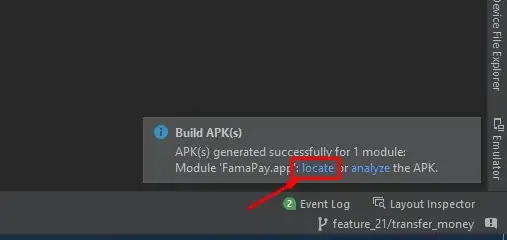

Building off of Nike's answer. Its straightforward to implement the laplacian based method with opencv:

short GetSharpness(char* data, unsigned int width, unsigned int height)

{

// assumes that your image is already in planner yuv or 8 bit greyscale

IplImage* in = cvCreateImage(cvSize(width,height),IPL_DEPTH_8U,1);

IplImage* out = cvCreateImage(cvSize(width,height),IPL_DEPTH_16S,1);

memcpy(in->imageData,data,width*height);

// aperture size of 1 corresponds to the correct matrix

cvLaplace(in, out, 1);

short maxLap = -32767;

short* imgData = (short*)out->imageData;

for(int i =0;i<(out->imageSize/2);i++)

{

if(imgData[i] > maxLap) maxLap = imgData[i];

}

cvReleaseImage(&in);

cvReleaseImage(&out);

return maxLap;

}

Will return a short indicating the maximum sharpness detected, which based on my tests on real world samples, is a pretty good indicator of if a camera is in focus or not. Not surprisingly, normal values are scene dependent but much less so than the FFT method which has to high of a false positive rate to be useful in my application.

- 7,333

- 1

- 25

- 36

-

What would be the threshhold value to say an image is bluryy? I have tested it. But its showing some varying results. Can you please help me out in this to set the threshold? – 2vision2 Feb 07 '13 at 06:28

-

Also tried your suggestion, but the numbers I get are a bit random. If I start a new question with regards to this particular implementation, would you care to take a look?\ – Stpn Jun 03 '13 at 19:28

-

@stpn The right threshold is scene dependent. In my application (CCTV) I'm using a default threshold of 300. For cameras where that is to low someone from support will change the configured value for that particular camera. – Yaur Jun 03 '13 at 21:32

-

-

We are looking for the highest contrast and since we are working with signed shorts -32767 is the lowest possible value. Its been 2.5 years since I wrote that code but IIRC I had issues using 16U. – Yaur Feb 28 '15 at 07:03

I came up with a totally different solution. I needed to analyse video still frames to find the sharpest one in every (X) frames. This way, I would detect motion blur and/or out of focus images.

I ended up using Canny Edge detection and I got VERY VERY good results with almost every kind of video (with nikie's method, I had problems with digitalized VHS videos and heavy interlaced videos).

I optimized the performance by setting a region of interest (ROI) on the original image.

Using EmguCV :

//Convert image using Canny

using (Image<Gray, byte> imgCanny = imgOrig.Canny(225, 175))

{

//Count the number of pixel representing an edge

int nCountCanny = imgCanny.CountNonzero()[0];

//Compute a sharpness grade:

//< 1.5 = blurred, in movement

//de 1.5 à 6 = acceptable

//> 6 =stable, sharp

double dSharpness = (nCountCanny * 1000.0 / (imgCanny.Cols * imgCanny.Rows));

}

- 3,714

- 3

- 38

- 62

-

Hey @Goladorak84, thanks for this. Can I ask you chose to multiple by 1000.0 inside dSharpness? – Roi Mulia Jan 13 '21 at 23:12

-

3this works amazing (the best one I tested in this question). Here is python implementation for those who need. `image_canny = cv2.Canny(image, 175, 225);nonzero_ratio = numpy.count_nonzero(image_canny) * 1000.0 / image_canny.size;return nonzero_ratio;` – smttsp Sep 14 '22 at 18:23

-

@RoiMulia If I remember, it was an arbitrary number based on trial and error. I should have given it a better name :) – Goldorak84 Sep 15 '22 at 12:36

Thanks nikie for that great Laplace suggestion. OpenCV docs pointed me in the same direction: using python, cv2 (opencv 2.4.10), and numpy...

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

numpy.max(cv2.convertScaleAbs(cv2.Laplacian(gray, 3)))

result is between 0-255. I found anything over 200ish is very in focus, and by 100, it's noticeably blurry. the max never really gets much under 20 even if it's completely blurred.

-

5I got 255 for 3 of my photos. And for one perfectly focused photo I got 108. So, I think the method's effectiveness depends on something. – WindRider Jun 02 '18 at 15:26

-

Agreed with @WindWider. Sample image where this fails is [this image](http://photos1.blogger.com/blogger/4886/2467/1600/23%20-%20Blurry%20Megan%20and%20Colosseum%20at%20night.jpg) I think the reason is that even though the image is shaky, the contrast of image and corresponding intensity differences between pixels is large , due to which Laplacian Values are relatively large. Please correct me if I am wrong. – Resham Wadhwa Jun 28 '18 at 08:59

-

-

1@ggez44 This is my preferred answer - but the value is a function of the number of pixels in the image. Do you know how this scales theoretically? I could ask it as a new question but is likely to get shot down. Thanks! – jtlz2 Feb 17 '20 at 07:37

One way which I'm currently using measures the spread of edges in the image. Look for this paper:

@ARTICLE{Marziliano04perceptualblur,

author = {Pina Marziliano and Frederic Dufaux and Stefan Winkler and Touradj Ebrahimi},

title = {Perceptual blur and ringing metrics: Application to JPEG2000,” Signal Process},

journal = {Image Commun},

year = {2004},

pages = {163--172} }

It's usually behind a paywall but I've seen some free copies around. Basically, they locate vertical edges in an image, and then measure how wide those edges are. Averaging the width gives the final blur estimation result for the image. Wider edges correspond to blurry images, and vice versa.

This problem belongs to the field of no-reference image quality estimation. If you look it up on Google Scholar, you'll get plenty of useful references.

EDIT

Here's a plot of the blur estimates obtained for the 5 images in nikie's post. Higher values correspond to greater blur. I used a fixed-size 11x11 Gaussian filter and varied the standard deviation (using imagemagick's convert command to obtain the blurred images).

If you compare images of different sizes, don't forget to normalize by the image width, since larger images will have wider edges.

Finally, a significant problem is distinguishing between artistic blur and undesired blur (caused by focus miss, compression, relative motion of the subject to the camera), but that is beyond simple approaches like this one. For an example of artistic blur, have a look at the Lenna image: Lenna's reflection in the mirror is blurry, but her face is perfectly in focus. This contributes to a higher blur estimate for the Lenna image.

- 21,621

- 10

- 84

- 126

Answers above elucidated many things, but I think it is useful to make a conceptual distinction.

What if you take a perfectly on-focus picture of a blurred image?

The blurring detection problem is only well posed when you have a reference. If you need to design, e.g., an auto-focus system, you compare a sequence of images taken with different degrees of blurring, or smoothing, and you try to find the point of minimum blurring within this set. I other words you need to cross reference the various images using one of the techniques illustrated above (basically--with various possible levels of refinement in the approach--looking for the one image with the highest high-frequency content).

- 2,392

- 18

- 29

-

2In other words it's a relative notion, it's only possible to tell if an image is more or less blurred than another similar image. i.e. if it has more or less high frequency content in its FFT. Particular case : what if the image has adjacent pixels with the maximum and minimum luminosity ? For example a completely black pixel next to a completely white pixel. In this case it's a perfect focus, else there would be a smoother transition from black to white. Perfect focus not likely in photography, but the question doesn't specify the source of the image (it could be computer generated). – Ben Jan 13 '16 at 22:53

I tried solution based on Laplacian filter from this post. It didn't help me. So, I tried the solution from this post and it was good for my case (but is slow):

import cv2

image = cv2.imread("test.jpeg")

height, width = image.shape[:2]

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

def px(x, y):

return int(gray[y, x])

sum = 0

for x in range(width-1):

for y in range(height):

sum += abs(px(x, y) - px(x+1, y))

Less blurred image has maximum sum value!

You can also tune speed and accuracy by changing step, e.g.

this part

for x in range(width - 1):

you can replace with this one

for x in range(0, width - 1, 10):

- 2,152

- 1

- 23

- 28

-

for those who tries to use this code: it is not efficient btw. as far as I understood, you shift horizontally by 1 pixel and subtract it. find the sum of absolute difference. you can do it as `gray_f = float(gray); diff = gray_f[:-1, :] - gray_f[1:, :]; sum(np.abs(diff));`. I didn't test this but should be something like this which would speed up the code a lot. – smttsp Sep 13 '22 at 22:46

Matlab code of two methods that have been published in highly regarded journals (IEEE Transactions on Image Processing) are available here: https://ivulab.asu.edu/software

check the CPBDM and JNBM algorithms. If you check the code it's not very hard to be ported and incidentally it is based on the Marzialiano's method as basic feature.

- 2,389

- 2

- 16

- 19

i implemented it use fft in matlab and check histogram of the fft compute mean and std but also fit function can be done

fa = abs(fftshift(fft(sharp_img)));

fb = abs(fftshift(fft(blured_img)));

f1=20*log10(0.001+fa);

f2=20*log10(0.001+fb);

figure,imagesc(f1);title('org')

figure,imagesc(f2);title('blur')

figure,hist(f1(:),100);title('org')

figure,hist(f2(:),100);title('blur')

mf1=mean(f1(:));

mf2=mean(f2(:));

mfd1=median(f1(:));

mfd2=median(f2(:));

sf1=std(f1(:));

sf2=std(f2(:));

- 8,605

- 39

- 41

- 68

- 362

- 4

- 11

That's what I do in Opencv to detect focus quality in a region:

Mat grad;

int scale = 1;

int delta = 0;

int ddepth = CV_8U;

Mat grad_x, grad_y;

Mat abs_grad_x, abs_grad_y;

/// Gradient X

Sobel(matFromSensor, grad_x, ddepth, 1, 0, 3, scale, delta, BORDER_DEFAULT);

/// Gradient Y

Sobel(matFromSensor, grad_y, ddepth, 0, 1, 3, scale, delta, BORDER_DEFAULT);

convertScaleAbs(grad_x, abs_grad_x);

convertScaleAbs(grad_y, abs_grad_y);

addWeighted(abs_grad_x, 0.5, abs_grad_y, 0.5, 0, grad);

cv::Scalar mu, sigma;

cv::meanStdDev(grad, /* mean */ mu, /*stdev*/ sigma);

focusMeasure = mu.val[0] * mu.val[0];

- 1,625

- 1

- 17

- 15