1.

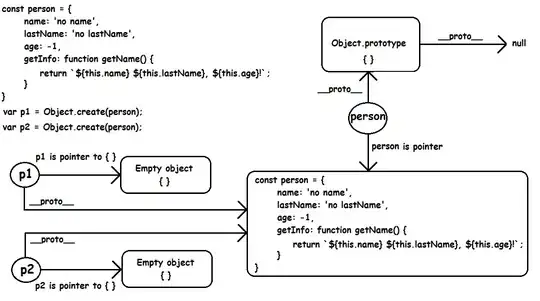

Given the "ROI" supervision you have, I strongly recommend exploring GrabCut (as proposed by YoniChechnik):

Rother C, Kolmogorov V, Blake A. "GrabCut": interactive foreground extraction using iterated graph cuts. ACM Transactions on Graphics (TOG). 2004.

To get a feel for how this works, you can try PowerPoint's "background removal" tool, which is based on the GrabCut algorithm. This is how it looks in PowerPoint:

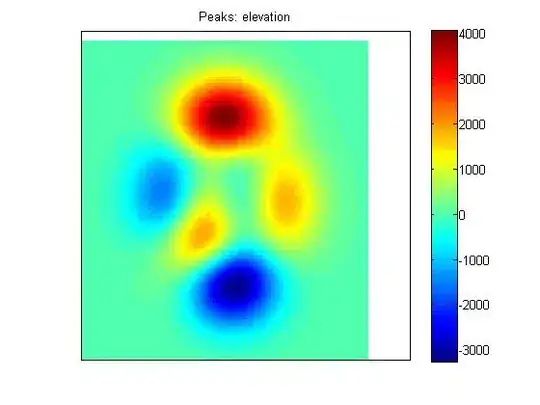

GrabCut segments the foreground object in a selected ROI based mainly on the foreground/background color distributions, and less on edge/boundary information, though that extra information can be integrated into the formulation.

OpenCV seems to have a basic implementation of GrabCut, see here.

2.

If you are seeking a method that uses only the boundary information, you might find this answer useful.

3.

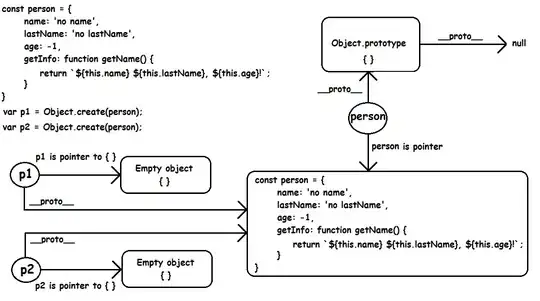

An alternative method is to use NCuts:

Shi J, Malik J. Normalized cuts and image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2000.

If you have a very reliable edge map, you can modify the "affinity matrix" NCuts works with to be a binary matrix:

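$$
w_{ij} = \begin{cases}
0 & \text{if there is a boundary between } i \text{ and } j \\
1 & \text{if there is no boundary between } i \text{ and } j \\
0 & \text{if } i \text{ and } j \text{ are not neighbors of each other}
\end{cases}
$$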

NCuts can be viewed as a way to estimate "robust connected components".
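To make the binary affinity concrete, here is a small sketch that builds it for a 4-connected pixel grid. Two things are assumptions of mine: the edge map is a per-pixel boolean array, and a "boundary between i and j" means that at least one of the two neighboring pixels is marked as an edge. The function name `binary_affinity` is hypothetical, and the dense matrix is only practical for small images; a real pipeline would use a sparse matrix and pass it to an NCuts solver.

```python
import numpy as np


def binary_affinity(edge_map):
    """Dense binary affinity matrix for a 4-connected pixel grid.

    edge_map: 2-D boolean array, True on boundary pixels (assumed convention).
    Returns W of shape (h*w, h*w) with W[i, j] = 1 only for neighboring
    pixels i, j that have no boundary between them.
    """
    edge_map = np.asarray(edge_map, dtype=bool)
    h, w = edge_map.shape
    idx = np.arange(h * w).reshape(h, w)  # row-major pixel index
    W = np.zeros((h * w, h * w), dtype=np.uint8)

    # Horizontal neighbors: (r, c) and (r, c + 1).
    ok = ~(edge_map[:, :-1] | edge_map[:, 1:])
    a, b = idx[:, :-1][ok], idx[:, 1:][ok]
    W[a, b] = 1
    W[b, a] = 1

    # Vertical neighbors: (r, c) and (r + 1, c).
    ok = ~(edge_map[:-1, :] | edge_map[1:, :])
    a, b = idx[:-1, :][ok], idx[1:, :][ok]
    W[a, b] = 1
    W[b, a] = 1

    return W
```

With such a matrix, regions enclosed by the edge map have no links to the outside, which is what makes the "connected components" reading above plausible; NCuts adds robustness when the edge map has small gaps.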