I am using Apache Spark 2.4.4 on YARN for better job scheduling and to run multiple jobs concurrently. However, my job stays in the ACCEPTED state and never moves forward.

My cluster consists of 10 nodes (1 master and 9 slaves), each with 8 CPUs and 16 GB of memory. YARN appears to be healthy: I can see all the nodes in the UI and none of them are marked unhealthy. Still, my jobs stay in the ACCEPTED state for a long time and eventually end up FAILED. Every article I have read says that "Yarn doesn't have enough resources to allocate", but my cluster is fairly big and I am running a small job. Any help would be appreciated.

yarn-site.xml

<configuration>

<property>

<description>The hostname of the RM.</description>

<name>yarn.resourcemanager.hostname</name>

<value>${hostname}</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>12288</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>12288</value>

</property>

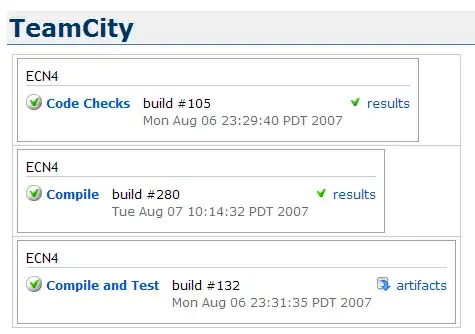

</configuration>Yarn UI

Applications

Fair scheduler options

<?xml version="1.0" encoding="UTF-8"?>
<allocations>
  <defaultQueueSchedulingPolicy>fair</defaultQueueSchedulingPolicy>
  <queue name="prod">
    <weight>40</weight>
    <schedulingPolicy>fifo</schedulingPolicy>
  </queue>
  <queue name="dev">
    <weight>60</weight>
    <queue name="eng" />
    <queue name="science" />
  </queue>
  <queuePlacementPolicy>
    <rule name="specified" create="false" />
    <rule name="primaryGroup" create="false" />
    <rule name="default" queue="dev.eng" />
  </queuePlacementPolicy>
</allocations>

Spark submit command

./bin/spark-submit --class Main --master yarn --deploy-mode cluster --driver-memory 1g --executor-memory 1g aggregator-1.0-SNAPSHOT.jar
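
To show why I think memory should not be the bottleneck, here is a rough back-of-the-envelope check of what this command should request compared with what the cluster offers. It is only a sketch under several assumptions that are not stated anywhere in my configuration: Spark's default memory overhead (the larger of 384 MB or 10% of the requested memory), Spark's default of 2 executors since I do not pass `--num-executors`, and container requests being rounded up to a multiple of 1024 MB. The constant and function names are made up for illustration.

```python
import math

# Values taken from yarn-site.xml and the spark-submit command above.
NODE_MEMORY_MB = 12288   # yarn.nodemanager.resource.memory-mb
WORKER_NODES = 9         # 9 slaves run NodeManagers
DRIVER_MB = 1024         # --driver-memory 1g
EXECUTOR_MB = 1024       # --executor-memory 1g

# Assumptions (not set explicitly in my configuration).
NUM_EXECUTORS = 2        # assumed Spark default when --num-executors is absent
INCREMENT_MB = 1024      # assumed rounding granularity for container requests
MIN_OVERHEAD_MB = 384    # assumed Spark default minimum memory overhead
OVERHEAD_FACTOR = 0.10   # assumed Spark default overhead fraction


def container_mb(requested_mb, increment_mb=INCREMENT_MB):
    """Estimate the YARN container size for a given Spark memory setting."""
    overhead = max(MIN_OVERHEAD_MB, int(requested_mb * OVERHEAD_FACTOR))
    total = requested_mb + overhead
    # Round up to the next multiple of the allocation increment.
    return math.ceil(total / increment_mb) * increment_mb


# In cluster mode the driver runs inside the application master container.
driver_container = container_mb(DRIVER_MB)
executor_container = container_mb(EXECUTOR_MB)
total_requested = driver_container + NUM_EXECUTORS * executor_container
cluster_capacity = NODE_MEMORY_MB * WORKER_NODES

print(f"driver container:   {driver_container} MB")
print(f"executor container: {executor_container} MB")
print(f"total requested:    {total_requested} MB")
print(f"cluster capacity:   {cluster_capacity} MB")
```

If these assumptions hold, the job asks for roughly 6 GB out of about 108 GB of memory that the NodeManagers advertise, which is why the "not enough resources" explanation does not seem to fit my case.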