In Databricks Runtime 6.6, I can successfully run a shell command like the following:

```
%sh ls /dbfs/FileStore/tables
```

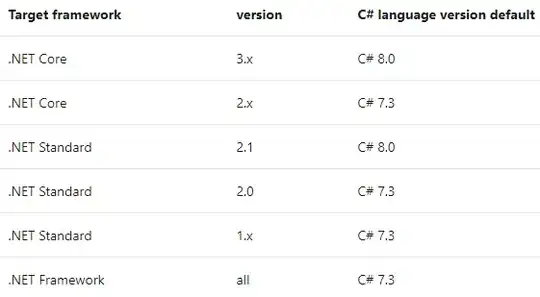

However, in Runtime 7 the same command no longer works. Is there any way to access `/dbfs/FileStore` directly in Runtime 7? I need to run shell commands to unzip a zip archive of Parquet files stored in `/dbfs/FileStore/tables`. This worked in Runtime 6.6, but Databricks' new "upgrade" breaks this simple, core functionality.
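For context, the unzip step I am trying to run from `%sh` looks roughly like the sketch below. It is a minimal sketch under assumptions, not a fix: `extract_zip` is a hypothetical helper name, the archive and output paths are placeholders, and it assumes the archive is readable at a local filesystem path, which is what the `/dbfs` path provided on Runtime 6.6. It uses `unzip` when it is installed and otherwise falls back to Python's built-in `python3 -m zipfile -e`.

```shell
#!/usr/bin/env bash
# Sketch: extract a zip archive (e.g. one containing Parquet files) into a directory.
# extract_zip is a hypothetical helper; all paths used with it are placeholders.
set -euo pipefail

extract_zip() {
  local archive="$1"   # path to the .zip file on the local filesystem
  local dest="$2"      # directory to extract into (created if missing)
  mkdir -p "$dest"
  if command -v unzip >/dev/null 2>&1; then
    # -o: overwrite existing files without prompting; -q: quiet output
    unzip -o -q "$archive" -d "$dest"
  else
    # Fallback: the command-line interface of Python's standard zipfile module
    python3 -m zipfile -e "$archive" "$dest"
  fi
}

# Example call with hypothetical paths (this is what worked via /dbfs on 6.6):
# extract_zip /dbfs/FileStore/tables/data.zip /dbfs/FileStore/tables/data
```

Either branch only works if the archive path is visible to the shell, so on Runtime 7 the problem remains getting at `/dbfs/FileStore/tables` in the first place.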

Not sure if this matters, but I am using Databricks Community Edition.