I have an EMR cluster for Spark with 2 instances, each configured as follows:

r4.2xlarge

8 vCores

So the cluster has 16 vCores in total, and YARN reports the same number of VCores:

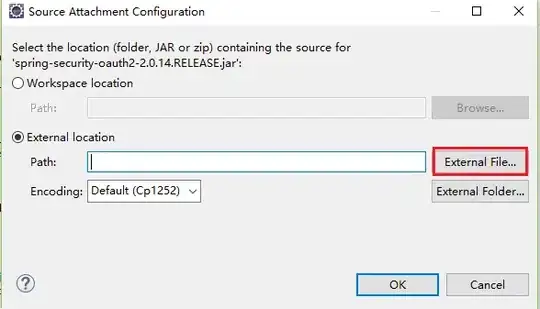

I submitted a Spark Streaming job with the parameters `--num-executors 2 --executor-cores 5`. I assumed it would use 2 * 5 = 10 vCores for the executors, but YARN shows it using only 2 vCores in total from the cluster (+1 for the driver).

In the Spark UI, however, the job is still running 10 tasks in parallel (2 * 5). It seems to be running 5 threads within each executor's single core.
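To make the mismatch concrete, here is a small sketch of the arithmetic behind my expectation. The variable names are mine and purely illustrative (this is not a Spark or YARN API); the values come from the cluster and the `spark-submit` flags described above.

```python
# Cluster size, as described above (2 x r4.2xlarge, 8 vCores each).
instances = 2
vcores_per_instance = 8

# Flags passed to spark-submit.
num_executors = 2    # --num-executors
executor_cores = 5   # --executor-cores

# Total vCores in the cluster; this matches what YARN reports.
cluster_vcores = instances * vcores_per_instance

# What I expected YARN to allocate to the executors.
expected_executor_vcores = num_executors * executor_cores

# What YARN actually shows as used (observed values, not computed by any API).
observed_executor_vcores = 2
observed_driver_vcores = 1
observed_total_vcores = observed_executor_vcores + observed_driver_vcores

# What the Spark UI shows as tasks running in parallel.
parallel_tasks = num_executors * executor_cores

print(f"cluster vCores:           {cluster_vcores}")
print(f"expected executor vCores: {expected_executor_vcores}")
print(f"observed vCores in YARN:  {observed_total_vcores}")
print(f"parallel tasks in Spark:  {parallel_tasks}")
```

So Spark's task parallelism matches the 10 cores I requested, while YARN's accounting shows only 3 vCores in use.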

I have read in other questions and in the documentation that `--executor-cores` maps to actual vCores, but here it seems to only run the tasks as threads. Is my understanding correct?