I want to generate datasets for perception and grasping using a physics engine. I tried importing the 3D models recently released by Google Research ( https://app.ignitionrobotics.org/GoogleResearch ) into Drake to create a segmentation dataset for a variety of shoes, i.e., drop the meshes into a bin, let them come to rest, and read the RGB, depth, and segmentation images.

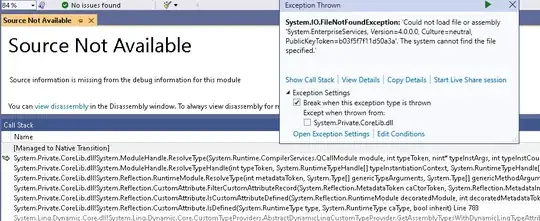

However, specifying the object meshes (.obj files) as collision geometry in Drake doesn't seem to work: the shoes penetrate the bin and keep falling into the abyss (see the attached snapshot).

I also noticed that the YCB objects have their collision geometry described using simple boxes and point contacts. Here is a visualization of this (which you may already be familiar with); green is the collision geometry.

To simulate the above, do I need to describe simple collision geometry for every object in the Google Research dataset? If so, how were such geometries generated: is there a tool for this, or was it done manually? Or should I enable hydroelastic contact simulation for this to work when meshes are used as collision geometry?

If Drake can handle any convex mesh, an alternative would be to use a convex hull of the original mesh as the collision geometry.

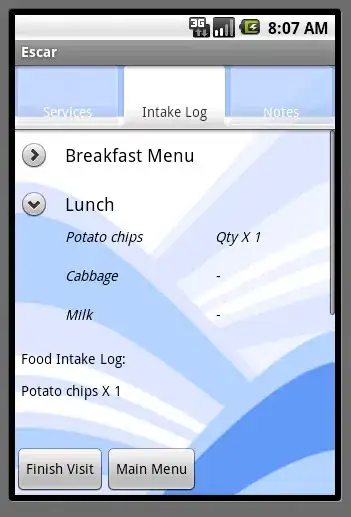

As an alternative, I also tried the same with PyBullet, using a plane instead of the bin. PyBullet seems to handle a mesh specified as collision geometry correctly, though. Here is a snapshot of the data in PyBullet.

An intermediate solution (doesn't address the grasping part yet):

After discussion with Sean & Russ, I created a convex hull of the triangle mesh (using Open3D). With this convex hull as the collision mesh and the `<drake:declare_convex/>` annotation added to the mesh tag, the shoes come to rest in a stable pose. I think this solution is good enough for generating the perception data with Drake. Here is the snapshot after using the solution below:
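For reference, the two steps described above (compute a hull, then point the collision mesh at it with the convex annotation) could be sketched roughly as follows. This is only an illustration under assumptions, not the exact files I used: the helper names `write_convex_hull` and `make_sdf`, the file names, the link name `body`, and the placeholder mass are all hypothetical, and the inertia tensor is left out entirely. The Open3D import is kept inside the hull function so the SDF helper can be used without Open3D installed.

```python
import xml.etree.ElementTree as ET

# XML namespace for Drake's custom SDF tags (declared as xmlns:drake on <sdf>).
DRAKE_NS = "http://drake.mit.edu"


def write_convex_hull(src_obj: str, dst_obj: str) -> None:
    """Compute the convex hull of a triangle mesh and save it as a mesh file."""
    import open3d as o3d  # lazy import: only needed for this step

    mesh = o3d.io.read_triangle_mesh(src_obj)
    hull, _ = mesh.compute_convex_hull()  # returns (hull mesh, point indices)
    o3d.io.write_triangle_mesh(dst_obj, hull)


def make_sdf(model_name: str, visual_obj: str, collision_obj: str,
             mass: float = 0.3) -> str:
    """Build an SDF string: original mesh for visuals, hull for collision."""
    ET.register_namespace("drake", DRAKE_NS)
    sdf = ET.Element("sdf", {"version": "1.7"})
    model = ET.SubElement(sdf, "model", {"name": model_name})
    link = ET.SubElement(model, "link", {"name": "body"})

    # Placeholder mass only; a real model should also specify the inertia.
    inertial = ET.SubElement(link, "inertial")
    ET.SubElement(inertial, "mass").text = str(mass)

    # Visual geometry keeps the detailed original mesh.
    visual = ET.SubElement(link, "visual", {"name": "visual"})
    vmesh = ET.SubElement(ET.SubElement(visual, "geometry"), "mesh")
    ET.SubElement(vmesh, "uri").text = visual_obj

    # Collision geometry uses the hull, annotated as convex inside <mesh>.
    collision = ET.SubElement(link, "collision", {"name": "collision"})
    cmesh = ET.SubElement(ET.SubElement(collision, "geometry"), "mesh")
    ET.SubElement(cmesh, "uri").text = collision_obj
    ET.SubElement(cmesh, f"{{{DRAKE_NS}}}declare_convex")

    return ET.tostring(sdf, encoding="unicode")
```

A possible usage would be to call `write_convex_hull("shoe.obj", "shoe_hull.obj")` once per model and then write the string returned by `make_sdf("shoe", "shoe.obj", "shoe_hull.obj")` to a `.sdf` file. Keeping the original mesh as the visual geometry means the rendered RGB, depth, and segmentation images still show the detailed shoe, while contact is resolved against the simpler hull.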