I recently discovered SHAP, an amazing library for ML interpretability. I decided to build a simple gradient boosting classifier (scikit-learn's `GradientBoostingClassifier`) on a toy dataset from sklearn and to draw a `force_plot`.

To understand the plot, the library's documentation says:

> The above explanation shows features each contributing to push the model output from the base value (the average model output over the training dataset we passed) to the model output. Features pushing the prediction higher are shown in red, those pushing the prediction lower are in blue (these force plots are introduced in our Nature BME paper).

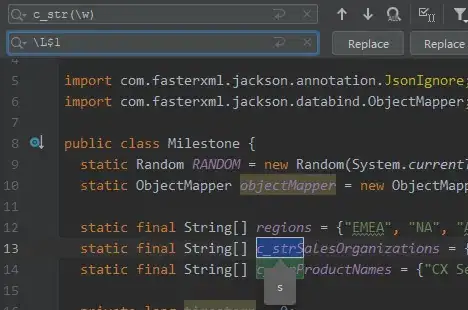

So it looks to me as if the base_value should be the same as `clf.predict(X_train).mean()`, which equals 0.637. However, this is not the case when looking at the plot: the number is not even within [0, 1]. I tried taking logarithms in different bases (10, e, 2), assuming it would be some kind of monotonic transformation, but still no luck. How can I get to this base_value?
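One candidate transformation worth ruling in or out, assuming the explainer works on the classifier's raw margin (log-odds) rather than on probabilities or labels, is the logit. The sketch below uses made-up margin values (not taken from the model in this question) to show two things: an average of log-odds can fall outside [0, 1], and it is generally not the logit of the average probability, so no logarithm of 0.637 would be expected to reproduce it.

```python
import math


def sigmoid(z):
    """Map a raw margin (log-odds) to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def logit(p):
    """Map a probability to log-odds; inverse of sigmoid."""
    return math.log(p / (1.0 - p))


# Hypothetical raw margins for four samples (illustrative values only).
raw_outputs = [-4.0, 3.0, 5.0, 6.0]
probs = [sigmoid(z) for z in raw_outputs]

mean_raw = sum(raw_outputs) / len(raw_outputs)
mean_prob = sum(probs) / len(probs)
logit_of_mean_prob = logit(mean_prob)

print("mean of raw margins:      ", mean_raw)
print("mean of probabilities:    ", mean_prob)
print("logit of mean probability:", logit_of_mean_prob)
```

Because the sigmoid is nonlinear, averaging before or after the transformation gives different numbers, which would explain why a simple log of the mean prediction does not land on the plotted value.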

```python
!pip install shap

from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.ensemble import GradientBoostingClassifier
import pandas as pd
import shap

X, y = load_breast_cancer(return_X_y=True)
X = pd.DataFrame(data=X)
y = pd.DataFrame(data=y)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

clf = GradientBoostingClassifier(random_state=0)
clf.fit(X_train, y_train)
print(clf.predict(X_train).mean())

# load JS visualization code to notebook
shap.initjs()

explainer = shap.TreeExplainer(clf)
shap_values = explainer.shap_values(X_train)

# visualize the first prediction's explanation (use matplotlib=True to avoid Javascript)
shap.force_plot(explainer.expected_value, shap_values[0,:], X_train.iloc[0,:])
```
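For comparison against the plotted base value, here is a minimal sketch using only scikit-learn that prints the three different "average model outputs" this classifier can produce on the training set. It assumes the same dataset, split, and seed as above; the variable names `labels`, `probs`, and `raw` are introduced here for illustration. Note that `clf.predict` returns hard 0/1 labels, so its mean is the fraction of samples predicted as class 1, not an average probability.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

clf = GradientBoostingClassifier(random_state=0).fit(X_train, y_train)

labels = clf.predict(X_train)                # hard class labels, 0 or 1
probs = clf.predict_proba(X_train)[:, 1]     # probability of class 1
raw = clf.decision_function(X_train)         # raw margin (log-odds), unbounded

print("mean predicted label:      ", labels.mean())
print("mean predicted probability:", probs.mean())
print("mean raw margin:           ", raw.mean())
```

If the base value in the plot is close to the mean raw margin, the plot is in log-odds units. Whether the two match exactly is not guaranteed: it may depend on the SHAP version and on how the explainer derives its baseline (for example, from the trees' own statistics versus a background dataset), so treat this as something to compare rather than as an identity.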