I am trying to export my DataFrame to a SQL database (PostgreSQL).

I created the table as follows:

```sql
CREATE TABLE dataops.OUTPUT
(
    ID_TAIL         CHAR(30)  NOT NULL,
    ID_MODEL        CHAR(30)  NOT NULL,
    ID_FIN          CHAR(30)  NOT NULL,
    ID_GROUP_FIN    CHAR(30)  NOT NULL,
    ID_COMPONENT    CHAR(30)  NOT NULL,
    DT_OPERATION    TIMESTAMP NOT NULL,
    DT_EXECUTION    TIMESTAMP NOT NULL,
    FT_VALUE_SENSOR FLOAT     NOT NULL,
    DT_LOAD         TIMESTAMP NOT NULL
);
```
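PostgreSQL folds unquoted identifiers to lower case and keeps quoted identifiers exactly as written, so the statement above is stored as `dataops.output`, while `"OUTPUT"` would name a different table. The following is a minimal pure-Python sketch of that rule for plain ASCII names; `pg_fold` is a hypothetical helper for illustration, not a PostgreSQL or SQLAlchemy API.

```python
def pg_fold(identifier: str) -> str:
    """Model how PostgreSQL resolves an identifier written in SQL text.

    Hypothetical helper: quoted identifiers keep their exact case (a doubled
    quote inside them stands for one quote character); unquoted identifiers
    are folded to lower case.
    """
    if len(identifier) >= 2 and identifier[0] == identifier[-1] == '"':
        return identifier[1:-1].replace('""', '"')
    return identifier.lower()


# The CREATE TABLE above uses an unquoted name, so the table is stored as "output".
print(pg_fold("OUTPUT"))    # output
# A quoted name keeps its case, so this refers to a separate table.
print(pg_fold('"OUTPUT"'))  # OUTPUT
```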

I want to write this DataFrame into that SQL table:

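```python
import pandas as pd
import sqlalchemy

# SQLAlchemy 1.4+ only accepts the "postgresql://" scheme ("postgres://" was removed)
conn = sqlalchemy.create_engine("postgresql://root:1234@localhost:5432/postgres")

data = [['ID_1', 'A4_DOOUE_ADM001', '1201MJ52', 'PATH_1', 'LATCHED1AFT',
         '2016-06-22 19:10:25', '2020-11-12 17:20:33.616016', 2.9, '2020-11-12 17:54:06.340735']]

# Column names are lower case, matching how PostgreSQL stored the unquoted column names
output_df = pd.DataFrame(data, columns=["id_tail", "id_model", "id_fin", "id_group_fin", "id_component",
                                        "dt_operation", "dt_execution", "ft_value_sensor", "dt_load"])
```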

However, when I write to the database with `output_df.to_sql`, I see that a new table, `"OUTPUT"` (with double quotes), has been created and the data has been inserted into it:

```python
# Table name and schema come from my own config object (cfg)
output_df.to_sql(cfg.table_names["output_rep27"], conn, schema=cfg.db_parameters["schema"],
                 if_exists='append', index=False)
```

This is what I see in my database:

But the same table without quotes is empty:

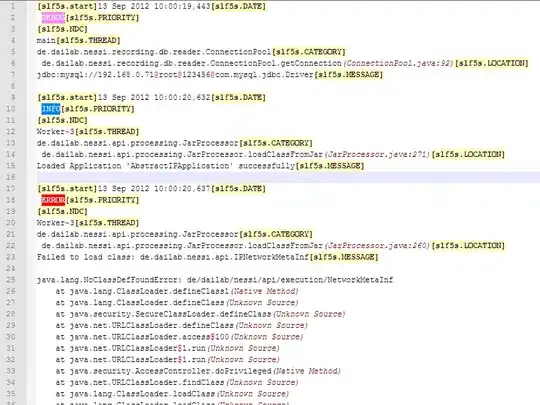

If I deliberately make the insert fail (for example, by changing a column name), the error message shows that pandas is quoting the table name with double quotes:
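SQLAlchemy, which pandas uses to build the SQL, documents that names containing no upper-case characters are treated as case-insensitive and sent unquoted (unless they are reserved words), while names with any upper-case character are quoted and sent exactly as given. The sketch below is a simplified, hypothetical model of that decision; `render_table_name`, `RESERVED` and `LEGAL` are illustrative names, and `RESERVED` is only a tiny sample, not SQLAlchemy's real reserved-word list.

```python
import re

# Tiny illustrative subset of reserved words (assumption, not the real list)
RESERVED = {"select", "table", "user", "order"}
# All-lower-case "plain" identifier; any upper-case letter fails this pattern
LEGAL = re.compile(r"[a-z_][a-z0-9_$]*")


def render_table_name(name: str) -> str:
    """Hypothetical model: quote the name unless it is a plain lower-case identifier."""
    needs_quotes = name.lower() in RESERVED or LEGAL.fullmatch(name) is None
    if needs_quotes:
        # Escape embedded quotes by doubling them, then wrap in double quotes
        return '"' + name.replace('"', '""') + '"'
    return name


print(render_table_name("OUTPUT"))  # "OUTPUT"
print(render_table_name("output"))  # output
```

Under this model, a table name containing upper-case letters is rendered as a quoted, case-sensitive identifier, which does not match the case-folded `output` that PostgreSQL created from the unquoted DDL.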

How can I stop pandas from wrapping the table name in double quotes when it inserts?