What is the fastest, easiest tool or method to convert text files between character sets?

Specifically, I need to convert from UTF-8 to ISO-8859-15 and vice versa.

Anything goes: one-liners in your favorite scripting language, command-line tools or other utilities for any OS, web sites, etc.

Best solutions so far:

On Linux/UNIX/OS X/cygwin:

GNU iconv, suggested by Troels Arvin, is best used as a filter. It seems to be universally available. Example:

$ iconv -f UTF-8 -t ISO-8859-15 in.txt > out.txt

As pointed out by Ben, there is an online converter using iconv.
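For those who prefer a scripting language, the same conversion as the iconv command above can be sketched in Python using only the built-in codecs. This is a minimal sketch, not a replacement for iconv: the function names `recode_bytes` and `convert_file` are made up for illustration, and the whole file is read into memory, so it is best suited to small files.

```python
def recode_bytes(data, from_enc="utf-8", to_enc="iso-8859-15"):
    """Decode bytes from one character set and re-encode them in another.

    Raises UnicodeDecodeError or UnicodeEncodeError if the input is not valid
    in from_enc or contains characters that to_enc cannot represent.
    """
    return data.decode(from_enc).encode(to_enc)


def convert_file(src, dst, from_enc="utf-8", to_enc="iso-8859-15"):
    """Hypothetical helper: convert the file at src and write the result to dst."""
    # Work in binary mode so line endings are passed through untouched.
    with open(src, "rb") as fin:
        data = fin.read()
    with open(dst, "wb") as fout:
        fout.write(recode_bytes(data, from_enc, to_enc))
```

Calling `convert_file("in.txt", "out.txt")` converts from UTF-8 to ISO-8859-15; swapping the two encoding arguments converts in the other direction.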

recode (manual) suggested by Cheekysoft will convert one or several files in-place. Example:

$ recode UTF8..ISO-8859-15 in.txt

This one uses shorter aliases:

$ recode utf8..l9 in.txt

Recode also supports surfaces, which can be used to convert between different line-ending types and encodings:

Convert newlines from LF (Unix) to CR-LF (DOS):

$ recode ../CR-LF in.txt

Base64-encode a file:

$ recode ../Base64 in.txt

You can also combine them.

Convert a Base64-encoded UTF-8 file with Unix line endings to a Base64-encoded Latin-1 file with DOS line endings:

$ recode utf8/Base64..l1/CR-LF/Base64 file.txt
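To make the chain of surfaces in the last command more concrete, here is a rough Python sketch of the steps as I read them: strip the Base64 surface, convert the character set, switch the line endings, and apply Base64 again. The function name is hypothetical, and the output will not be byte-identical to recode's, since `base64.b64encode` does not wrap its output into lines.

```python
import base64


def b64_utf8_lf_to_b64_latin1_crlf(data):
    """Illustrative equivalent of `recode utf8/Base64..l1/CR-LF/Base64`."""
    # 1. Remove the Base64 surface and decode the UTF-8 text underneath.
    text = base64.b64decode(data).decode("utf-8")
    # 2. Convert LF line endings to CR-LF (normalising first, so that any
    #    existing CR-LF pairs are not doubled).
    text = text.replace("\r\n", "\n").replace("\n", "\r\n")
    # 3. Encode as Latin-1 and put the Base64 surface back on.
    return base64.b64encode(text.encode("latin-1"))
```

Characters that Latin-1 cannot represent raise a UnicodeEncodeError in step 3.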

On Windows with PowerShell (Jay Bazuzi):

PS C:\> gc -en utf8 in.txt | Out-File -en ascii out.txt

(No ISO-8859-15 support though; it says that supported charsets are unicode, utf7, utf8, utf32, ascii, bigendianunicode, default, and oem.)

Edit

Do you mean ISO-8859-1 support? Using "String" as the encoding does this, e.g. for the reverse direction:

gc -en string in.txt | Out-File -en utf8 out.txt

Note: The possible enumeration values are "Unknown, String, Unicode, Byte, BigEndianUnicode, UTF8, UTF7, Ascii".

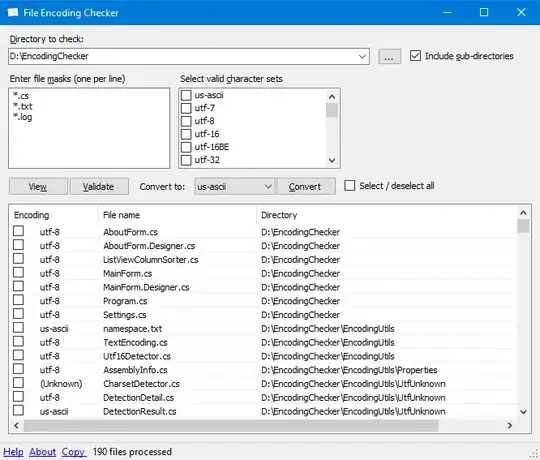

- CsCvt (Kalytta's Character Set Converter) is another great command-line conversion tool for Windows.