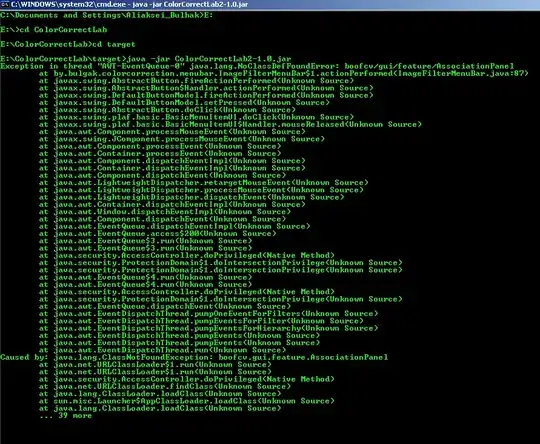

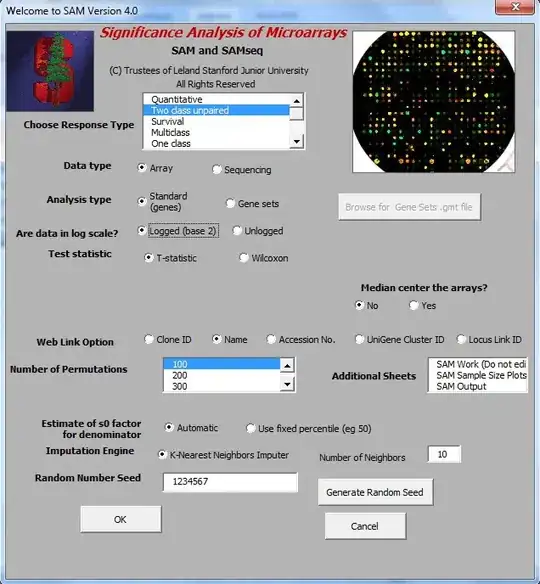

Here's a possible solution for your problem. I'm using mock screenshots since, as I suggested, lossless images give a better result. The main idea is to extract the color of the text box, fill the rest of the image with that color, and then threshold the image. This reduces the intensity variation and produces a cleaner thresholded image, since the image histogram will contain fewer intensity values. These are the steps:

- Crop the image to a ROI (Region Of Interest)

- Get the colors in that ROI via K-Means

- Get the color of the text box

- Flood-fill the ROI with the color of the text box

- Apply Otsu's thresholding to get a binary image

- Get OCR of the image

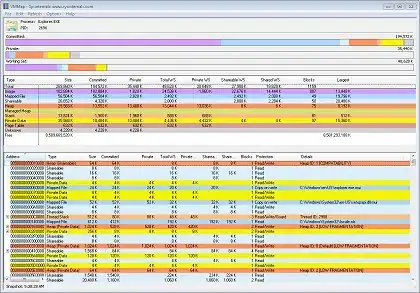

Suppose these are our test images: one uses a "light" theme while the other uses a "dark" theme:

I'll be using pyocr as the OCR engine. Let's use the first image; the code would be this:

# imports:

from PIL import Image

import numpy as np

import cv2

import pyocr

import pyocr.builders

tools = pyocr.get_available_tools()

# The tools are returned in the recommended order of usage

tool = tools[0]

langs = tool.get_available_languages()

lang = langs[0]

# image path

path = "D://opencvImages//"

fileName = "mockText.png"

# Reading an image in default mode:

inputImage = cv2.imread(path + fileName)

# Convert BGR (OpenCV's default channel order) to grayscale:

grayscaleImage = cv2.cvtColor(inputImage, cv2.COLOR_BGR2GRAY)

# Set the ROI location:

roiX = 0

roiY = 235

roiWidth = 750

roiHeight = 1080

# Crop the ROI (roiWidth and roiHeight act as end coordinates here, not sizes):

smsROI = grayscaleImage[roiY:roiHeight, roiX:roiWidth]
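The slice above uses the ROI values as start and end coordinates. As a minimal sketch, assuming you would rather describe the ROI by its size, a small helper can make that explicit. `crop_roi` is a hypothetical name and the screenshot dimensions below are made up for illustration:

```python
import numpy as np

def crop_roi(image, x, y, width, height):
    """Return the sub-image starting at (x, y) with the given size.

    The end coordinates are clamped to the image bounds, so an oversized
    ROI simply stops at the border.
    """
    rows, cols = image.shape[:2]
    x_end = min(x + width, cols)
    y_end = min(y + height, rows)
    # NumPy indexes rows (y) first, then columns (x).
    return image[y:y_end, x:x_end]

# Hypothetical 1334x750 grayscale screenshot filled with zeros.
screenshot = np.zeros((1334, 750), dtype=np.uint8)
roi = crop_roi(screenshot, x=0, y=235, width=750, height=845)
print(roi.shape)  # (845, 750)
```

With these numbers the helper selects rows 235 to 1080 and columns 0 to 750, the same region as the slice above.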

The first bit crops the ROI, that is, everything that is of interest, leaving out the "header" and the "footer" of the image, which contain info that we really don't need. This is the current ROI:

Wouldn't it be nice to (approximately) get all the colors used in the image? Fortunately, that's what Color Quantization gives us: a reduced palette of the average colors present in an image, given the number of colors we are looking for. Let's apply K-Means and use 3 clusters to group these colors.

In our test images, most of the pixels are background, so the largest cluster of pixels will belong to the background. The text represents the smallest cluster of pixels. That leaves the remaining cluster as our target: the color of the text box. Let's apply K-Means, then. We need to format the data first, though, because K-Means needs a float array with one sample per row:

# Reshape the data to (width * height, number of channels):

kmeansData = smsROI.reshape((-1,1))

# convert the data to np.float32

kmeansData = np.float32(kmeansData)

# define criteria, number of clusters(K) and apply kmeans():

criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 5, 1.0)

# Define number of clusters (3 colors):

K = 3

# Run K-means:

_, _, center = cv2.kmeans(kmeansData, K, None, criteria, 10, cv2.KMEANS_RANDOM_CENTERS)

# Convert the centers to uint8:

center = np.uint8(center)

# Sort centers from smallest to largest:

center = sorted(center, reverse=False)

# Get the text box color (middle center) and the minimum color:

textBoxColor = int(center[1][0])

minColor = min(center)[0]

print("Minimum Color is: "+str(minColor))

print("Text Box Color is: "+str(textBoxColor))

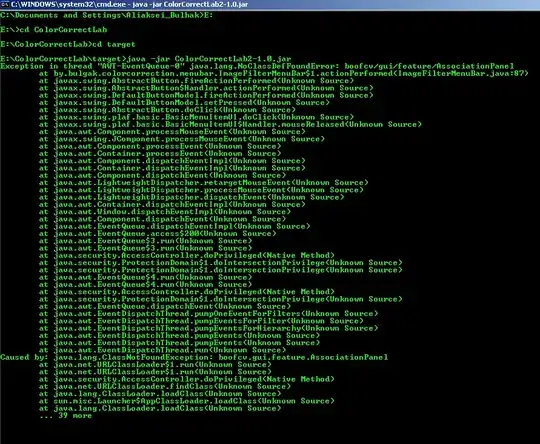

The info of interest is in center. That's where our colors are. After sorting this list and getting the minimum color value (that I'll use later to distinguish between a light and a dark theme) we can print the values. For the first test image, these values are:

Minimum Color is: 23

Text Box Color is: 225
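The code above takes the middle center by intensity, while the reasoning was about cluster sizes. As a hedged alternative, here is a sketch that picks the text box color by pixel count instead, using the labels that `cv2.kmeans` returns as its second value (discarded with `_` above). `pick_text_box_color` is a hypothetical helper, and the labels and centers below are synthetic stand-ins, not real output:

```python
import numpy as np

def pick_text_box_color(labels, centers):
    """Return the center whose cluster has the median pixel count.

    Assumes exactly three clusters: background (largest), text box
    (middle) and text (smallest), as described in the answer.
    """
    counts = np.bincount(labels.ravel(), minlength=len(centers))
    order = np.argsort(counts)          # smallest -> largest cluster
    middle = order[len(order) // 2]     # index of the middle-sized cluster
    return int(centers[middle][0])

# Synthetic stand-in for the cv2.kmeans output (values are made up):
centers = np.array([[23.0], [225.0], [250.0]], dtype=np.float32)
labels = np.array([0] * 50 + [1] * 300 + [2] * 650, dtype=np.int32).reshape(-1, 1)
print(pick_text_box_color(labels, centers))  # 225
```

This only helps if the three-cluster assumption holds; screenshots with large text boxes could make the text box the largest cluster.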

Alright, so far so good. We have the color of the text box. Let's use that and flood-fill the entire ROI at position (x=0, y=0):

# Apply flood-fill at seed point (0,0):

cv2.floodFill(smsROI, mask=None, seedPoint=(0, 0), newVal=textBoxColor)
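To make explicit what this call does, here is a rough pure-NumPy sketch of a flood fill. It assumes 4-connectivity and an exact value match, which is how I understand `cv2.floodFill` to behave with its default arguments. `flood_fill` and the toy image are illustrative only:

```python
from collections import deque
import numpy as np

def flood_fill(image, seed, new_value):
    """Fill the 4-connected region of equal-valued pixels containing seed.

    seed is (x, y), matching OpenCV's seedPoint convention. The image is
    modified in place and also returned.
    """
    x0, y0 = seed
    old_value = image[y0, x0]
    if old_value == new_value:
        return image
    rows, cols = image.shape
    queue = deque([(y0, x0)])
    image[y0, x0] = new_value
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if 0 <= ny < rows and 0 <= nx < cols and image[ny, nx] == old_value:
                image[ny, nx] = new_value
                queue.append((ny, nx))
    return image

# Toy "screenshot": background 250, a text box (225) containing text (23).
toy = np.full((6, 8), 250, dtype=np.uint8)
toy[2:5, 2:7] = 225
toy[3, 3:6] = 23
flood_fill(toy, seed=(0, 0), new_value=225)
print(np.unique(toy).tolist())  # [23, 225]
```

Only the background connected to the seed is replaced, so the image ends up with two intensity values: text box and text.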

The result is this:

Very nice. Let's apply Otsu's thresholding on this bad boy:

# Threshold via Otsu:

_, binaryImage = cv2.threshold(smsROI, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
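For reference, this is roughly what Otsu's method computes: the threshold that maximizes the between-class variance of the histogram. The NumPy sketch below is an illustration of the idea, not OpenCV's implementation, and `otsu_threshold` is a hypothetical name:

```python
import numpy as np

def otsu_threshold(gray):
    """Return the threshold t that maximizes the between-class variance.

    Pixels <= t form one class and pixels > t the other.
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    levels = np.arange(256)

    weight0 = np.cumsum(prob)                 # P(class 0) for each t
    weight1 = 1.0 - weight0                   # P(class 1) for each t
    cum_mean = np.cumsum(prob * levels)       # partial mean up to t
    total_mean = cum_mean[-1]

    # Between-class variance; empty classes get a score of 0.
    denom = weight0 * weight1
    numer = (total_mean * weight0 - cum_mean) ** 2
    variance = np.divide(numer, denom, out=np.zeros(256), where=denom > 0)
    return int(np.argmax(variance))

# Two-valued image, like the ROI after the flood fill (values are made up).
gray = np.full((10, 10), 225, dtype=np.uint8)
gray[4:6, 2:8] = 23
t = otsu_threshold(gray)
binary = np.where(gray > t, 255, 0).astype(np.uint8)
```

With only two intensity values in the histogram, any threshold between them separates the text cleanly, which is why the flood fill makes the thresholding step so well-behaved.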

Now, here comes the minColor part. If you threshold a dark theme screenshot, you get white text on a black background. If you threshold a light theme screenshot, you get black text on a white background. We want to always produce the same output no matter the input: white text on a black background. So let's check the min color: if it equals 0 (black), you just received a dark theme screenshot and you don't need to invert the image. Otherwise, invert the image:

# Process "Dark Theme / Light Theme":

if minColor != 0:
    # Invert the image if it is not already inverted:
    binaryImage = 255 - binaryImage

cv2.imshow("binaryImage", binaryImage)

cv2.waitKey(0)
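The `minColor != 0` test relies on the dark theme having a pure black cluster. As a hedged alternative (my assumption, not part of the approach above), you could decide from the binary image itself, treating the majority value as background. `ensure_white_text` is a hypothetical helper:

```python
import numpy as np

def ensure_white_text(binary):
    """Return the image with white text on a black background.

    Assumes text covers less than half of the image, so the majority
    value is treated as background.
    """
    white_fraction = np.count_nonzero(binary) / binary.size
    if white_fraction > 0.5:
        # Mostly white means black-on-white: invert it.
        return 255 - binary
    return binary

# Light-theme style result: black text on a white background.
light = np.full((10, 10), 255, dtype=np.uint8)
light[4:6, 2:8] = 0
fixed = ensure_white_text(light)
print(np.count_nonzero(fixed))  # 12
```

An image that is already white-on-black passes through unchanged.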

For our first test image, the result is:

Notice the small bits of noise. Let's apply an area filter (function defined at the end of the post) to get rid of blobs below a certain area threshold:

# Run a minimum area filter:

minArea = 10

binaryImage = areaFilter(minArea, binaryImage)

This is the filtered image:

Very nice. Lastly, I write this image to disk and use pyocr to get the text as a string:

cv2.imwrite(path + "ocrText.png", binaryImage)

txt = tool.image_to_string(
    Image.open(path + "ocrText.png"),
    lang=lang,
    builder=pyocr.builders.TextBuilder()
)

print("Image text is: "+txt)

Which results in:

Image text is: 301248 is your Amazon

verification code
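If you'd rather skip the round trip through a file, a sketch like the following should work, assuming the binary image is a single-channel uint8 array. `to_pil_image` and `ocr_array` are hypothetical helpers; the `tool`, `lang` and builder objects are the ones created at the top of the script:

```python
import numpy as np
from PIL import Image

def to_pil_image(binary):
    """Wrap a single-channel uint8 array as a PIL image (mode "L")."""
    return Image.fromarray(np.ascontiguousarray(binary, dtype=np.uint8))

def ocr_array(tool, lang, builder, binary):
    """Run a pyocr tool on an in-memory array instead of a saved file.

    tool, lang and builder are the objects created earlier in the answer.
    """
    return tool.image_to_string(to_pil_image(binary), lang=lang, builder=builder)

# Conversion check on a dummy array (no OCR engine needed for this part):
dummy = np.zeros((40, 120), dtype=np.uint8)
pil_image = to_pil_image(dummy)
print(pil_image.size, pil_image.mode)  # (120, 40) L
```

Note that PIL reports the size as (width, height), the reverse of NumPy's (rows, columns) shape.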

If you test the second image, you get the exact same result. This is the definition and implementation of the areaFilter function:

def areaFilter(minArea, inputImage):

    # Perform an area filter on the binary blobs:
    componentsNumber, labeledImage, componentStats, componentCentroids = \
        cv2.connectedComponentsWithStats(inputImage, connectivity=4)

    # Get the indices/labels of the remaining components based on the area stat
    # (skip the background component at index 0)
    remainingComponentLabels = [i for i in range(1, componentsNumber) if componentStats[i][cv2.CC_STAT_AREA] >= minArea]

    # Filter the labeled pixels based on the remaining labels,
    # assign pixel intensity to 255 (uint8) for the remaining pixels
    filteredImage = np.where(np.isin(labeledImage, remainingComponentLabels), 255, 0).astype('uint8')

    return filteredImage