I am trying to stitch images by following the blog post Image Panorama Stitching with OpenCV:

    # Apply panorama correction
    width = trainImg.shape[1] + queryImg.shape[1]
    height = trainImg.shape[0] + queryImg.shape[0]

    result = cv2.warpPerspective(trainImg, H, (width, height))
    result[0:queryImg.shape[0], 0:queryImg.shape[1]] = queryImg

Here's Thalles Silva's complete code for Image Panorama Stitching with OpenCV.

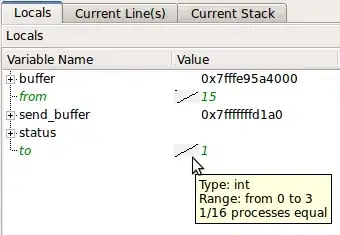

I would like to know how much of the images' area actually overlaps, as in this post: OpenCV determine area of intersect/overlap.

Where and how should I alter Thalles Silva's complete code to get the area of intersection? I'm confused about where to start.
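To make the question concrete, here is a minimal sketch of the kind of calculation I have in mind. It is not taken from the blog. It assumes `H` maps `trainImg` coordinates into the frame of `queryImg` (which is how the snippet above uses it), and that the projective divisor stays positive for all four corners. The helper names (`warp_corners`, `clip_half_plane`, `polygon_area`, `overlap_area`) are my own, hypothetical ones. The idea is to project the corners of `trainImg` through `H`, clip that quadrilateral against the `queryImg` rectangle, and measure what is left:

```python
def warp_corners(H, width, height):
    """Project the four corners of a width x height image through a 3x3 homography H."""
    corners = [(0.0, 0.0), (width, 0.0), (width, height), (0.0, height)]
    warped = []
    for x, y in corners:
        # Assumption: w stays positive, i.e. no corner is mapped across the horizon.
        w = H[2][0] * x + H[2][1] * y + H[2][2]
        warped.append(((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
                       (H[1][0] * x + H[1][1] * y + H[1][2]) / w))
    return warped


def clip_half_plane(polygon, axis, bound, keep_greater):
    """One Sutherland-Hodgman step against an axis-aligned line (axis 0 = x, 1 = y)."""
    def inside(p):
        return p[axis] >= bound if keep_greater else p[axis] <= bound

    clipped = []
    for i, current in enumerate(polygon):
        previous = polygon[i - 1]
        if inside(current) != inside(previous):
            # The edge crosses the clipping line: add the crossing point.
            t = (bound - previous[axis]) / (current[axis] - previous[axis])
            clipped.append((previous[0] + t * (current[0] - previous[0]),
                            previous[1] + t * (current[1] - previous[1])))
        if inside(current):
            clipped.append(current)
    return clipped


def polygon_area(polygon):
    """Area of a simple polygon via the shoelace formula (0.0 for an empty polygon)."""
    area = 0.0
    for i, (x1, y1) in enumerate(polygon):
        x0, y0 = polygon[i - 1]
        area += x0 * y1 - x1 * y0
    return abs(area) / 2.0


def overlap_area(H, train_shape, query_shape):
    """Overlap, in pixels^2, between the warped train image and the query image."""
    train_h, train_w = train_shape[:2]
    query_h, query_w = query_shape[:2]
    polygon = warp_corners(H, train_w, train_h)
    # Clip the warped quadrilateral against the query rectangle [0, w] x [0, h].
    polygon = clip_half_plane(polygon, 0, 0.0, True)
    polygon = clip_half_plane(polygon, 0, float(query_w), False)
    polygon = clip_half_plane(polygon, 1, 0.0, True)
    polygon = clip_half_plane(polygon, 1, float(query_h), False)
    return polygon_area(polygon)
```

Presumably this would be called as `overlap_area(H, trainImg.shape, queryImg.shape)` once `H` has been estimated, before the `cv2.warpPerspective` call, and dividing the result by `queryImg.shape[0] * queryImg.shape[1]` would give the overlapping fraction of the query image. This only measures the geometric overlap of the image frames, not how well the pixel content matches.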