I am trying to align an RGB image with an IR image (single channel).

The goal is to create a 4-channel image (R, G, B, IR).

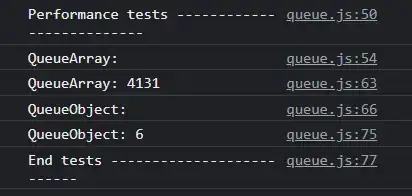
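Once the IR band has been warped onto the RGB grid, building the 4-channel image is a stack along the last axis. Below is a minimal NumPy sketch. It assumes both arrays already have the same height, width and dtype, and that the colour array is in R, G, B order (`cv2.imread` returns B, G, R). `stack_rgbi` is a hypothetical helper name:

```python
import numpy as np


def stack_rgbi(rgb: np.ndarray, ir: np.ndarray) -> np.ndarray:
    """Stack an aligned (H, W, 3) RGB image and an (H, W) IR band into (H, W, 4)."""
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("rgb must have shape (H, W, 3)")
    if ir.shape != rgb.shape[:2]:
        raise ValueError("ir must match the height and width of rgb")
    # np.dstack turns the 2-D IR band into (H, W, 1) and appends it as channel 4
    return np.dstack([rgb, ir])


rgb = np.zeros((4, 5, 3), dtype=np.uint8)
ir = np.full((4, 5), 7, dtype=np.uint8)
rgbi = stack_rgbi(rgb, ir)  # shape (4, 5, 4), channels R, G, B, IR
```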

To do this, I am using `cv2.findTransformECC` as described in this very neat guide. The code is unchanged for now, except for line 13, where the motion model is set to Euclidean (`cv2.MOTION_EUCLIDEAN`) because I want to handle rotations in the future. I am using Python.

To verify that the code works, I first ran it on the images from the guide. That worked well, so I moved on to aligning satellite images from different spectral bands, as described above. Unfortunately, I ran into problems there.

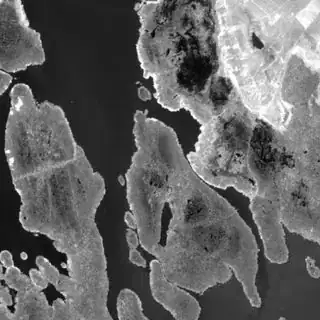

Sometimes the algorithm converges (after a very long time), sometimes it fails immediately because it can't converge, and other times it "finds" a solution that is clearly wrong. Attached are two images that are easy to match by eye, yet the algorithm fails on them. The images are not rotated in any way; they just don't cover exactly the same area (check the borders), so a purely translational motion is expected. The images show Lake Neusiedlersee in Austria; the source is Sentinelhub.

Edit: By "sometimes" I mean different image pairs from Sentinel. Any given pair consistently produces the same outcome.

I know that ECC is not feature-based, which might pose a problem here.

I have also read that it is somewhat dependent on the initial warp matrix.

My questions are:

- Am I using `cv2.findTransformECC` wrong?

- Is there a better way to do this?

- Should I try to "Monte-Carlo" the initial matrices until it converges? (This feels wrong)

- Do you suggest using a feature-based algorithm?

- If so, is there one available or would I have to implement this myself?

Thanks for the help!