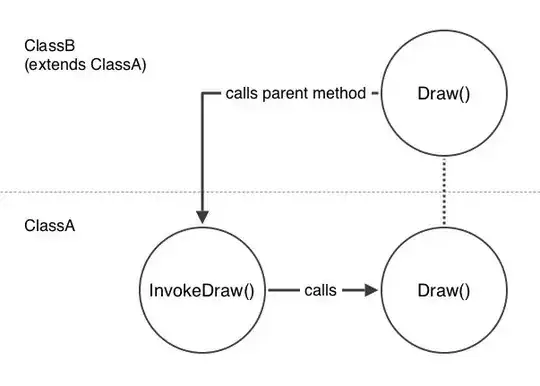

In my previous question I was trying to match multispectral satellite images to create a 4-channel image (R, G, B, IR). It seemed that ECC was not the right way to go. A comment suggested feature-based matching, which I was already trying while waiting for replies to the previous question.

The results are... absolutely stunning to me. I hope you guys can help me find my mistake since this, IMHO, has to be a configuration problem on my side. SIFT can't be performing that badly.

Setup

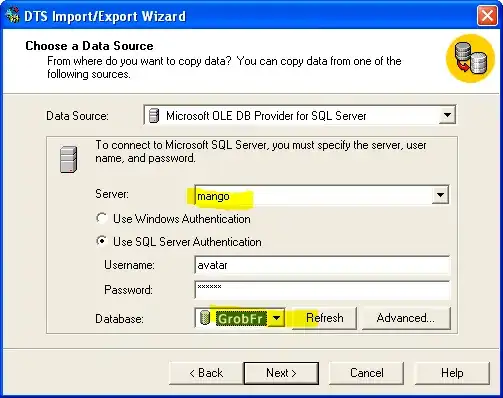

I used the Python code from this guide. The only lines I changed are lines 15 and 20:

orb = cv2.SIFT_create(MAX_FEATURES) #line 15

matcher = cv2.BFMatcher(cv2.NORM_L2,crossCheck=False) #line 20

I intentionally did not change the name orb in order to keep the rest of the code as close to the original as possible.

So we are ready to overlay the images. Let's go!

All the images I used are satellite images from SentinelHub.

The images are expected to be mostly rotated and translated relative to each other; no change in perspective needs to be considered here (so a Euclidean motion is expected).
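To make the expected motion model concrete, here is a minimal NumPy sketch of a Euclidean (rotation plus translation) transform and a least-squares fit of one from matched points. This is not part of the guide's code; the function names are hypothetical, and the fit uses the standard Kabsch procedure. Such a transform has only 3 degrees of freedom, compared with 8 for a full homography.

```python
import numpy as np


def rigid_matrix(angle_deg, tx, ty):
    """2x3 matrix for a rotation by angle_deg followed by a translation."""
    a = np.deg2rad(angle_deg)
    return np.array([[np.cos(a), -np.sin(a), tx],
                     [np.sin(a),  np.cos(a), ty]])


def apply_rigid(matrix, points):
    """Apply a 2x3 transform to an (N, 2) array of points."""
    return points @ matrix[:, :2].T + matrix[:, 2]


def fit_rigid(src, dst):
    """Least-squares rotation + translation mapping src onto dst (Kabsch)."""
    src_mean, dst_mean = src.mean(axis=0), dst.mean(axis=0)
    cov = (src - src_mean).T @ (dst - dst_mean)
    u, _, vt = np.linalg.svd(cov)
    # Guard against a reflection, which is not a valid Euclidean motion here.
    d = 1.0 if np.linalg.det(vt.T @ u.T) >= 0 else -1.0
    rot = vt.T @ np.diag([1.0, d]) @ u.T
    trans = dst_mean - rot @ src_mean
    return np.hstack([rot, trans[:, None]])
```

With correct matches, `fit_rigid` applied to the matched keypoint coordinates should recover a small rotation angle and a translation. It has no outlier rejection, so it only makes sense once the bad matches have been filtered out.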

Usage and Results

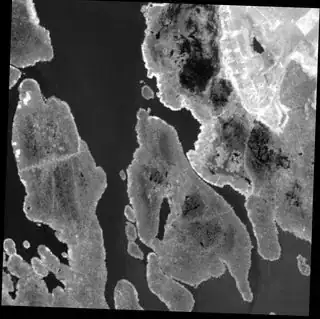

Initially, I used these images (all images in this post are rather small because I use quite a few). The IR image is in fact slightly rotated, so the result looks good!

So far so good, right? Well... no. Look at the matches. It really seems like only the luck of a million years aligned these two images. These are the best 15% of matches!

What do you do at this point? Rerun with different images.

So I did, with the following set of images, and saw what I expected (in short, just the matches and the alignment).

This is what I had expected to happen with the first set of images. Keep in mind these are again the best 15% of matches (I tried different percentages; it didn't change anything).
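To spell out what "the best 15%" means with `crossCheck=False`, here is a minimal NumPy sketch of brute-force L2 matching followed by the percentage cut. It is an illustration under my own assumptions (the function name is hypothetical, and random vectors stand in for SIFT descriptors), not the guide's code. Every query descriptor gets a nearest neighbour, so the cut returns a fixed share of matches even when the two descriptor sets have nothing in common.

```python
import numpy as np


def best_fraction_matches(desc1, desc2, fraction=0.15):
    """Brute-force L2 matching without cross-check, keeping the closest fraction.

    Returns (query_index, train_index, distance) tuples sorted by distance.
    """
    d1 = desc1.astype(np.float64)
    d2 = desc2.astype(np.float64)
    # Pairwise Euclidean distances, shape (len(desc1), len(desc2)).
    dist = np.sqrt(((d1[:, None, :] - d2[None, :, :]) ** 2).sum(axis=2))
    nearest = dist.argmin(axis=1)
    nearest_dist = dist[np.arange(len(d1)), nearest]
    order = np.argsort(nearest_dist)
    keep = int(len(order) * fraction)
    return [(int(q), int(nearest[q]), float(nearest_dist[q])) for q in order[:keep]]


# Two unrelated sets of 128-dimensional vectors still yield "best" matches.
rng = np.random.default_rng(0)
unrelated_a = rng.random((200, 128))
unrelated_b = rng.random((200, 128))
matches = best_fraction_matches(unrelated_a, unrelated_b)
print(len(matches))
```

This would be consistent with changing the percentage not helping: the cut ranks matches against each other but says nothing about whether any of them are actually correct.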

To check my sanity, I then also tried to match the red channel against the rest of the RGB image. That worked and looked as expected. I even rotated the image slightly to make it a little more challenging, and it still worked.

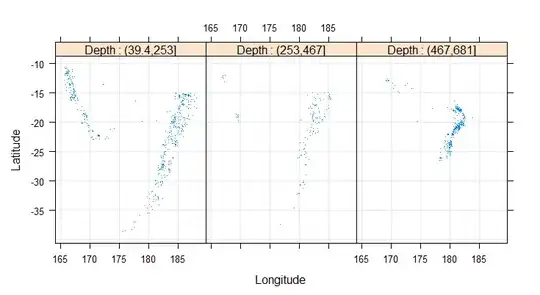

Aftermath

Sooo... I did this with more images than you can see here. I always used images of lakes and lake shores because I think a shoreline should provide a great baseline for a feature-based matching algorithm. Somehow that does not seem to help at all. I really don't know what to do or say at this point, because the images are full of features that are easy for a human to correlate. However, it seems that SIFT has an insanely hard time matching RGB to IR images, and I don't really understand why.

Questions

- Any ideas about what's wrong here?

- Is SIFT not suited for this use case?

- Is something configured the wrong way?

Edit: I have been asked to add some images that could not be matched, so here they come :) The previous ones were not uploaded because they exceeded the 2 MB limit. You can obtain your own here. Short tutorial:

- Take a TrueColor screenshot (yes, a screenshot for now)

- Click on "Custom"

- Choose all channels as "8" (it's drag and drop)

- Take another screenshot of roughly the same area
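Once the two screenshots are aligned, the 4-channel image I am after could be assembled roughly as in the sketch below. The function name is hypothetical, and it assumes both arrays already have the same height and width. Since band 8 is assigned to all three channels, the IR screenshot should be a gray image stored as three near-identical channels, so a single channel is taken.

```python
import numpy as np


def stack_rgb_ir(rgb, ir_screenshot):
    """Combine an RGB image and an aligned IR screenshot into (H, W, 4)."""
    if rgb.shape[:2] != ir_screenshot.shape[:2]:
        raise ValueError("images must have the same height and width")
    # Take one channel of the IR screenshot; pass 2-D input through unchanged.
    ir = ir_screenshot[..., 0] if ir_screenshot.ndim == 3 else ir_screenshot
    # Drop a possible alpha channel from the colour image, then append IR.
    return np.dstack([rgb[..., :3], ir])
```

Note that images loaded with `cv2.imread` come in B, G, R order, so the result would be (B, G, R, IR) unless the colour channels are reordered first.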