Although I know that SARSA is on-policy while Q-learning is off-policy, when looking at their formulas it's hard (to me) to see any difference between these two algorithms.

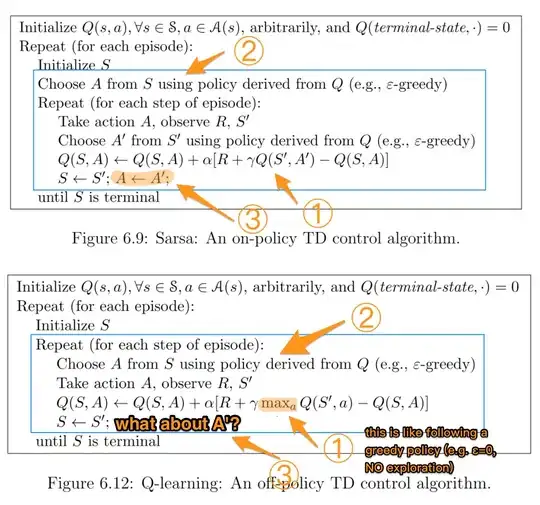

According to the book Reinforcement Learning: An Introduction (by Sutton and Barto). In the SARSA algorithm, given a policy, the corresponding action-value function Q (in the state s and action a, at timestep t), i.e. Q(st, at), can be updated as follows

Q(st, at) = Q(st, at) + α*(rt + γ*Q(st+1, at+1) - Q(st, at))

On the other hand, the update step for the Q-learning algorithm is the following

Q(st, at) = Q(st, at) + α*(rt + γ*maxa Q(st+1, a) - Q(st, at))

which can also be written as

Q(st, at) = (1 - α) * Q(st, at) + α * (rt + γ*maxa Q(st+1, a))

where γ (gamma) is the discount factor and rt is the reward received from the environment at timestep t.

Is the difference between these two algorithms the fact that SARSA only looks up the next policy value while Q-learning looks up the next maximum policy value?

TLDR (and my own answer)

Thanks to all those answering this question since I first asked it. I've made a github repo playing with Q-Learning and empirically understood what the difference is. It all amounts to how you select your next best action, which from an algorithmic standpoint can be a mean, max or best action depending on how you chose to implement it.

The other main difference is when this selection is happening (e.g., online vs offline) and how/why that affects learning. If you are reading this in 2019 and are more of a hands-on person, playing with a RL toy problem is probably the best way to understand the differences.

One last important note is that both Suton & Barto as well as Wikipedia often have mixed, confusing or wrong formulaic representations with regards to the next state best/max action and reward:

r(t+1)

is in fact

r(t)