I am using Python for market basket analysis. When I execute this code, it only shows the column names without any results.

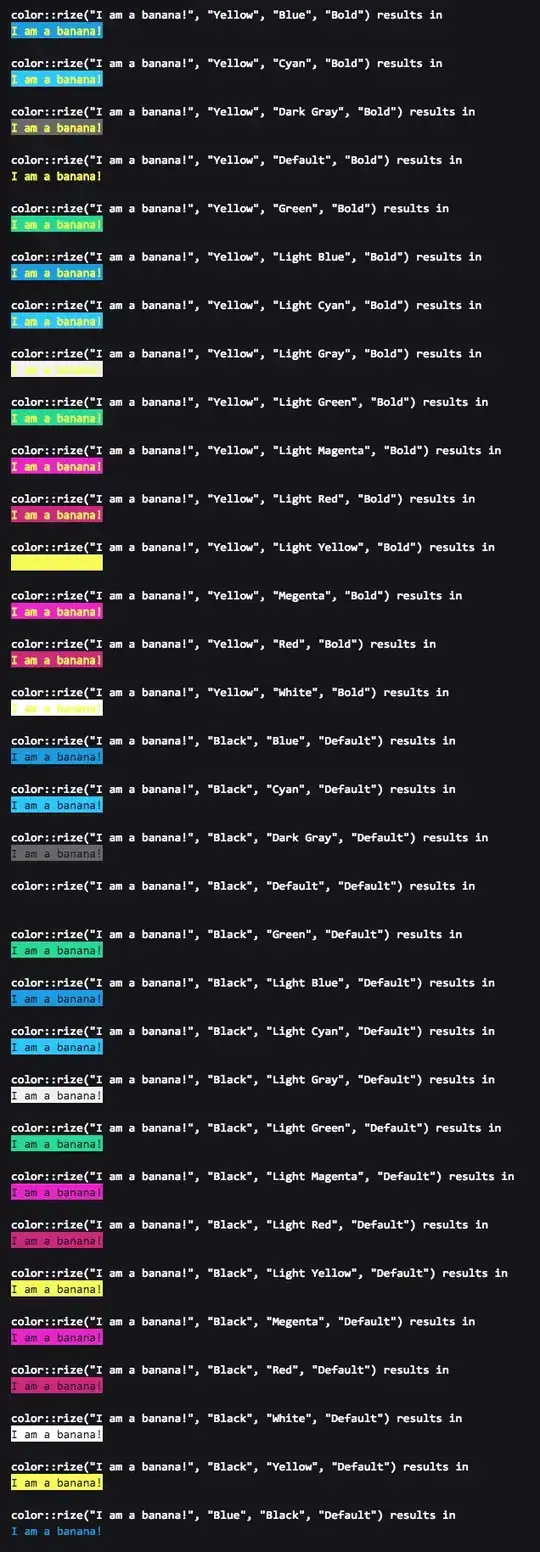

```python
frequent_tr = apriori(data_tr, min_support=0.05)
```

Here is the dataset: (link removed)

I have adjusted the `min_support` value, but it still shows the same result.
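Since the support of an item is the fraction of transactions that contain it, one quick way to check whether `min_support` is really the limiting factor is to compute each single item's support with pandas before calling `apriori`. This is a sketch assuming a wide table shaped like `data_tr` (numeric quantities per transaction and item); the toy values and item names are hypothetical.

```python
import pandas as pd

# Hypothetical wide table like data_tr: quantities per transaction and item.
data_tr = pd.DataFrame(
    {"wash": [2, 0, 1, 0], "dry": [0, 0, 3, 0], "iron": [0, 1, 0, 0]},
    index=pd.Index([101, 102, 103, 104], name="transaction_id"),
)

# Support of a single item = share of transactions where it appears at least once.
item_support = (data_tr > 0).mean().sort_values(ascending=False)
print(item_support)

# Items that could appear in a frequent itemset at this threshold.
min_support = 0.05
print(item_support[item_support >= min_support].index.tolist())
```

If no single item reaches the threshold, no larger itemset can either, so `apriori` has nothing to return.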

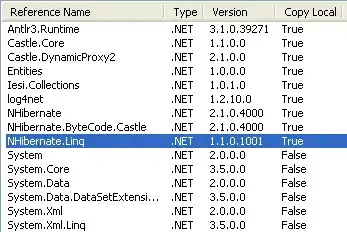

The libraries I am using are:

```python
import numpy as np
import pandas as pd
from mlxtend.frequent_patterns import apriori
from mlxtend.frequent_patterns import association_rules
from mlxtend.preprocessing import TransactionEncoder
```

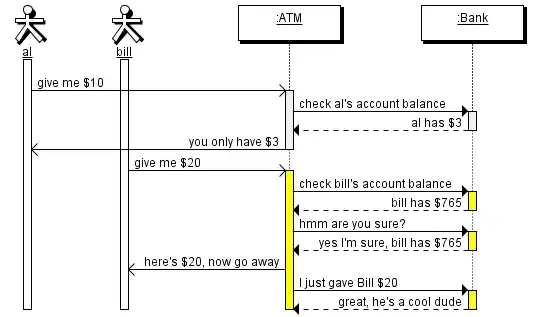

Then I run the following code:

```python
data = pd.read_csv("<link of the csv location>")

# One row per transaction_id, one column per service_type
data_tr = (
    data.groupby(['transaction_id', 'service_type'])
    .sum()
    .unstack()
    .reset_index()
    .fillna(0)
    .set_index('transaction_id')
    .droplevel(0, axis=1)
)

TE = TransactionEncoder()
array = TE.fit(data_tr).transform(data_tr)
data_tr_encoded = pd.DataFrame(array, columns=TE.columns_)

frequent_tr_encoded = apriori(data_tr, min_support=0.05)
```

The result of the final line shows only the column names.

I expect the final code to print a result like this:

EDIT

I have updated my code so that each `service_type` becomes its own column (see the `data_tr` code above).
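The same reshaping idea can be sketched on a tiny long-format table. Only `transaction_id` and `service_type` come from the question; the `quantity` column and all values are hypothetical. Because `apriori` works on presence/absence rather than quantities, the summed values are turned into booleans at the end.

```python
import pandas as pd

# Hypothetical long-format data: one row per (transaction, service) line.
data = pd.DataFrame(
    {
        "transaction_id": [1, 1, 2, 3, 3, 3],
        "service_type": ["wash", "dry", "wash", "wash", "dry", "iron"],
        "quantity": [2, 1, 1, 3, 2, 1],
    }
)

# One row per transaction, one column per service, missing combinations filled with 0.
wide = (
    data.groupby(["transaction_id", "service_type"])["quantity"]
    .sum()
    .unstack(fill_value=0)
)

# Convert quantities to presence/absence (True if the service was bought at all).
basket = wide > 0
print(basket)
```

A table in this form is already one-hot encoded, so it could be passed to `apriori(basket, min_support=..., use_colnames=True)` without going through `TransactionEncoder`.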