How can I convert an objects position in PyBullet to pixel coordinates & draw a line onto the frame using PyBullet & OpenCV?

We would like to do this because PyBullet native addUserDebugLine() function is not available in DIRECT mode.

import pybullet as p

import numpy as np

import time

import pybullet_data

import cv2

VIDEO_RESOLUTION = (1280, 720)

MY_COLORS = [(255,0,0), (0,255,0), (0,0,255)]

def capture_frame(base_pos=[0,0,0], _cam_dist=3, _cam_yaw=45, _cam_pitch=-45):

_render_width, _render_height = VIDEO_RESOLUTION

view_matrix = p.computeViewMatrixFromYawPitchRoll(

cameraTargetPosition=base_pos,

distance=_cam_dist,

yaw=_cam_yaw,

pitch=_cam_pitch,

roll=0,

upAxisIndex=2)

proj_matrix = p.computeProjectionMatrixFOV(

fov=90, aspect=float(_render_width) / _render_height,

nearVal=0.01, farVal=100.0)

(_, _, px, _, _) = p.getCameraImage(

width=_render_width, height=_render_height, viewMatrix=view_matrix,

projectionMatrix=proj_matrix, renderer=p.ER_TINY_RENDERER) # ER_BULLET_HARDWARE_OPENGL)

rgb_array = np.array(px, dtype=np.uint8)

rgb_array = np.reshape(rgb_array, (_render_height, _render_width, 4))

rgb_array = rgb_array[:, :, :3]

return rgb_array, view_matrix, proj_matrix

def render():

frame, vmat, pmat = capture_frame()

p1, cubeOrn = p.getBasePositionAndOrientation(1)

p2, cubeOrn = p.getBasePositionAndOrientation(2)

frame, view_matrix, proj_matrix = capture_frame()

frame = cv2.resize(frame, VIDEO_RESOLUTION)

points = {}

# reshape matrices

my_order = 'C'

pmat = np.array(proj_matrix).reshape((4,4), order=my_order)

vmat = np.array(view_matrix).reshape((4,4), order=my_order)

fmat = vmat.T @ pmat.T

# compute origin from origin point in simulation

origin = np.array([0,0,0,1])

frame_origin = (fmat @ origin)[:3]*np.array([1280, 640, 0]) + np.array([640, 360, 0])

# define unit vectors

unit_vectors = [ np.array([1,0,0,1]),

np.array([0,1,0,1]),

np.array([0,0,1,1]) ]

for col_id, unit_vector in enumerate(unit_vectors):

cur_point = (fmat @ unit_vector)[:3]*np.array([1280, 640, 0]) + np.array([640, 360, 0])

cv2.line(frame, (640,360), (int(cur_point[0]),int(cur_point[1])), color=MY_COLORS[col_id], thickness=2)

cv2.imwrite("my_rendering.jpg", frame)

print(p1,p2)

if __name__ == '__main__':

physicsClient = p.connect(p.DIRECT)#or p.DIRECT for non-graphical version

p.setAdditionalSearchPath(pybullet_data.getDataPath()) #optionally

p.setGravity(0,0,-10)

planeId = p.loadURDF("plane.urdf")

startPos = [1,0,0.2]

startOrientation = p.getQuaternionFromEuler([0,0,0])

boxId = p.loadURDF("r2d2.urdf",startPos, startOrientation)

startPos = [0,2,0.2]

boxId = p.loadURDF("r2d2.urdf",startPos, startOrientation)

#set the center of mass frame (loadURDF sets base link frame) startPos/Ornp.resetBasePositionAndOrientation(boxId, startPos, startOrientation)

for i in range (2400):

if i == 2399:

render()

p.stepSimulation()

p.disconnect()

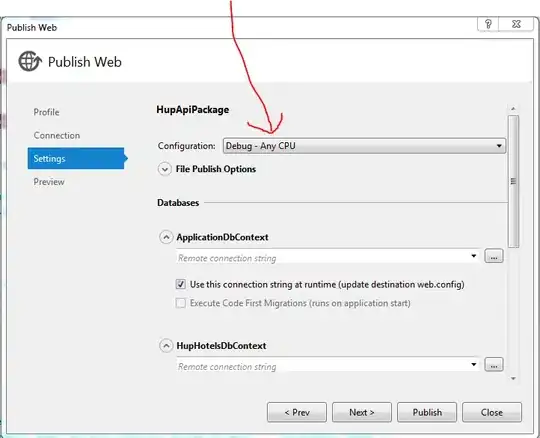

The expected output would be the following frame but with the origin-coordinate frame drawn correctly. E.g. X, Y, and Z axis are colored Red, Blue, and Green respectively.

Since the two R2D2 robots are positioned at [1,0,0] and [0,1,0] respectively, we can see that the coordinate frame is off. (See image below)

We tried the following:

- transposing the matrices

- not transposing the matrices

- changing the order of how we compute fmat e.g. pmat @ vmat instead of vmat @ pmat etc.

Any help is appreciated.