I currently have a RNN model for time series predictions. It uses 3 input features "value", "temperature" and "hour of the day" of the last 96 time steps to predict the next 96 time steps of the feature "value".

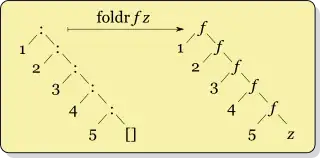

Here you can see a schema of it:

and here you have the current code:

#Import modules

import pandas as pd

import numpy as np

import tensorflow as tf

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import mean_squared_error

from tensorflow import keras

# Define the parameters of the RNN and the training

epochs = 1

batch_size = 50

steps_backwards = 96

steps_forward = 96

split_fraction_trainingData = 0.70

split_fraction_validatinData = 0.90

randomSeedNumber = 50

#Read dataset

df = pd.read_csv('C:/Users/Desktop/TestData.csv', sep=';', header=0, low_memory=False, infer_datetime_format=True, parse_dates={'datetime':[0]}, index_col=['datetime'])

# standardize data

data = df.values

indexWithYLabelsInData = 0

data_X = data[:, 0:3]

data_Y = data[:, indexWithYLabelsInData].reshape(-1, 1)

scaler_standardized_X = StandardScaler()

data_X = scaler_standardized_X.fit_transform(data_X)

data_X = pd.DataFrame(data_X)

scaler_standardized_Y = StandardScaler()

data_Y = scaler_standardized_Y.fit_transform(data_Y)

data_Y = pd.DataFrame(data_Y)

# Prepare the input data for the RNN

series_reshaped_X = np.array([data_X[i:i + (steps_backwards+steps_forward)].copy() for i in range(len(data) - (steps_backwards+steps_forward))])

series_reshaped_Y = np.array([data_Y[i:i + (steps_backwards+steps_forward)].copy() for i in range(len(data) - (steps_backwards+steps_forward))])

timeslot_x_train_end = int(len(series_reshaped_X)* split_fraction_trainingData)

timeslot_x_valid_end = int(len(series_reshaped_X)* split_fraction_validatinData)

X_train = series_reshaped_X[:timeslot_x_train_end, :steps_backwards]

X_valid = series_reshaped_X[timeslot_x_train_end:timeslot_x_valid_end, :steps_backwards]

X_test = series_reshaped_X[timeslot_x_valid_end:, :steps_backwards]

Y_train = series_reshaped_Y[:timeslot_x_train_end, steps_backwards:]

Y_valid = series_reshaped_Y[timeslot_x_train_end:timeslot_x_valid_end, steps_backwards:]

Y_test = series_reshaped_Y[timeslot_x_valid_end:, steps_backwards:]

# Build the model and train it

np.random.seed(randomSeedNumber)

tf.random.set_seed(randomSeedNumber)

model = keras.models.Sequential([

keras.layers.SimpleRNN(10, return_sequences=True, input_shape=[None, 3]),

keras.layers.SimpleRNN(10, return_sequences=True),

keras.layers.TimeDistributed(keras.layers.Dense(1))

])

model.compile(loss="mean_squared_error", optimizer="adam", metrics=['mean_absolute_percentage_error'])

history = model.fit(X_train, Y_train, epochs=epochs, batch_size=batch_size, validation_data=(X_valid, Y_valid))

#Predict the test data

Y_pred = model.predict(X_test)

# Inverse the scaling (traInv: transformation inversed)

data_X_traInv = scaler_standardized_X.inverse_transform(data_X)

data_Y_traInv = scaler_standardized_Y.inverse_transform(data_Y)

series_reshaped_X_notTransformed = np.array([data_X_traInv[i:i + (steps_backwards+steps_forward)].copy() for i in range(len(data) - (steps_backwards+steps_forward))])

X_test_notTranformed = series_reshaped_X_notTransformed[timeslot_x_valid_end:, :steps_backwards]

Y_pred_traInv = scaler_standardized_Y.inverse_transform (Y_pred)

Y_test_traInv = scaler_standardized_Y.inverse_transform (Y_test)

# Calculate errors for every time slot of the multiple predictions

abs_diff = np.abs(Y_pred_traInv - Y_test_traInv)

abs_diff_perPredictedSequence = np.zeros((len (Y_test_traInv)))

average_LoadValue_testData_perPredictedSequence = np.zeros((len (Y_test_traInv)))

abs_diff_perPredictedTimeslot_ForEachSequence = np.zeros((len (Y_test_traInv)))

absoluteError_Load_Ratio_allPredictedSequence = np.zeros((len (Y_test_traInv)))

absoluteError_Load_Ratio_allPredictedTimeslots = np.zeros((len (Y_test_traInv)))

mse_perPredictedSequence = np.zeros((len (Y_test_traInv)))

rmse_perPredictedSequence = np.zeros((len(Y_test_traInv)))

for i in range (0, len(Y_test_traInv)):

for j in range (0, len(Y_test_traInv [0])):

abs_diff_perPredictedSequence [i] = abs_diff_perPredictedSequence [i] + abs_diff [i][j]

mse_perPredictedSequence [i] = mean_squared_error(Y_pred_traInv[i] , Y_test_traInv [i] )

rmse_perPredictedSequence [i] = np.sqrt(mse_perPredictedSequence [i])

abs_diff_perPredictedTimeslot_ForEachSequence [i] = abs_diff_perPredictedSequence [i] / len(Y_test_traInv [0])

average_LoadValue_testData_perPredictedSequence [i] = np.mean (Y_test_traInv [i])

absoluteError_Load_Ratio_allPredictedSequence [i] = abs_diff_perPredictedSequence [i] / average_LoadValue_testData_perPredictedSequence [i]

absoluteError_Load_Ratio_allPredictedTimeslots [i] = abs_diff_perPredictedTimeslot_ForEachSequence [i] / average_LoadValue_testData_perPredictedSequence [i]

rmse_average_allPredictictedSequences = np.mean (rmse_perPredictedSequence)

absoluteAverageError_Load_Ratio_allPredictedSequence = np.mean (absoluteError_Load_Ratio_allPredictedSequence)

absoluteAverageError_Load_Ratio_allPredictedTimeslots = np.mean (absoluteError_Load_Ratio_allPredictedTimeslots)

absoluteAverageError_allPredictedSequences = np.mean (abs_diff_perPredictedSequence)

absoluteAverageError_allPredictedTimeslots = np.mean (abs_diff_perPredictedTimeslot_ForEachSequence)

Here you have some test data Download Test Data

So now I actually would like to include not only past values of the features into the prediction but also future values of the features "temperature" and "hour of the day" into the prediction. The future values of the feature "temperature" can for example be taken from an external weather forecasting service and for the feature "hour of the day" the future values are know before (in the test data I have included a "forecast" of the temperature that is not a real forecast; I just randomly changed the values).

This way, I could assume that - for several applications and data - the forecast could be improved.

In a schema it would look like this:

Can anyone tell me, how I can do that in Keras with a RNN (or LSTM)? One way could be to include the future values as independant features as input. But I would like the model to know that the future values of a feature are connected to the past values of a feature.

Reminder: Does anybody have an idea how to do this? I'll highly appreciate every comment.