I am trying to build a model that classifies signals based on whether or not each signal contains a specific pattern.

My signals are just arrays of 10000 floats each. I have 500 arrays that contain the pattern, and 500 that don't.

This is how I split my dataset:

X_train => Array of signals | shape => (800, 10000)

Y_train => Array of 1s and 0s | shape => (800,)

X_test => Array of signals | shape => (200, 10000)

Y_test => Array of 1s and 0s | shape => (200,)

(X holds the signals, Y the corresponding labels.)
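For reference, the split is along these lines. This is only a minimal sketch with random placeholder data standing in for my real signals; the names `signals` and `labels`, the fixed seed, the shuffling step, and the convention that label 1 means "pattern present" are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, assumed only for reproducibility

# Placeholder data: 1000 signals of 10000 floats each (random noise, not real signals)
signals = rng.standard_normal((1000, 10000), dtype=np.float32)

# 500 signals with the pattern (assumed label 1) and 500 without (assumed label 0)
labels = np.concatenate([np.ones(500, dtype=np.int64), np.zeros(500, dtype=np.int64)])

# Shuffle signals and labels with the same permutation so both classes
# end up in both the training and the test split
order = rng.permutation(len(signals))
signals, labels = signals[order], labels[order]

# 80/20 split into training and test sets
X_train, X_test = signals[:800], signals[800:]
Y_train, Y_test = labels[:800], labels[800:]

print(X_train.shape, Y_train.shape)  # (800, 10000) (800,)
print(X_test.shape, Y_test.shape)    # (200, 10000) (200,)
```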

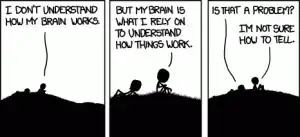

The pattern is just a quick increase in values followed by a quick decrease in values like so (highlighted in red) :

Here is a signal without the pattern for reference :

I'm having a lot of trouble building a model since I'm used to classifying images (so 2D or 3D) but not just a series of points.

I've tried a simple sequential model like so:

    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense

    # Create model: fully connected layers on the raw 10000-sample signal
    model = Sequential()
    model.add(Dense(10000, input_dim=10000, activation='relu'))
    model.add(Dense(64, activation='relu'))
    model.add(Dense(64, activation='relu'))
    model.add(Dense(1, activation='sigmoid'))

    # Compile model for binary classification
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
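For scale, here is a quick count of the trainable parameters in that dense model. It is plain arithmetic on the layer sizes above (weights plus biases per layer); the helper name `dense_params` is made up for this sketch. I suspect, without having confirmed it, that this many parameters against only 800 training signals is part of the problem.

```python
# Layer widths of the dense model: input 10000 -> 10000 -> 64 -> 64 -> 1
layer_sizes = [10000, 10000, 64, 64, 1]


def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


# Pair each layer width with the next one to get (inputs, outputs) per layer
per_layer = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
total = sum(per_layer)

print(per_layer)  # [100010000, 640064, 4160, 65]
print(total)      # 100654289
```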

But it's failing completely. I'd love to implement a CNN, but I have no idea how to do so. When I reuse CNNs that I wrote for image classification, I get a lot of errors about the input, which I think is because it's a 1D signal instead of a 2D or 3D image.

This is what happens with a CNN I used for image classification in the past:

    import tensorflow as tf

    # Image-classification CNN reused as-is on the 1D signals
    model_random = tf.keras.Sequential([
        tf.keras.layers.Conv2D(32, [3,3], activation='relu'),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Conv2D(16, [3,3], activation='relu'),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Conv2D(8, [3,3], activation='relu'),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dense(1)
    ])

    model_random.compile(optimizer='adam',
                         loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
                         metrics=['accuracy'])

    # This call raises the ValueError
    model_random.fit(X_train, Y_train, epochs=30)

    ValueError: Exception encountered when calling layer "sequential_8" (type Sequential).

    Input 0 of layer "conv2d_6" is incompatible with the layer: expected min_ndim=4, found ndim=2. Full shape received: (32, 10000)

    Call arguments received:
      • inputs=tf.Tensor(shape=(32, 10000), dtype=float32)
      • training=True
      • mask=None
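As far as I can tell, the error comes down to the number of dimensions: `Conv2D` wants 4D batches (batch, height, width, channels), while my batches are 2D (batch, 10000). The sketch below only reproduces those shapes with NumPy placeholder data. That a 1D convolution layer such as `tf.keras.layers.Conv1D` takes 3D input of the form (batch, steps, channels) is my reading of the Keras docs, and I have not verified that reshaping this way is enough to make a CNN work on my signals.

```python
import numpy as np

# Placeholder batch with the shape from the error message: (32, 10000)
batch = np.zeros((32, 10000), dtype=np.float32)
print(batch.ndim)  # 2, while Conv2D expects at least 4 dimensions

# Adding a trailing channel axis gives (batch, steps, channels),
# i.e. one channel per time step
batch_1d = batch[..., np.newaxis]
print(batch_1d.shape)  # (32, 10000, 1)
```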