I'm trying to capture a single image from an H.264 video stream on my Raspberry Pi. The stream is produced by raspivid and served over a websocket. However, imshow() does not display a correct image. I also tried adding .reshape(), but got ValueError: cannot reshape array of size 3607 into shape (480,640,3)

On the client side, I can connect to the video stream and receive the incoming bytes. The server uses raspivid-broadcaster for the video streaming. My guess was that the first binary message could be decoded into an image, so I wrote the following code.

    import asyncio
    import json

    import cv2
    import numpy
    import websockets


    async def get_image_from_h264_streaming():
        uri = "ws://127.0.0.1:8080"
        async with websockets.connect(uri) as websocket:
            # The first message is a JSON header with the stream dimensions
            frame = json.loads(await websocket.recv())
            print(frame)
            width, height = frame["width"], frame["height"]

            # The next message is the first chunk of binary data
            response = await websocket.recv()
            print(response)

            # transform the byte read into a numpy array
            in_frame = (
                numpy
                .frombuffer(response, numpy.uint8)
                # .reshape([height, width, 3])
            )

            # Display the frame
            cv2.imshow('in_frame', in_frame)
            cv2.waitKey(0)


    asyncio.get_event_loop().run_until_complete(get_image_from_h264_streaming())

print(frame) shows

{'action': 'init', 'width': 640, 'height': 480}

print(response) shows

b"\x00\x00\x00\x01'B\x80(\x95\xa0(\x0fh\x0..............xfc\x9f\xff\xf9?\xff\xf2\x7f\xff\xe4\x80"
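One way to see why the reshape fails is to look at what the first binary message actually contains. The sketch below (the helper name `nal_unit_type` is made up for illustration) reads the H.264 Annex B header from the first few bytes of the printed response: after the `00 00 00 01` start code, the low five bits of the next byte give the NAL unit type. For the bytes shown above this comes out as 7, a sequence parameter set, which carries stream configuration rather than pixels. The sizes do not match either: a raw 640x480 three-channel frame needs 921600 bytes, while the message holds 3607.

```python
START_CODE = b"\x00\x00\x00\x01"  # Annex B start code seen at the front of the message

# A few well-known H.264 NAL unit types (not an exhaustive table)
NAL_TYPE_NAMES = {1: "non-IDR slice", 5: "IDR slice", 7: "SPS", 8: "PPS"}


def nal_unit_type(message: bytes) -> int:
    """Return the NAL unit type of a message that begins with a start code."""
    if not message.startswith(START_CODE):
        raise ValueError("message does not begin with an Annex B start code")
    # The NAL header is the byte right after the start code;
    # its low 5 bits hold nal_unit_type.
    return message[len(START_CODE)] & 0x1F


# First bytes of the response printed above: 00 00 00 01 27 42 80 28
head = b"\x00\x00\x00\x01'B\x80("
nal_type = nal_unit_type(head)
print(nal_type, NAL_TYPE_NAMES.get(nal_type, "other"))

# Bytes a raw 640x480 3-channel frame would need, versus the 3607 received
print(640 * 480 * 3)
```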

Any suggestions?

---------------------------------- EDIT ----------------------------------

Thanks for this suggestion. Here is my updated code.

    import asyncio

    import av
    import cv2
    import websockets


    def decode(raw_bytes: bytes):
        code_ctx = av.CodecContext.create("h264", "r")
        packets = code_ctx.parse(raw_bytes)
        for i, packet in enumerate(packets):
            frames = code_ctx.decode(packet)
            if frames:
                # Return the first frame the decoder produces
                return frames[0].to_ndarray()


    async def save_img():
        async with websockets.connect("ws://127.0.0.1:8080") as websocket:
            # Skip the JSON init message
            image_init = await websocket.recv()

            # Concatenate the first three binary messages
            count = 0
            combined = b''
            while count < 3:
                response = await websocket.recv()
                combined += response
                count += 1

            frame = decode(combined)
            print(frame)
            cv2.imwrite('test.jpg', frame)


    asyncio.get_event_loop().run_until_complete(save_img())

print(frame) shows

    [[109 109 109 ... 115 97 236]
     [109 109 109 ... 115 97 236]
     [108 108 108 ... 115 97 236]
     ...
     [111 111 111 ... 101 103 107]
     [110 110 110 ... 101 103 107]
     [112 112 112 ... 104 106 110]]

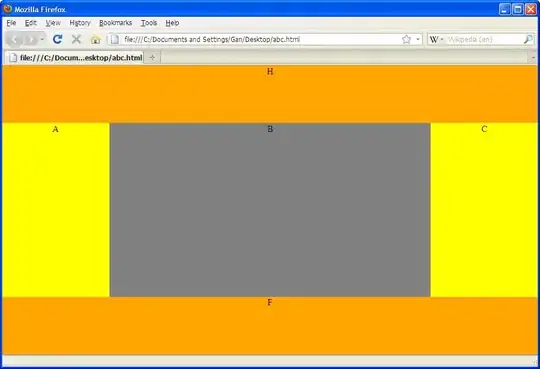

The saved image has the wrong size: 740 (height) x 640 (width), while the correct size is 480 (height) x 640 (width). I'm also not sure why the image is grayscale instead of color.
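A possible explanation for both symptoms, offered as an assumption rather than a confirmed diagnosis: the decoder hands back frames in planar YUV 4:2:0 (`yuv420p`), and PyAV's `to_ndarray()` called without a `format` argument keeps that layout. In it, the full-resolution Y plane is followed by the quarter-size U and V planes, all stacked in one single-channel 2-D array, so the array is 1.5 times as tall as the picture and `cv2.imwrite` saves it as grayscale. The sketch below only does the arithmetic (the helper name `i420_array_shape` is hypothetical); for a 640x480 stream it gives 720 rows, close to the height reported here.

```python
def i420_array_shape(width: int, height: int) -> tuple:
    """Shape of a planar YUV 4:2:0 frame stored as one 2-D uint8 array."""
    y_bytes = width * height                  # full-resolution luma plane
    u_bytes = (width // 2) * (height // 2)    # chroma planes are subsampled 2x2
    v_bytes = (width // 2) * (height // 2)
    total = y_bytes + u_bytes + v_bytes
    # All three planes are laid out row after row at the luma width
    return (total // width, width)


print(i420_array_shape(640, 480))
```

If that assumption holds, asking PyAV for a packed color format, for example `frames[0].to_ndarray(format="bgr24")`, should yield a `(480, 640, 3)` array that OpenCV can write directly.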

---------------------------------- EDIT 2 ----------------------------------

Below is the main code that sends the data in raspivid.

raspivid - index.js

    const {port, ...raspividOptions} = {...options, profile: 'baseline', timeout: 0};

    videoStream = raspivid(raspividOptions)
        .pipe(new Splitter(NALSeparator))
        .pipe(new stream.Transform({
            transform: function (chunk, _encoding, callback) {
                ...
                callback();
            }
        }));

    videoStream.on('data', (data) => {
        wsServer.clients.forEach((socket) => {
            socket.send(data, {binary: true});
        });
    });
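To relate this to the Python client: piping through `Splitter(NALSeparator)` suggests that the server cuts the raspivid output at NAL unit boundaries, so each binary websocket message would carry one NAL unit. A rough Python equivalent is sketched below. It assumes `NALSeparator` is the four-byte start code `00 00 00 01` (its definition is not shown in the excerpt) and that each unit is sent with the start code in front, as the printed response suggests; `split_nal_units` is an illustrative name, not part of either library.

```python
from typing import List

START_CODE = b"\x00\x00\x00\x01"  # assumed value of NALSeparator


def split_nal_units(stream: bytes) -> List[bytes]:
    """Split an Annex B byte stream into NAL units, each keeping its start code."""
    pieces = stream.split(START_CODE)
    # Splitting leaves an empty piece before the first start code; drop it
    return [START_CODE + piece for piece in pieces if piece]


# Made-up stream: an SPS (0x27), a PPS (0x28) and an IDR slice (0x25) with dummy payloads
stream = START_CODE + b"\x27abc" + START_CODE + b"\x28de" + START_CODE + b"\x25xyz"
units = split_nal_units(stream)
for unit in units:
    # Low 5 bits of the byte after the start code give the NAL unit type
    print(len(unit), unit[len(START_CODE)] & 0x1F)
```

Under these assumptions a decodable picture needs at least the SPS, the PPS and an IDR slice, which may be why concatenating the first three messages was enough for the decoder to produce a frame.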

stream-split - index.js (one line of code shows that the default buffer size is 1 MB)

    class Splitter extends Transform {
        constructor(separator, options) {
            ...
            this.bufferSize = options.bufferSize || 1024 * 1024 * 1; //1Mb
            ...
        }

        _transform(chunk, encoding, next) {
            if (this.offset + chunk.length > this.bufferSize - this.bufferFlush) {
                var minimalLength = this.bufferSize - this.bodyOffset + chunk.length;
                if (this.bufferSize < minimalLength) {
                    //console.warn("Increasing buffer size to ", minimalLength);
                    this.bufferSize = minimalLength;
                }

                var tmp = new Buffer(this.bufferSize);
                this.buffer.copy(tmp, 0, this.bodyOffset);
                this.buffer = tmp;
                this.offset = this.offset - this.bodyOffset;
                this.bodyOffset = 0;
            }
            ...
        }
    };

----------Completed Answer (Thanks Ann and Christoph for the direction)----------

Please see the answer section.