I need to detect which spheres are connected to each other. If we have:

radii = np.array([2, 1, 1, 2, 2, 0.5])

poss = np.array([[7, 7, 7], [7.5, 8.5, 6], [0, 0, 0], [-1, -2, -1], [1, 1, 1], [2, 1, 3]])

I want a Boolean array (shape = (number of groups, number of spheres)) or array/lists of arrays/lists of indices that shows which of the spheres are connected. So, the expected results for this example must be something like:

Boolean_array = np.array([[1, 1, 0, 0, 0, 0], [0, 0, 1, 1, 1, 1]], dtype=bool)

object_array = np.array([[0, 1], [2, 3, 4, 5]])

I tried to find a solution with networkx (I'm not very familiar with it) and IDK if this library can help where we have spheres with different radii. I guess, returned ends_ind in my previous code can be helpful in this regard and I tried to use that as:

G = nx.Graph([*ends_ind])

L = [nx.node_connected_component(G, 0)]

for i in range(len(radii)):

iter = 0

for j in L:

if i in j:

iter += 1

if iter == 0:

L.append(nx.node_connected_component(G, i))

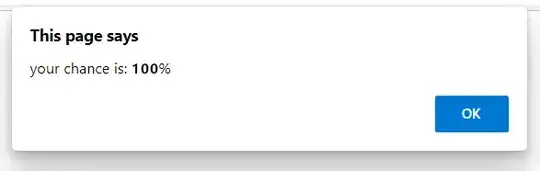

Which will not work. The error:

Traceback (most recent call last):

File "C:/Users/Ali/Desktop/check_2.py", line 31, in <module>

L.append(nx.node_connected_component(G, i))

File "<class 'networkx.utils.decorators.argmap'> compilation 8", line 4, in argmap_node_connected_component_5

File "C:\Users\Ali\anaconda3\envs\PFC_FiPy\lib\site-packages\networkx\algorithms\components\connected.py", line 185, in node_connected_component

return _plain_bfs(G, n)

File "C:\Users\Ali\anaconda3\envs\PFC_FiPy\lib\site-packages\networkx\algorithms\components\connected.py", line 199, in _plain_bfs

nextlevel.update(G_adj[v])

File "C:\Users\Ali\anaconda3\envs\PFC_FiPy\lib\site-packages\networkx\classes\coreviews.py", line 82, in __getitem__

return AtlasView(self._atlas[name])

KeyError: 11

Since using my previous code with other libraries will be an inefficient code (if it can solve the issue), I am seeking for any libraries, e.g. networkx, or methods that can do it in a more efficient way, if possible.

What is the best way to get my expected results, particularly for large number of spheres (~100000).