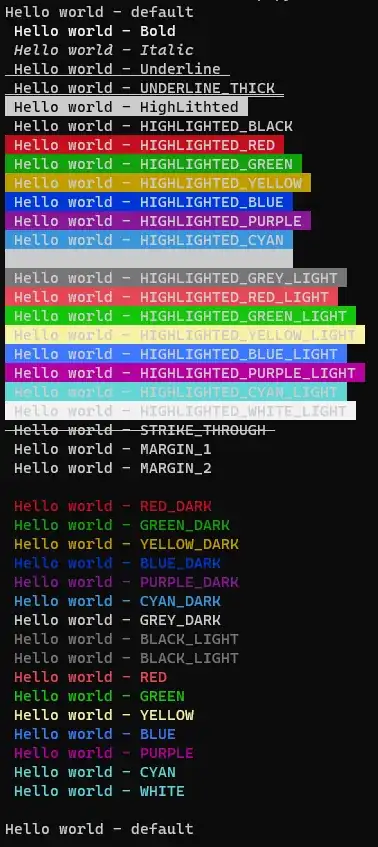

I am working on a spatial search case for spheres in which I want to find connected spheres. For this aim, I searched around each sphere for spheres that centers are in a (maximum sphere diameter) distance from the searching sphere’s center. At first, I tried to use scipy related methods to do so, but scipy method takes longer times comparing to equivalent numpy method. For scipy, I have determined the number of K-nearest spheres firstly and then find them by cKDTree.query, which lead to more time consumption. However, it is slower than numpy method even by omitting the first step with a constant value (it is not good to omit the first step in this case). It is contrary to my expectations about scipy spatial searching speed. So, I tried to use some list-loops instead some numpy lines for speeding up using numba prange. Numba run the code a little faster, but I believe that this code can be optimized for better performances, perhaps by vectorization, using other alternative numpy modules or using numba in another way. I have used iteration on all spheres due to prevent probable memory leaks and …, where number of spheres are high.

import numpy as np

import numba as nb

from scipy.spatial import cKDTree, distance

# ---------------------------- input data ----------------------------

""" For testing by prepared files:

radii = np.load('a.npy') # shape: (n-spheres, ) must be loaded by np.load('a.npy') or np.loadtxt('radii_large.csv')

poss = np.load('b.npy') # shape: (n-spheres, 3) must be loaded by np.load('b.npy') or np.loadtxt('pos_large.csv', delimiter=',')

"""

rnd = np.random.RandomState(70)

data_volume = 200000

radii = rnd.uniform(0.0005, 0.122, data_volume)

dia_max = 2 * radii.max()

x = rnd.uniform(-1.02, 1.02, (data_volume, 1))

y = rnd.uniform(-3.52, 3.52, (data_volume, 1))

z = rnd.uniform(-1.02, -0.575, (data_volume, 1))

poss = np.hstack((x, y, z))

# --------------------------------------------------------------------

# @nb.jit('float64[:,::1](float64[:,::1], float64[::1])', forceobj=True, parallel=True)

def ends_gap(poss, dia_max):

particle_corsp_overlaps = np.array([], dtype=np.float64)

ends_ind = np.empty([1, 2], dtype=np.int64)

""" using list looping """

# particle_corsp_overlaps = []

# ends_ind = []

# for particle_idx in nb.prange(len(poss)): # by list looping

for particle_idx in range(len(poss)):

unshared_idx = np.delete(np.arange(len(poss)), particle_idx) # <--- relatively high time consumer

poss_without = poss[unshared_idx]

""" # SCIPY method ---------------------------------------------------------------------------------------------

nears_i_ind = cKDTree(poss_without).query_ball_point(poss[particle_idx], r=dia_max) # <--- high time consumer

if len(nears_i_ind) > 0:

dist_i, dist_i_ind = cKDTree(poss_without[nears_i_ind]).query(poss[particle_idx], k=len(nears_i_ind)) # <--- high time consumer

if not isinstance(dist_i, float):

dist_i[dist_i_ind] = dist_i.copy()

""" # NUMPY method --------------------------------------------------------------------------------------------

lx_limit_idx = poss_without[:, 0] <= poss[particle_idx][0] + dia_max

ux_limit_idx = poss_without[:, 0] >= poss[particle_idx][0] - dia_max

ly_limit_idx = poss_without[:, 1] <= poss[particle_idx][1] + dia_max

uy_limit_idx = poss_without[:, 1] >= poss[particle_idx][1] - dia_max

lz_limit_idx = poss_without[:, 2] <= poss[particle_idx][2] + dia_max

uz_limit_idx = poss_without[:, 2] >= poss[particle_idx][2] - dia_max

nears_i_ind = np.where(lx_limit_idx & ux_limit_idx & ly_limit_idx & uy_limit_idx & lz_limit_idx & uz_limit_idx)[0]

if len(nears_i_ind) > 0:

dist_i = distance.cdist(poss_without[nears_i_ind], poss[particle_idx][None, :]).squeeze() # <--- relatively high time consumer

# """ # -------------------------------------------------------------------------------------------------------

contact_check = dist_i - (radii[unshared_idx][nears_i_ind] + radii[particle_idx])

connected = contact_check[contact_check <= 0]

particle_corsp_overlaps = np.concatenate((particle_corsp_overlaps, connected))

""" using list looping """

# if len(connected) > 0:

# for value_ in connected:

# particle_corsp_overlaps.append(value_)

contacts_ind = np.where([contact_check <= 0])[1]

contacts_sec_ind = np.array(nears_i_ind)[contacts_ind]

sphere_olps_ind = np.where((poss[:, None] == poss_without[contacts_sec_ind][None, :]).all(axis=2))[0] # <--- high time consumer

ends_ind_mod_temp = np.array([np.repeat(particle_idx, len(sphere_olps_ind)), sphere_olps_ind], dtype=np.int64).T

if particle_idx > 0:

ends_ind = np.concatenate((ends_ind, ends_ind_mod_temp))

else:

ends_ind[0, 0], ends_ind[0, 1] = ends_ind_mod_temp[0, 0], ends_ind_mod_temp[0, 1]

""" using list looping """

# for contacted_idx in sphere_olps_ind:

# ends_ind.append([particle_idx, contacted_idx])

# ends_ind_org = np.array(ends_ind) # using lists

ends_ind_org = ends_ind

ends_ind, ends_ind_idx = np.unique(np.sort(ends_ind_org), axis=0, return_index=True) # <--- relatively high time consumer

gap = np.array(particle_corsp_overlaps)[ends_ind_idx]

return gap, ends_ind, ends_ind_idx, ends_ind_org

In one of my tests on 23000 spheres, scipy, numpy, and numba-aided methods finished the loop in about 400, 200, and 180 seconds correspondingly using Colab TPU; for 500.000 spheres it take 3.5 hours. These execution times are not satisfying at all for my project, where number of spheres may be up to 1.000.000 in a medium data volume. I will call this code many times in my main code and seeking for ways that could perform this code in milliseconds (as much as fastest that it could). Is it possible?? I would be appreciated if anyone would speed up the code as it is needed.

Notes:

- This code must be executable with python 3.7+, on CPU and GPU.

- This code must be applicable for data size, at least, 300.000 spheres.

- All numpy, scipy, and … equivalent modules instead of my written modules, which make my code faster significantly, will be upvoted.

I would be appreciated for any recommendations or explanations about:

- Which method could be faster in this subject?

- Why scipy is not faster than other methods in this case and where it could be helpful relating to this subject?

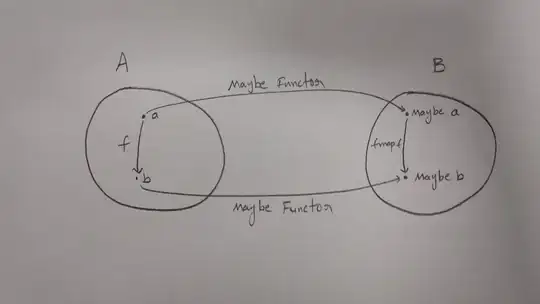

- Choosing between iterator methods and matrix form methods is a confusing matter for me. Iterating methods use less memory and could be used and tuned up by numba and … but, I think, are not useful and comparable with matrix methods (which depends on memory limits) like numpy and … for huge sphere numbers. For this case, perhaps I could omit the iteration by numpy, but I guess strongly that it cannot be handled due to huge matrix size operations and memory leaks.

Prepared sample test data:

Poss data: 23000, 500000

Radii data: 23000, 500000

Line by line speed test logs: for two test cases scipy method and numpy time consumption.