I was able to create YARN containers for my Spark jobs. I have read various blogs and watched YouTube videos on how to set `--executor-cores` efficiently (values from 4 to 6 for good throughput) and `--executor-memory` after reserving 1 CPU core and 1 GB of RAM for the Hadoop daemons, and I determined the right values for each executor.
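The rule of thumb described above can be written down as a small calculation. This is a minimal sketch, assuming the blog heuristic (reserve 1 core and 1 GB for the Hadoop daemons, about 5 cores per executor, split the remaining memory evenly). It approximates the overhead as 10% of executor memory, which mirrors Spark's default overhead factor but ignores its 384 MiB minimum. The function name `size_executors` is hypothetical.

```python
import math


def size_executors(node_vcores, node_mem_gb, cores_per_executor=5,
                   reserved_cores=1, reserved_mem_gb=1, overhead_fraction=0.10):
    """Hypothetical rule-of-thumb executor sizing for one worker node.

    Returns (executors_per_node, executor_heap_gb) under the assumed heuristic:
    reserve cores/memory for Hadoop daemons, give each executor a fixed number
    of cores, and split the remaining memory evenly between executors.
    """
    usable_cores = node_vcores - reserved_cores
    usable_mem_gb = node_mem_gb - reserved_mem_gb
    executors = usable_cores // cores_per_executor
    if executors == 0:
        raise ValueError("node has too few cores for one executor")
    # Memory available to each executor's YARN container (heap + overhead).
    container_gb = usable_mem_gb / executors
    # Overhead is modelled as a fraction of the heap, so heap * (1 + f) = container.
    heap_gb = math.floor(container_gb / (1 + overhead_fraction))
    return executors, heap_gb


# c5.2xlarge core node from the example: 8 vCores, 16 GiB
print(size_executors(8, 16))  # (1, 13)
```

With these assumptions, an 8-vCore node leaves room for only one 5-core executor, which is worth keeping in mind when comparing against what the Spark UI reports.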

I also came across articles like these.

I am checking how many containers YARN creates from the Spark shell, and I do not understand how the containers are allocated.
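To compare what Spark reports with what YARN actually granted, both expose REST endpoints: Spark's monitoring API (`/api/v1/applications/<app-id>/executors`, served by the driver UI, port 4040 by default) and the YARN ResourceManager's Cluster Application API (`/ws/v1/cluster/apps/<app-id>`, port 8088 by default). The Python sketch below is a minimal example. The helper names, the default host names, and the assumption that the driver UI is reachable directly (on YARN it may redirect through the ResourceManager proxy) are illustrative assumptions, not part of either API.

```python
import json
from urllib.request import urlopen


def fetch_json(url):
    """GET a URL and decode its JSON body."""
    with urlopen(url) as resp:
        return json.load(resp)


def spark_executor_summary(executors):
    """Summarize Spark's /api/v1/applications/<app-id>/executors list.

    The driver is listed with id "driver" but does not run in an executor
    container, so it is excluded from the counts.
    """
    workers = [e for e in executors if e["id"] != "driver"]
    return {
        "executors": len(workers),
        "executor_cores": sum(e["totalCores"] for e in workers),
    }


def yarn_app_summary(app_doc):
    """Extract allocation fields from YARN's /ws/v1/cluster/apps/<app-id> response."""
    app = app_doc["app"]
    return {
        "containers": app["runningContainers"],  # includes the ApplicationMaster
        "vcores": app["allocatedVCores"],
        "memory_mb": app["allocatedMB"],
    }


def report(app_id, spark_ui="http://localhost:4040",
           resource_manager="http://localhost:8088"):
    """Print Spark's view and YARN's view of the same application.

    The default host/port values are assumptions; adjust them for your cluster.
    """
    spark = fetch_json(f"{spark_ui}/api/v1/applications/{app_id}/executors")
    yarn = fetch_json(f"{resource_manager}/ws/v1/cluster/apps/{app_id}")
    print("Spark:", spark_executor_summary(spark))
    print("YARN: ", yarn_app_summary(yarn))
```

Usage, from a Python prompt on the master node: `report("application_...")`, passing the ID that `sc.applicationId` prints in the spark-shell. YARN's `allocatedVCores` is what the scheduler granted, which may differ from the `totalCores` Spark shows.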

For example, I created an EMR cluster with 1 master node of type m5.xlarge (4 vCores, 16 GiB RAM) and 1 core node of type c5.2xlarge (8 vCores, 16 GiB RAM).

When I start the Spark shell with the following command: `spark-shell --num-executors=6 --executor-cores=5 --conf spark.executor.memoryOverhead=1G --executor-memory 1G --driver-memory 1G`
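Spelled out, the command above requests the following executor resources. This is a minimal sketch that assumes each executor container is sized as `--executor-memory` plus `spark.executor.memoryOverhead`, and it ignores the driver, the ApplicationMaster, and any rounding YARN applies to container sizes. `requested_resources` is a hypothetical helper.

```python
def requested_resources(num_executors, executor_cores, executor_mem_mb, overhead_mb):
    """Total executor resources requested by a spark-shell/spark-submit command.

    Each executor runs in one YARN container sized heap + overhead.
    The driver and ApplicationMaster are not included.
    """
    container_mb = executor_mem_mb + overhead_mb
    return {
        "container_mb": container_mb,
        "total_vcores": num_executors * executor_cores,
        "total_memory_mb": num_executors * container_mb,
    }


# --num-executors=6 --executor-cores=5 --executor-memory 1G
# --conf spark.executor.memoryOverhead=1G
print(requested_resources(6, 5, 1024, 1024))
# {'container_mb': 2048, 'total_vcores': 30, 'total_memory_mb': 12288}
```

So the request asks for 30 vCores in total, against 8 vCores on the single core node.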

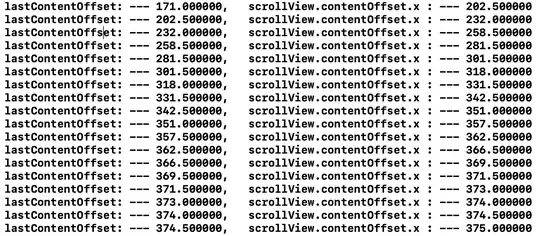

I see in the Spark UI that 6 executors, including the driver, are created, with 5 cores per executor for a total of 25 cores.

However, the metrics in the Hadoop history server do not match these calculations.

I am very confused about how the Spark UI can show more cores allocated to the executors than the cluster has. Counting only the core node, the cluster has 8 vCores in total, yet a total of 25 cores are allocated to the executors.
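One way to reason about these numbers is to compare how many executor containers fit on the core node when the scheduler counts only memory versus when it also counts vCores. YARN's CapacityScheduler uses `DefaultResourceCalculator` (memory only) unless `yarn.scheduler.capacity.resource-calculator` is set to `DominantResourceCalculator`. Whether this EMR cluster uses the default is an assumption here, as are the 12288 MB of NodeManager memory and the roughly 1 GB / 1 vCore ApplicationMaster container used in the example call. This is a simplified sketch, not YARN's actual allocation algorithm; `executors_that_fit` is a hypothetical helper.

```python
def executors_that_fit(node_mem_mb, node_vcores, container_mb, executor_cores,
                       requested, am_mb=0, am_vcores=0, count_vcores=False):
    """Simplified model of how many executor containers one node can host.

    count_vcores=False approximates memory-only accounting
    (YARN's DefaultResourceCalculator); count_vcores=True also requires
    free vCores, as DominantResourceCalculator does. The ApplicationMaster
    container, if given, is assumed to be placed on the same node first.
    """
    fit = (node_mem_mb - am_mb) // container_mb
    if count_vcores:
        fit = min(fit, (node_vcores - am_vcores) // executor_cores)
    return min(fit, requested)


# Assumed values: 12288 MB of NodeManager memory on the c5.2xlarge core node,
# a ~1 GB / 1 vCore ApplicationMaster, and 2048 MB (1G heap + 1G overhead)
# executor containers with 5 cores each, 6 requested.
for count_vcores in (False, True):
    n = executors_that_fit(12288, 8, 2048, 5, requested=6,
                           am_mb=1024, am_vcores=1, count_vcores=count_vcores)
    print(f"count_vcores={count_vcores}: {n} executors, {n * 5} executor cores")
```

Under these assumptions, the memory-only model allows 5 executors (25 executor cores) on an 8-vCore node because vCores are never checked, while vCore-aware accounting would fit only 1 executor.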

Can someone please explain what I am missing?