I am following this course to learn computer graphics and write my first ray tracer.

I already get visible output, but the rendered objects appear much too large.

The overall algorithm the course outlines is this:

    Image Raytrace (Camera cam, Scene scene, int width, int height)
    {
        Image image = new Image (width, height) ;
        for (int i = 0 ; i < height ; i++)
            for (int j = 0 ; j < width ; j++) {
                Ray ray = RayThruPixel (cam, i, j) ;
                Intersection hit = Intersect (ray, scene) ;
                image[i][j] = FindColor (hit) ;
            }
        return image ;
    }

I perform all calculations in camera space (where the camera is at (0, 0, 0)). Thus RayThruPixel returns a ray in camera coordinates, Intersect returns an intersection point also in camera coordinates, and the image pixel array is a direct mapping from the intersection results.

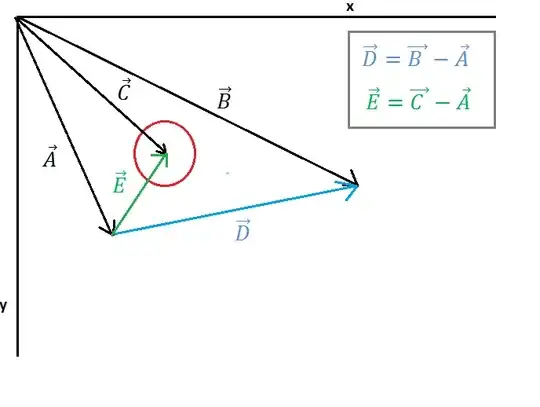

The image is a rendering of a sphere centered at world coordinates (0, 0, -40000) with radius 0.15, seen from a camera at world coordinates (0, 0, 2) looking towards (0, 0, 0). I would expect the sphere to be far smaller given its small radius and distant z coordinate.
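
As a rough sanity check on that expectation, the on-screen size of an object on the view axis can be estimated from the pinhole camera model. The sketch below is only an estimate under stated assumptions: `projectedRadiusPixels`, `fovyDegrees` and `imageHeight` are hypothetical names, and the post does not give the actual field of view or resolution.

```cpp
#include <cmath>

// Approximate on-screen radius, in pixels, of a small sphere centred on the view axis.
// fovyDegrees and imageHeight are hypothetical parameters; the post does not state them.
double projectedRadiusPixels(double radius, double distance,
                             double fovyDegrees, int imageHeight) {
    const double pi = 3.14159265358979323846;
    const double halfFovy = fovyDegrees * pi / 360.0;  // half the vertical field of view, in radians
    // At the given distance the visible half-height is distance * tan(fovy / 2),
    // and that half-height maps to imageHeight / 2 pixels.
    const double halfHeightAtDistance = distance * std::tan(halfFovy);
    return radius / halfHeightAtDistance * (imageHeight * 0.5);
}
```

With an assumed 90 degree vertical field of view and a 480 pixel tall image, `projectedRadiusPixels(0.15, 40002.0, 90.0, 480)` comes out at roughly 0.0009 pixels, so under those assumptions the sphere should not be visible at all.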

The same thing happens with rendering triangles too. In the below image I have 2 triangles that form a square, but it's way too zoomed in. The triangles have coordinates between -1 and 1, and the camera is looking from world coordinates (0, 0, 4).

This is what the square is expected to look like:

Here is the code snippet I use to determine the collision with the sphere. I'm not sure if I should divide the radius by the z coordinate here - without it, the circle is even larger:

    Sphere* sphere = dynamic_cast<Sphere*>(object);
    float t;
    vec3 p0 = ray->origin;
    vec3 p1 = ray->direction;
    float a = glm::dot(p1, p1);
    vec3 center2 = vec3(modelview * object->transform * glm::vec4(sphere->center, 1.0f)); // camera coords
    float b = 2 * glm::dot(p1, (p0 - center2));
    float radius = sphere->radius / center2.z;
    float c = glm::dot((p0 - center2), (p0 - center2)) - radius * radius;
    float D = b * b - 4 * a * c;
    if (D > 0) {
        // two roots
        float sqrtD = glm::sqrt(D);
        float root1 = (-b + sqrtD) / (2 * a);
        float root2 = (-b - sqrtD) / (2 * a);
        if (root1 > 0 && root2 > 0) {
            t = glm::min(root1, root2);
            found = true;
        }
        else if (root2 < 0 && root1 >= 0) {
            t = root1;
            found = true;
        }
        else {
            // should not happen, implies that both roots are negative
        }
    }
    else if (D == 0) {
        // one root
        float root = -b / (2 * a);
        t = root;
        found = true;
    }
    else if (D < 0) {
        // no roots
        // continue;
    }
    if (found) {
        hitVector = p0 + p1 * t;
        hitNormal = glm::normalize(result->hitVector - center2);
    }
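
For comparison, here is a minimal, self-contained sketch of the textbook ray-sphere quadratic that uses the radius unchanged. It is not the course's reference code: `Vec3`, `dot` and `intersectSphere` are hypothetical stand-ins for the glm types above, and it assumes the transforms involved are rigid (rotation and translation only), so the radius is the same in camera space as in world space.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };

inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Solves |origin + t * direction - center|^2 = radius^2 for t.
// On a hit, writes the smallest non-negative t to tHit and returns true.
bool intersectSphere(Vec3 origin, Vec3 direction, Vec3 center, double radius, double& tHit) {
    const Vec3 oc = origin - center;
    const double a = dot(direction, direction);
    const double b = 2.0 * dot(direction, oc);
    const double c = dot(oc, oc) - radius * radius;  // radius used as-is, no division by z
    const double disc = b * b - 4.0 * a * c;
    if (disc < 0.0) return false;                    // no real roots: the ray misses
    const double sqrtDisc = std::sqrt(disc);
    const double tNear = (-b - sqrtDisc) / (2.0 * a);
    const double tFar = (-b + sqrtDisc) / (2.0 * a);
    if (tNear >= 0.0) { tHit = tNear; return true; } // sphere in front of the origin
    if (tFar >= 0.0) { tHit = tFar; return true; }   // origin is inside the sphere
    return false;                                    // sphere entirely behind the ray
}
```

For a ray from the origin along (0, 0, -1) and a unit sphere centred at (0, 0, -5), this reports a hit at t = 4, the near surface of the sphere.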

Here I generate the ray going through the relevant pixel:

    Ray* RayThruPixel(Camera* camera, int x, int y) {
        const vec3 a = eye - center;
        const vec3 b = up;
        const vec3 w = glm::normalize(a);
        const vec3 u = glm::normalize(glm::cross(b, w));
        const vec3 v = glm::cross(w, u);
        const float aspect = ((float)width) / height;
        float fovyrad = glm::radians(camera->fovy);
        const float fovx = 2 * atan(tan(fovyrad * 0.5) * aspect);
        const float alpha = tan(fovx * 0.5) * (x - (width * 0.5)) / (width * 0.5);
        const float beta = tan(fovyrad * 0.5) * ((height * 0.5) - y) / (height * 0.5);
        return new Ray(
            /* origin= */ vec3(modelview * vec4(eye, 1.0f)),
            /* direction= */ glm::normalize(vec3(modelview * glm::normalize(vec4(alpha * u + beta * v - w, 1.0f)))));
    }
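
For reference, when everything is done in camera space the pixel ray can be written down directly, without the u, v, w basis or the modelview matrix. The sketch below assumes the OpenGL look-at convention (camera at the origin, looking down -z, +y up), which the post does not confirm; `rayThruPixelCameraSpace` and its parameters are hypothetical names, with `x` as the pixel column and `y` as the pixel row.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };
struct Ray { Vec3 origin; Vec3 direction; };

// Camera-space ray through pixel (x, y): x is the column, y is the row.
// Assumes the camera sits at the origin and looks down -z with +y up.
Ray rayThruPixelCameraSpace(int x, int y, int width, int height, double fovyDegrees) {
    const double pi = 3.14159265358979323846;
    const double tanHalfFovy = std::tan(fovyDegrees * pi / 360.0);
    const double aspect = static_cast<double>(width) / height;
    const double tanHalfFovx = tanHalfFovy * aspect;  // equals tan(fovx / 2) from the post
    // Sample the pixel centre, hence the +0.5.
    const double alpha = tanHalfFovx * ((x + 0.5) - width * 0.5) / (width * 0.5);
    const double beta = tanHalfFovy * (height * 0.5 - (y + 0.5)) / (height * 0.5);
    const double len = std::sqrt(alpha * alpha + beta * beta + 1.0);
    // The direction is a vector, not a point, so no translation is applied to it.
    return Ray{{0.0, 0.0, 0.0}, {alpha / len, beta / len, -1.0 / len}};
}
```

If the modelview matrix is the usual look-at matrix, this should match building `alpha * u + beta * v - w` in world space and transforming it as a direction (homogeneous w = 0) rather than as a point (w = 1).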

And intersection with a triangle:

    Triangle* triangle = dynamic_cast<Triangle*>(object);
    // vertices in camera coords
    vec3 vertex1 = vec3(modelview * object->transform * vec4(*vertices[triangle->index1], 1.0f));
    vec3 vertex2 = vec3(modelview * object->transform * vec4(*vertices[triangle->index2], 1.0f));
    vec3 vertex3 = vec3(modelview * object->transform * vec4(*vertices[triangle->index3], 1.0f));
    vec3 N = glm::normalize(glm::cross(vertex2 - vertex1, vertex3 - vertex1));
    float D = -glm::dot(N, vertex1);
    float m = glm::dot(N, ray->direction);
    if (m == 0) {
        // no intersection because ray parallel to plane
    }
    else {
        float t = -(glm::dot(N, ray->origin) + D) / m;
        if (t < 0) {
            // no intersection because ray goes away from triangle plane
        }
        vec3 Phit = ray->origin + t * ray->direction;
        vec3 edge1 = vertex2 - vertex1;
        vec3 edge2 = vertex3 - vertex2;
        vec3 edge3 = vertex1 - vertex3;
        vec3 c1 = Phit - vertex1;
        vec3 c2 = Phit - vertex2;
        vec3 c3 = Phit - vertex3;
        if (glm::dot(N, glm::cross(edge1, c1)) > 0
            && glm::dot(N, glm::cross(edge2, c2)) > 0
            && glm::dot(N, glm::cross(edge3, c3)) > 0) {
            found = true;
            hitVector = Phit;
            hitNormal = N;
        }
    }
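
The same plane-then-edge test can be written as a standalone function that returns early when the plane lies behind the ray, which makes it easy to check against hand-computed cases. This is a sketch under assumptions, not the course's code: `Vec3`, `cross`, `dot` and `intersectTriangle` are hypothetical stand-ins for the glm types, and the `1e-12` parallelism threshold is an arbitrary choice that depends on the scale of the scene.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };

inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }
inline double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Plane intersection followed by the inside-outside edge test.
// On a hit, writes the ray parameter to tHit and returns true.
bool intersectTriangle(Vec3 origin, Vec3 direction, Vec3 v1, Vec3 v2, Vec3 v3, double& tHit) {
    const Vec3 n = cross(v2 - v1, v3 - v1);      // unnormalised normal is enough for sign tests
    const double m = dot(n, direction);
    if (std::fabs(m) < 1e-12) return false;      // ray (nearly) parallel to the plane
    const double t = dot(n, v1 - origin) / m;    // same as -(dot(n, origin) + D) / m
    if (t < 0.0) return false;                   // plane is behind the ray: stop here
    const Vec3 p = origin + t * direction;
    if (dot(n, cross(v2 - v1, p - v1)) < 0.0) return false;
    if (dot(n, cross(v3 - v2, p - v2)) < 0.0) return false;
    if (dot(n, cross(v1 - v3, p - v3)) < 0.0) return false;
    tHit = t;
    return true;
}
```

For a triangle with vertices (-1, -1, -4), (1, -1, -4), (0, 1, -4) and a ray from the origin along (0, 0, -1), this reports a hit at t = 4; the same ray pointed along (0, 0, 1) is rejected by the `t < 0` check.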

Given that the output image is a circle, and that the same problem happens with triangles as well, my guess is the problem isn't from the intersection logic itself, but rather something wrong with the coordinate spaces or transformations. Could calculating everything in camera space be causing this?