I am trying to run a random forest model on my MacBook Air M2 (base model).

This is the code I was trying to execute:

```r
# TEST
library(dplyr) # for data manipulation
library(caret) # for machine learning

# Select relevant columns for analysis
selected_cols <- c("Year", "Month", "DayofMonth", "DayOfWeek", "DepTime", "CRSDepTime",
                   "ArrTime", "CRSArrTime", "UniqueCarrier", "FlightNum", "Origin", "Dest",
                   "Distance", "Cancelled", "CancellationCode")
flight_data <- Flight_Details_2003 %>% select(all_of(selected_cols))

# Create a delay variable by subtracting the scheduled departure time from the actual
# departure time. We will also filter out cancelled flights and negative delays
flight_data <- flight_data %>%
  mutate(DepDelay = DepTime - CRSDepTime) %>%
  filter(Cancelled == 0, DepDelay >= 0)

# Create a new data frame with the delay information by airport and date
airport_data <- flight_data %>%
  group_by(Year, Month, DayofMonth, Origin) %>%
  summarize(TotalDelay = sum(DepDelay), .groups = "drop")

# Merge the delay data with itself to create a delay-by-airport-by-date matrix
delay_matrix <- merge(airport_data, airport_data, by = c("Year", "Month", "DayofMonth"))

# Create a new column that indicates if a delay occurred in the destination airport
# due to a delay in the origin airport
delay_matrix <- delay_matrix %>%
  mutate(CascadingDelay = ifelse(TotalDelay.x > 0 & TotalDelay.y == 0, 1, 0))

# Prepare data for machine learning
# Drop unnecessary columns
delay_matrix <- delay_matrix %>% select(-c("TotalDelay.x", "TotalDelay.y"))

# Convert the outcome to a binary factor
delay_matrix$CascadingDelay <- factor(delay_matrix$CascadingDelay,
                                      levels = c(0, 1), labels = c("No", "Yes"))

# Split data into training and testing sets
set.seed(123)
train_index <- createDataPartition(delay_matrix$CascadingDelay, p = 0.7, list = FALSE)
train_data <- delay_matrix[train_index, ]
test_data <- delay_matrix[-train_index, ]

# Randomly sample a smaller portion of the training data because of this error:
# vector memory exhausted (limit reached?)
set.seed(123)
sample_size <- 10000
train_data_sample <- train_data[sample(seq_len(nrow(train_data)), size = sample_size), ]

# Train the model with a random forest on the sampled training data
model <- train(CascadingDelay ~ ., data = train_data_sample, method = "rf",
               trControl = trainControl(method = "cv", number = 10))

# Check model accuracy on the test set
confusionMatrix(predict(model, test_data), test_data$CascadingDelay)
```
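The `merge()` call joins `airport_data` to itself on `Year`, `Month` and `DayofMonth` only, so a day with n origin airports contributes n × n rows to `delay_matrix`, and this happens before any sampling. A minimal sketch for estimating that row count before merging; the helper `estimate_self_merge_rows` and the small `toy` data frame are hypothetical stand-ins, not part of the original code:

```r
# Hypothetical helper: number of rows produced by merging `df` with itself on `keys`.
# Each key combination that appears n times yields n * n rows in the result.
estimate_self_merge_rows <- function(df, keys) {
  # interaction() builds one factor from the key columns; table() counts rows per combination
  counts <- table(interaction(df[keys], drop = TRUE))
  sum(as.numeric(counts)^2)
}

# Toy stand-in for airport_data: 3 origin airports on day 1, 2 on day 2
toy <- data.frame(
  Year = 2003, Month = 1,
  DayofMonth = c(1, 1, 1, 2, 2),
  Origin = c("ORD", "OAK", "IAD", "ORD", "OAK"),
  TotalDelay = c(10, 0, 5, 3, 0)
)

estimate_self_merge_rows(toy, c("Year", "Month", "DayofMonth")) # 3^2 + 2^2 = 13
nrow(merge(toy, toy, by = c("Year", "Month", "DayofMonth")))    # also 13
```

On the real data the check would be `estimate_self_merge_rows(airport_data, c("Year", "Month", "DayofMonth"))`, run before the `merge()` line.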

I am running this on a very large dataset. This is as much information as I can show without flooding the screen:

```
> str(flight_data)
'data.frame': 3109403 obs. of 16 variables:
 $ Year            : int 2003 2003 2003 2003 2003 2003 2003 2003 2003 2003 ...
 $ Month           : int 1 1 1 1 1 1 1 1 1 1 ...
 $ DayofMonth      : int 31 2 5 1 4 5 6 7 13 16 ...
 $ DayOfWeek       : int 5 4 7 3 6 7 1 2 1 4 ...
 $ DepTime         : int 1724 1053 1035 1713 1710 1832 1710 1712 1714 1711 ...
 $ CRSDepTime      : int 1655 1035 1035 1710 1710 1710 1710 1710 1710 1710 ...
 $ ArrTime         : int 1936 1726 1636 1851 1835 1951 1843 1839 1835 1837 ...
 $ CRSArrTime      : int 1913 1634 1634 1847 1847 1847 1847 1847 1847 1847 ...
 $ UniqueCarrier   : chr "UA" "UA" "UA" "UA" ...
 $ FlightNum       : int 1017 1018 1018 1020 1020 1020 1020 1020 1020 1020 ...
 $ Origin          : chr "ORD" "OAK" "OAK" "IAD" ...
 $ Dest            : chr "MSY" "ORD" "ORD" "BOS" ...
 $ Distance        : int 837 1835 1835 413 413 413 413 413 413 413 ...
 $ Cancelled       : int 0 0 0 0 0 0 0 0 0 0 ...
 $ CancellationCode: chr NA NA NA NA ...
 $ DepDelay        : int 69 18 0 3 0 122 0 2 4 1 ...
```
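In the first row of that output, `DepTime` is 1724 and `CRSDepTime` is 1655, giving a `DepDelay` of 69. Those columns are clock times in hhmm form (17:24 and 16:55), so the real gap is 29 minutes. A minimal sketch of converting to minutes after midnight before subtracting; the helper name `hhmm_to_minutes` is hypothetical, and flights that depart after midnight relative to their schedule are not handled:

```r
# Hypothetical helper: convert an hhmm integer (e.g. 1724 = 17:24)
# into minutes after midnight.
hhmm_to_minutes <- function(hhmm) {
  (hhmm %/% 100) * 60 + hhmm %% 100
}

# First three rows of DepTime and CRSDepTime from the str() output
dep_time     <- c(1724L, 1053L, 1035L)
crs_dep_time <- c(1655L, 1035L, 1035L)

dep_delay <- hhmm_to_minutes(dep_time) - hhmm_to_minutes(crs_dep_time)
dep_delay # c(29, 18, 0)
```

Inside the pipeline this would correspond to `mutate(DepDelay = hhmm_to_minutes(DepTime) - hhmm_to_minutes(CRSDepTime))`.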

After I run the code, I am asked to restart R:

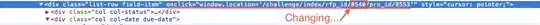

It started off as the error `vector memory exhausted (limit reached?)`. I then found another thread on Stack Overflow (R on MacOS Error: vector memory exhausted (limit reached?)) saying that I could change a setting from the terminal to increase the physical and virtual memory available to R. I did that, and now R crashes instead of showing the error.
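The fix commonly cited in that thread is setting the `R_MAX_VSIZE` environment variable in `~/.Renviron`; assuming that is the change made here, it only raises the ceiling on how much memory R is allowed to request. A base MacBook Air M2 has 8 GB of RAM, so allocations beyond that have to come from swap, and the session may be terminated rather than stopping with the error. A minimal sketch of checking what R picked up at startup and how large an object is; `x` is a hypothetical stand-in for objects like `delay_matrix` or `train_data`:

```r
# R reads R_MAX_VSIZE from the environment (e.g. ~/.Renviron) at startup;
# an empty string means the variable is not set in this session.
Sys.getenv("R_MAX_VSIZE")

# Approximate in-memory size of an object; `x` is a hypothetical example data frame.
x <- data.frame(a = seq_len(1e6), b = rnorm(1e6))
format(object.size(x), units = "Mb")
```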