Here is another option, it is based on ranking by price within each group (brand) formula 1:

=LET(in,A2:C10,A,TAKE(in,,1),C,TAKE(in,,-1),

r, MAP(A,C,LAMBDA(x,y,SUM((A=x)*(C<y))+1)),FILTER(in,r=1))

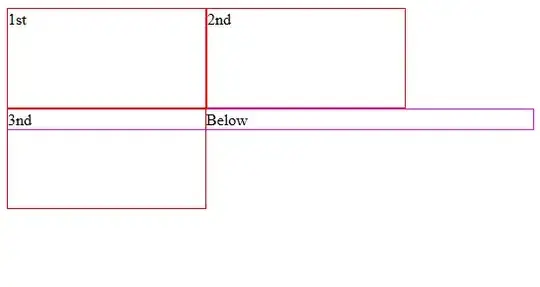

Here is the output:

Note: I changed intentionally the price of

Note: I changed intentionally the price of Ford Expeditor to the same lowest price of Mazda and also interchanged Mazda3 with Tundra for testing purposes, to make sure the order by the brand is not relevant and that it ranks by group properly.

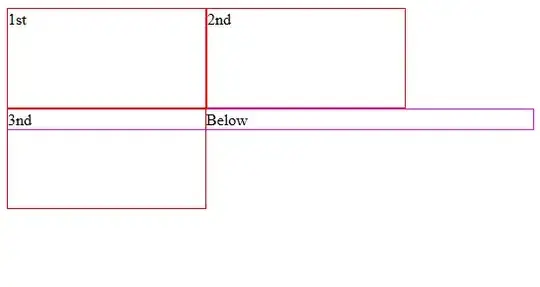

For illustrative purposes, here is the output of r (rank), so we can see how it works:

The good thing about this approach is that with minimal changes, you can change the filter criteria. For example to get the two lowest prices by brand, just filter by 2.

If you want to rank by maximum price instead, then change (C < y) with (C > y), so it is additional flexibility this approach has.

Performance

See comments on VBasic2008 and Tom Sharpe's answers. The above solution (formula 1) for a large dataset of around 10K rows, shows lower performance (54secs) compared to JvdV and Tom solutions (fraction of a second). Being JvdV the fastest one. I didn't consider VBasic2008's solution because we know from other questions/answers that REDUCE/VSTACK has a significant time consumption. Then I found the following approach (formula 2):

=LET(in,A2:C10,A,TAKE(in,,1),C,TAKE(in,,-1), ux, UNIQUE(A),m,

MINIFS(C,A,ux),FILTER(in,1-ISNA(XMATCH(A&"_"&C,ux&"_"&m))))

which is easy to understand and very fast. Concatenation may produce false positives, which is why it is a good practice to use a delimiter (_) that is not part of the dataset character set. Check JvdV answer (comment section) to this question: Finding pairs of cells in two columns.

Later I found it is possible to avoid concatenation as follows with a similar performance result as JvdV's solution (but not better than the XMATCH approach) formula 3:

=LET(in,A2:C10,A,TAKE(in,,1),C,TAKE(in,,-1),ux,UNIQUE(A),m,MINIFS(C,A,ux),

FILTER(in,MMULT(N(A=TOROW(ux))*N(C=TOROW(m)),SEQUENCE(ROWS(m),,,0))=1))

Note: If you look carefully at this approach it ends up in JvdV's approach, but using MMULT instead of BYROW, which may explain why both have a similar performance. I just added the comparison =1, but it is not really necessary.

Here testing result I did for 100K rows:

formula 2 (XMATCH) |

JvdV (BYROW) |

formula 3 (MMULT) |

0.24secs |

0.54secs |

0.53secs |

Worth to mention that even using BYROW which is a LAMBDA function, that usually is more time-consuming, it is about double, but anyway really fast too.