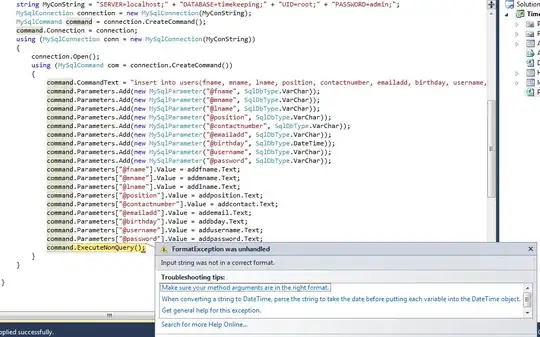

In my C# application, I have a 1280x720 image (shown in blue in the figure). The image contains an object of interest (in yellow) whose center was originally at (objectCenterX, objectCenterY) and which was originally tilted by objectAngle degrees.

The image was then rotated and translated so that the object is straight and centered in the output frame (in red; also 1280x720). This caused black regions to appear wherever the transformed image no longer covers the frame.

Now I am trying to figure out the minimum factor by which I should uniformly scale up (zoom in) the image around the object's center to get rid of the black regions (in other words, zoom until the image fills the red frame).
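One way to set this up, sketched under the assumption that straightening rotated the image by -objectAngle about the object's center (flip the sign of θ if your rotation went the other way): map each corner of the red frame back into original-image coordinates and require it to land inside the original image. With $W \times H$ the image size, $(c_x, c_y)$ = (objectCenterX, objectCenterY), θ = objectAngle in radians, and $s$ the zoom factor, a frame point at offset $(d_x, d_y)$ from the frame center samples the source at

$$
\begin{pmatrix} x \\ y \end{pmatrix}
= \begin{pmatrix} c_x \\ c_y \end{pmatrix}
+ \frac{1}{s}\begin{pmatrix} u \\ v \end{pmatrix},
\qquad
\begin{pmatrix} u \\ v \end{pmatrix}
= \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}
\begin{pmatrix} d_x \\ d_y \end{pmatrix}.
$$

Because this map is affine and the source rectangle is convex, it is enough to check the four corners $(d_x, d_y) = (\pm W/2, \pm H/2)$. Requiring $0 \le x \le W$ and $0 \le y \le H$ at each corner gives

$$
s \ge \frac{u}{W - c_x}\ (u > 0), \quad
s \ge \frac{-u}{c_x}\ (u < 0), \quad
s \ge \frac{v}{H - c_y}\ (v > 0), \quad
s \ge \frac{-v}{c_y}\ (v < 0),
$$

and the minimum zoom $s_{\min}$ is the largest of these bounds over all four corners (clamped to 1 if you never want to zoom out).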

EDIT: I am interested in how to compute the required scale factor.
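A minimal C# sketch of computing the required scale factor follows. `ZoomToFill` and `RequiredScale` are hypothetical names, and the code assumes the straightening rotation was by -objectAngle about the object's center (pass `-objectAngle` if your rotation used the opposite sign). It maps the four corners of the red frame back into the original image and takes the largest zoom that keeps every corner inside it; checking corners is enough because the frame and the original image are both convex.

```csharp
using System;

// Hypothetical helper (not part of any library): minimum zoom factor that removes
// the black regions after an object has been straightened and centered.
public static class ZoomToFill
{
    // Assumption: the straightened frame was produced by rotating the source image by
    // -angleDeg about (cx, cy) and moving (cx, cy) to the frame center. If your
    // straightening rotation used the opposite sign, pass -angleDeg instead.
    public static double RequiredScale(double width, double height,
                                       double cx, double cy, double angleDeg)
    {
        if (cx <= 0 || cx >= width || cy <= 0 || cy >= height)
            throw new ArgumentException("The object center must lie strictly inside the image.");

        double a = angleDeg * Math.PI / 180.0;
        double cos = Math.Cos(a), sin = Math.Sin(a);
        double hw = width / 2.0, hh = height / 2.0;
        double scale = 1.0; // only zoom in, never out

        // Corners of the output (red) frame, relative to its center.
        double[,] corners = { { -hw, -hh }, { hw, -hh }, { hw, hh }, { -hw, hh } };

        for (int i = 0; i < 4; i++)
        {
            double dx = corners[i, 0], dy = corners[i, 1];

            // Offset of this corner from the object's center in source-image axes,
            // before zooming (inverse of the straightening rotation).
            double u = dx * cos - dy * sin;
            double v = dx * sin + dy * cos;

            // After zooming by s the corner samples the source at (cx + u/s, cy + v/s),
            // which must stay inside [0, width] x [0, height].
            if (u > 0) scale = Math.Max(scale, u / (width - cx));
            if (u < 0) scale = Math.Max(scale, -u / cx);
            if (v > 0) scale = Math.Max(scale, v / (height - cy));
            if (v < 0) scale = Math.Max(scale, -v / cy);
        }
        return scale;
    }
}
```

Usage would look like `double s = ZoomToFill.RequiredScale(1280, 720, objectCenterX, objectCenterY, objectAngle);`, after which the straightened image is scaled by `s` about the frame center, where the object now sits. As a sanity check, for an object that was already centered and |objectAngle| ≤ 90°, this reduces to cos θ + (W/H)·|sin θ| for a landscape frame.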