In Python, how big can a list get? I need a list of about 12000 elements. Will I still be able to run list methods such as sorting, etc?

10 Answers

According to the source code, the maximum size of a list is PY_SSIZE_T_MAX/sizeof(PyObject*).

PY_SSIZE_T_MAX is defined in pyport.h to be ((size_t) -1)>>1

On a regular 32bit system, this is (4294967295 / 2) / 4 or 536870912.

Therefore the maximum size of a python list on a 32 bit system is 536,870,912 elements.

As long as the number of elements you have is equal or below this, all list functions should operate correctly.

-

5Why is `sizeof(PyObject*) == 4?`? What does this represent? – Matt Dec 01 '15 at 16:05

-

4@Matt, is the number of bytes of a single `PyObject *`. That thing is a so called pointer(you recognize them because of the asterix at the end) . Pointers are 4 bytes long and store a memory address to the allocated object. They are "only" 4 bytes long because with 4 bytes you can address every element in a memory of nowadays computers. – Antonio Ragagnin Dec 02 '15 at 16:17

-

1It's worth noting (as Álvaro Justen's answer indicates) that on other machines, notably those running 64-bit systems, the value of `PY_SSIZE_T_MAX` can very greatly. – ClydeTheGhost Aug 08 '16 at 18:46

-

1@ClydeTheGhost, could you specify whether those running 64-bit systems also can have a lower maximum size than the 536,870,912 elements? Or that they can vary greatly, yet always have a maximum size that is equal to- or larger than 536,870,912 elements? – a.t. Jun 16 '19 at 17:53

-

1@a.t. The maximum for a 64-bit system will always be equal or larger than for a 32-bit system. – ClydeTheGhost Jun 21 '19 at 20:04

-

It’s (2^31-1)/4 for 32-bit systems but (2^63-1)/8 for 64-bit systems. (Roughly a half billion on 32-bit and roughly a billion billions on 64-bit) – thorr18 Sep 04 '20 at 20:58

As the Python documentation says:

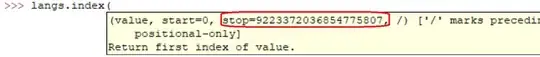

sys.maxsize

The largest positive integer supported by the platform’s Py_ssize_t type, and thus the maximum size lists, strings, dicts, and many other containers can have.

In my computer (Linux x86_64):

>>> import sys

>>> print sys.maxsize

9223372036854775807

- 1,943

- 1

- 17

- 17

-

-

15@ldgorman,`sys.maxsize` is the answer to the question. Different architectures support different maxima. – Simon Kuang Feb 02 '15 at 04:39

-

1Does the value returned by sys.maxsize reflect the amount of available RAM in the computer in any way? – GeoJohn Mar 23 '15 at 20:11

-

39223372036854775807 elements? Really? This varies greatly from the most upvoted answer as well. – akki Dec 02 '16 at 15:01

-

15@akki the accepted answer is referring to a 32 bit system. Since it is 2016, I will assume you are on a 64 bit system and the answer is therefore correct – Brian Leach Jan 04 '17 at 22:06

-

5

-

6Sys.maxsize should give you 2^31 - 1 on a 32-bit platform and 2^63 - 1 on a 64-bit platform. (2147483647 or 9223372036854775807, respectively) However, because each pointer on the 32 bit list takes up 4 bytes or on a 64 bit it's 8 bytes, Python would give you an error if you attempt to make a list larger than maxsize/8 on a 64-bit system or maxsize/4 on a 32-bit system. – thorr18 Sep 04 '20 at 20:51

Sure it is OK. Actually you can see for yourself easily:

l = range(12000)

l = sorted(l, reverse=True)

Running the those lines on my machine took:

real 0m0.036s

user 0m0.024s

sys 0m0.004s

But sure as everyone else said. The larger the array the slower the operations will be.

- 111,714

- 37

- 173

- 152

-

21Timing this way can be misleading -- most of the time is spent starting up the Python interpreter. A better way is: python -m timeit.py "l=range(12000); l=sorted(l, reverse=True)". On my machine this gives about 1/20th of the time for this example. – dF. May 12 '09 at 23:29

-

5@dF, You are right about accuracy. Thanks for noting that. I just wanted to prove a point. And the example proves it. – Nadia Alramli May 12 '09 at 23:34

-

14@dF: Awesome! 0.024s was much too long for me and I'm glad I can stop worrying about that now. – Thomas Edleson Feb 19 '11 at 17:50

In casual code I've created lists with millions of elements. I believe that Python's implementation of lists are only bound by the amount of memory on your system.

In addition, the list methods / functions should continue to work despite the size of the list.

If you care about performance, it might be worthwhile to look into a library such as NumPy.

- 21,988

- 13

- 81

- 109

- 1,053

- 1

- 10

- 16

It varies for different systems (depends on RAM). The easiest way to find out is

import six six.MAXSIZE 9223372036854775807

This gives the max size of list and dict too ,as per the documentation

- 21,988

- 13

- 81

- 109

- 2,445

- 1

- 14

- 12

12000 elements is nothing in Python... and actually the number of elements can go as far as the Python interpreter has memory on your system.

- 11,479

- 5

- 49

- 73

Performance characteristics for lists are described on Effbot.

Python lists are actually implemented as vector for fast random access, so the container will basically hold as many items as there is space for in memory. (You need space for pointers contained in the list as well as space in memory for the object(s) being pointed to.)

Appending is O(1) (amortized constant complexity), however, inserting into/deleting from the middle of the sequence will require an O(n) (linear complexity) reordering, which will get slower as the number of elements in your list.

Your sorting question is more nuanced, since the comparison operation can take an unbounded amount of time. If you're performing really slow comparisons, it will take a long time, though it's no fault of Python's list data type.

Reversal just takes the amount of time it required to swap all the pointers in the list (necessarily O(n) (linear complexity), since you touch each pointer once).

- 21,988

- 13

- 81

- 109

- 69,512

- 53

- 163

- 191

I got this from here on a x64 bit system: Python 3.7.0b5 (v3.7.0b5:abb8802389, May 31 2018, 01:54:01) [MSC v.1913 64 bit (AMD64)] on win32

- 443

- 2

- 5

- 15

-

6This would be a great answer if you expanded a bit on the details and how others could find their own limit. – shayaan Nov 18 '18 at 22:54

I'd say you're only limited by the total amount of RAM available. Obviously the larger the array the longer operations on it will take.

- 10,861

- 13

- 46

- 72

-

5Generally true, but not all of them -- appending remains amortized constant time independent of the size of the array. – cdleary May 13 '09 at 00:25

There is no limitation of list number. The main reason which causes your error is the RAM. Please upgrade your memory size.

-

14-1 because it does not actually answer the question, and is actually misleading because (as shown by other answers) list does indeed have a maximum size. – ClydeTheGhost Aug 08 '16 at 18:48