Approximate nearest neighbor search (ANNS) is a relaxed form of nearest neighbor search (NNS), also known as proximity search, similarity search, or closest point search: the optimization problem of finding the points in a metric space that are closest to a given query.

ANN search is of interest because exact NNS can become very costly in execution time when the dataset is large, both in the number of points and, especially, in the number of dimensions (the curse of dimensionality).

As a result, we accept a trade-off between speed and accuracy: the faster the search, the less accurate the result. In other words, a relatively fast search may not find the exact NN, only a point close to it.

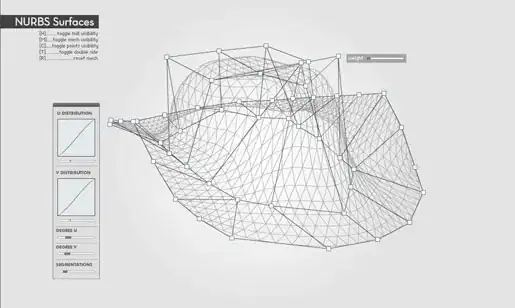

For example, in the picture above, the point marked with an asterisk is the exact NN, but the ANN search may return the point numbered 2, which is not the actual closest point to the query, only an approximation.
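The same effect can be reproduced in a few lines of code. The sketch below is only an illustration, not a production ANN index: it compares a brute-force exact search with a deliberately simple approximation that hashes 2D points into grid cells and inspects only the query's own cell. The function names (`exact_nn`, `build_grid`, `approx_nn`), the cell size, and the sample points are all assumptions made for this example.

```python
import math
from collections import defaultdict


def exact_nn(points, query):
    """Exact search: compare the query against every point, O(n) distances."""
    return min(points, key=lambda p: math.dist(p, query))


def cell_of(point, cell):
    """Map a 2D point to the integer coordinates of its grid cell."""
    return (math.floor(point[0] / cell), math.floor(point[1] / cell))


def build_grid(points, cell):
    """Index the points by grid cell (illustrative stand-in for an ANN index)."""
    grid = defaultdict(list)
    for p in points:
        grid[cell_of(p, cell)].append(p)
    return grid


def approx_nn(grid, cell, query):
    """Approximate search: look only at points in the query's own cell.

    Faster than scanning everything, but it misses closer points that
    fall just across a cell boundary. Returns None for an empty cell.
    """
    bucket = grid.get(cell_of(query, cell))
    if not bucket:
        return None
    return min(bucket, key=lambda p: math.dist(p, query))


# Hypothetical data: the true nearest neighbor sits in the adjacent cell.
points = [(0.2, 0.1), (0.9, 0.5), (1.05, 0.5)]
query = (0.99, 0.5)
cell = 1.0

grid = build_grid(points, cell)
print("exact: ", exact_nn(points, query))       # (1.05, 0.5)
print("approx:", approx_nn(grid, cell, query))  # (0.9, 0.5)
```

Here the approximate search returns `(0.9, 0.5)`, which is close to the query but not the closest point, because the exact NN `(1.05, 0.5)` lies in a neighboring cell that was never examined. Checking neighboring cells as well would raise accuracy at the cost of more distance computations, which is the speed and accuracy trade-off in miniature.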

For more, visit wikipedia-ANN.