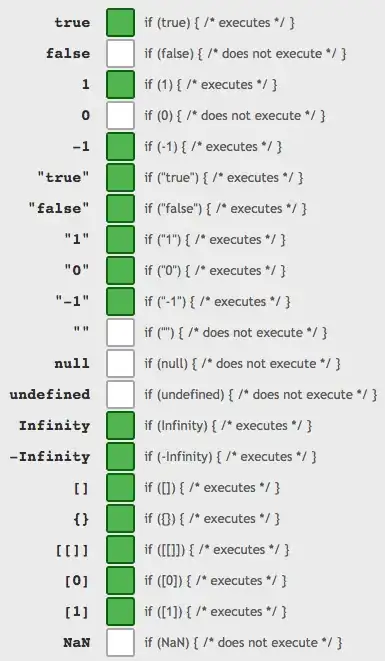

The reason your image can be represented in a contour plot is that it is clearly a pseudocolor image, that is, an image that uses the full RGB color spectrum to represent a single variable. Contour plots also represent data that have a single variable that determines the color (i.e., the Z axis), and therefore you can probably represent your image data as a contour plot as well.

This is the reason I suggested that you use a contour plot in the first place. (What you're actually asking for in this question, though, does not exist in general: there is no generally valid way to convert a color image into a contour plot, since a color image has three independent channels (R, G, and B) while a contour plot has only one variable (the z-axis). The conversion only works for pseudocolor images.)

To specifically solve your problem:

1) If you have the z-axis data that's used to create the pseudocolor image that you show, just use this data in the contour plot. This is the best solution.

2) If you don't have the z-data, it's more of a hassle, since you need to map each color in the image back to a z-value and then put those values into the contour plot. The image you show is almost certainly using the colormap `matplotlib.cm.jet`, and I can't see a better way to invert it than what unutbu describes here.
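To make option 2 concrete, here is a minimal sketch of one way to invert the colormap: a nearest-color lookup against a table of jet colors. It is not necessarily the method from the linked answer. The names `invert_jet` and `n_levels` are made up for illustration, and the sketch assumes the image really was drawn with an unmodified jet colormap and has not been smoothed or lossily compressed.

```python
import numpy as np
from matplotlib import cm


def invert_jet(rgb, n_levels=256):
    """Map jet-colored pixels back to approximate z-values in [0, 1].

    Hypothetical helper: assumes the pixels come from an unmodified jet colormap.
    """
    rgb = np.asarray(rgb)
    if rgb.dtype == np.uint8:
        rgb = rgb / 255.0  # 8-bit image data -> floats in [0, 1]
    rgb = rgb[..., :3]  # drop an alpha channel if present

    # Lookup table: the RGB color jet assigns to each of n_levels z-values.
    levels = np.linspace(0.0, 1.0, n_levels)
    table = cm.jet(levels)[:, :3]

    # For every pixel, find the table entry with the smallest squared
    # RGB distance. This builds an (n_pixels, n_levels) array, so very
    # large images may need to be processed in chunks.
    pixels = rgb.reshape(-1, 3)
    dist = ((pixels[:, np.newaxis, :] - table[np.newaxis, :, :]) ** 2).sum(axis=2)
    return levels[dist.argmin(axis=1)].reshape(rgb.shape[:-1])


# Round trip on a left-to-right ramp of z-values.
z_true = np.repeat(np.linspace(0, 1, 100)[np.newaxis, :], 20, axis=0)
rgba = cm.jet(z_true)       # the colors a jet pseudocolor image would use
z_back = invert_jet(rgba)   # approximate z-values recovered from the colors
```

The recovered array can then be passed to `plt.contour` like any other z-data. Note that the result is only scaled to [0, 1]; recovering the original units requires knowing the data range the colormap was applied to.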

In the end, you will need to understand the difference between a contour plot and an image to get the details to work.

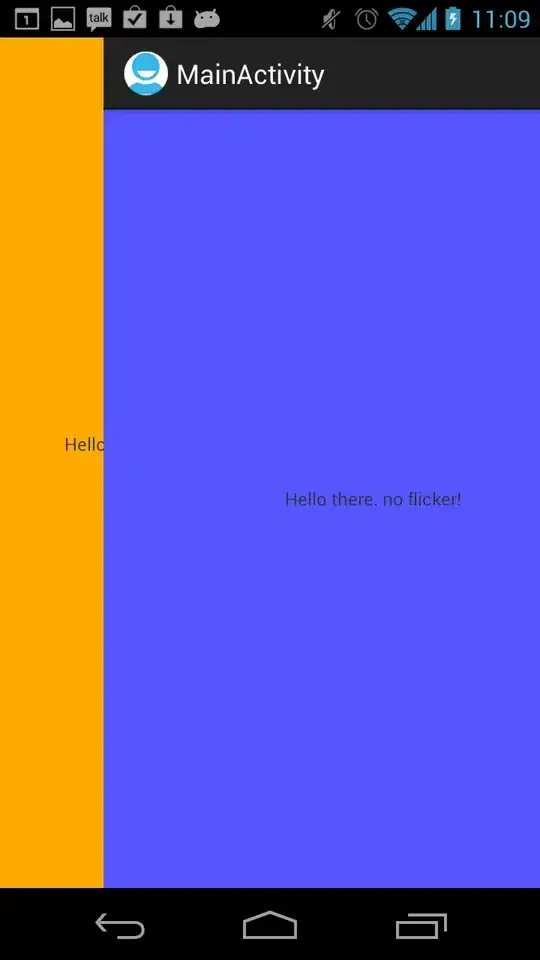

Demo of why `convert` doesn't work:

Here I run through a full test case using a ramp of z-values from left to right. As is clear, the z-values are now totally messed up because the values that were the largest are now the smallest, etc.

That is, the goal is that fig. 2 matches fig. 4, but they are very different. The problem, of course, is that convert doesn't correctly map jet to the original set of z-values.

    import numpy as np
    import matplotlib.pyplot as plt
    from PIL import Image  # the bare `import Image` only works with the old PIL package

    fig, axs = plt.subplots(4, 1)

    # ramp of z-values from 0 to 1, left to right, repeated over 20 rows
    x = np.repeat(np.linspace(0, 1, 100)[np.newaxis, :], 20, axis=0)

    axs[0].imshow(x, cmap=plt.cm.gray)
    axs[0].set_title('1: original z-values as grayscale')

    d = axs[1].imshow(x, cmap=plt.cm.jet)
    axs[1].set_title('2: original z-values as jet')

    d.write_png('temp01.png')  # write to a file

    im = Image.open('temp01.png').convert('L')  # use 'convert' on image to get grayscale
    data = np.asarray(im)  # make image into numpy data
    axs[2].imshow(data, cmap=plt.cm.gray)
    axs[2].set_title("3: 'convert' applied to jet image")

    img = Image.open('temp01.png').convert('L')
    z = np.asarray(img)
    mydata = z[::1, ::1]  # I don't know what this is here for
    axs[3].imshow(mydata, interpolation='nearest', cmap=plt.cm.jet)
    axs[3].set_title("4: the code that Jake French suggests")

    plt.show()
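The same effect can be checked numerically without writing any files. The sketch below assumes that `convert('L')` computes the weighted sum 0.299 R + 0.587 G + 0.114 B (the ITU-R 601-2 luma transform that Pillow documents for this mode) and applies it to floating-point jet colors, ignoring 8-bit rounding. The variable names are only for illustration.

```python
import numpy as np
from matplotlib import cm

z = np.linspace(0, 1, 256)     # ramp of z-values
rgb = cm.jet(z)[:, :3]         # jet color for each z-value
luma = rgb @ np.array([0.299, 0.587, 0.114])  # weighted sum of R, G, B

# If the grayscale value were a valid stand-in for z, it would rise
# steadily along the ramp. For jet it rises and then falls again.
is_monotonic = bool(np.all(np.diff(luma) >= 0))
brightest = z[luma.argmax()]   # z-value whose jet color looks brightest
```

Here `is_monotonic` comes out `False` and `brightest` lies in the interior of the ramp, so two quite different z-values can end up with the same gray level. That is why the grayscale image cannot be used in place of the original z-data.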

But, it's not so hard to do this correctly, as I suggest above.