I have run a benchmark on different data structures very recently at my company so I feel I need to drop a word. It is very complicated to benchmark something correctly.

Benchmarking

On the web, we rarely (if ever) find a well-engineered benchmark. Until today I have only found benchmarks done the journalist way (pretty quickly, with dozens of variables swept under the carpet).

1) You need to consider cache warming

Most people running benchmarks are afraid of timer discrepancy, so they run their code thousands of times and measure the total time. They are merely careful to use the same number of iterations for every operation, and then consider the results comparable.

The truth is that in the real world this makes little sense, because your cache will not be warm and your operation will likely be called just once. Therefore you need to benchmark using RDTSC, and time each operation by calling it only once.

Intel has published a paper describing how to use RDTSC (using a cpuid instruction to flush the pipeline, and calling it at least 3 times at the beginning of the program to stabilize it).
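To make the contrast concrete, here is a minimal sketch of the two measurement styles described above. It uses std::chrono::steady_clock as a portable stand-in for RDTSC (an assumption made only so the sketch compiles everywhere; its resolution is far coarser than a cycle counter), and the names time_single_call_ns and time_averaged_ns are hypothetical.

```cpp
#include <chrono>
#include <cstdint>

// Nanoseconds elapsed while running `op` exactly once: the "cold" style,
// where nothing has warmed the caches for this operation beforehand.
template <typename Op>
std::int64_t time_single_call_ns(Op op) {
    const auto start = std::chrono::steady_clock::now();
    op();
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::nanoseconds>(stop - start).count();
}

// Average nanoseconds per call over `repeats` back-to-back calls: the
// "thousands of runs" style, where every call after the first runs warm.
template <typename Op>
std::int64_t time_averaged_ns(Op op, int repeats) {
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < repeats; ++i) op();
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::nanoseconds>(stop - start).count() / repeats;
}
```

Comparing the two numbers for the same operation gives a rough idea of how much the averaged figure hides.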

2) RDTSC accuracy measure

I also recommend doing this:

    #include <algorithm>  // std::min, std::max
    #include <climits>    // ULLONG_MAX
    #include <cstdio>     // printf

    typedef unsigned long long u64; // assumed definition; the original relies on a u64 type defined elsewhere

    u64 g_correctionFactor; // number of clocks to offset after each measurement to remove the overhead of the measurer itself.
    u64 g_accuracy;

    static u64 const errormeasure = ~((u64)0);

    #ifdef _MSC_VER

    #include <intrin.h>   // __cpuid, __rdtsc
    #pragma intrinsic(__rdtsc)

    inline u64 GetRDTSC()
    {
        int a[4];
        __cpuid(a, 0x80000000); // serializing instruction: flushes the out-of-order pipeline
        return __rdtsc();
    }

    inline void WarmupRDTSC()
    {
        int a[4];
        __cpuid(a, 0x80000000); // warm up cpuid
        __cpuid(a, 0x80000000);
        __cpuid(a, 0x80000000);

        // measure the measurer's overhead with the measurer itself
        u64 minDiff = ULLONG_MAX;
        u64 maxDiff = 0; // this is going to help calculate our PRECISION ERROR MARGIN
        for (int i = 0; i < 80; ++i)
        {
            u64 tick1 = GetRDTSC();
            u64 tick2 = GetRDTSC();
            minDiff = std::min(minDiff, tick2 - tick1); // take many samples, keep the smallest one ever seen
            maxDiff = std::max(maxDiff, tick2 - tick1);
        }

        g_correctionFactor = minDiff;
        printf("Correction factor %llu clocks\n", g_correctionFactor);

        g_accuracy = maxDiff - minDiff;
        printf("Measurement Accuracy (in clocks) : %llu\n", g_accuracy);
    }

    #endif

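This is a discrepancy measurer. It takes the minimum of all measured values as the correction factor, so that subtracting it from a later measurement does not occasionally produce a negative result, which shows up as a huge value of around 10**19 in an unsigned 64-bit integer.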
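The listing above computes the correction factor but does not show it being applied. A minimal sketch of one way to do that follows; MeasureOnce is a hypothetical helper, not part of the original code. It takes the tick source as a parameter (GetRDTSC would be passed on MSVC) so that the sketch itself stays portable.

```cpp
#include <cstdint>

typedef std::uint64_t u64;

// Cycles spent in `op`, called exactly once. `now` is any callable returning
// an increasing tick count; `correction` is the measurer's own overhead
// (the role played by g_correctionFactor).
template <typename TickSource, typename Op>
u64 MeasureOnce(TickSource now, Op op, u64 correction) {
    const u64 start = now();
    op();
    const u64 stop = now();
    const u64 raw = stop - start;
    // Clamp instead of subtracting blindly: an unsigned result "below zero"
    // would wrap around to a value close to 2^64.
    return raw > correction ? raw - correction : 0;
}
```

Any result smaller than the measured accuracy (g_accuracy) should be read as noise rather than as a real difference.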

Notice the use of intrinsics rather than inline assembly. First, inline assembly is no longer supported everywhere (MSVC, for example, does not accept it in x64 builds). Worse, the compiler creates a full ordering barrier around inline assembly because it cannot statically analyze what is inside, which is a problem when benchmarking real-world code, especially when calling it just once. An intrinsic is better suited here because it does not prevent the compiler from freely reordering instructions.

3) parameters

The last problem is people usually test for too few variations of the scenario.

A container's performance is affected by:

1. the allocator

2. the size of the contained type

3. the cost of the copy, assignment, move, and construction operations of the contained type

4. the number of elements in the container (size of the problem)

5. whether the type has trivial versions of the operations in point 3

6. whether the type is POD

Point 1 is important because containers do allocate from time to time, and it matters a lot if they allocate using the CRT "new" or some user-defined operation, like pool allocation or freelist or other...

(For people interested in point 1, join the mystery thread on gamedev about system allocator performance impact.)

Point 2 is because some containers (say A) will lose time copying stuff around, and the bigger the type the bigger the overhead. The problem is that when comparing to another container B, A may win over B for small types, and lose for larger types.

Point 3 is the same as point 2, except it multiplies the cost by some weighting factor.

Point 4 is a question of big O mixed with cache issues. Some bad-complexity containers can largely outperform low-complexity containers for a small number of elements (like vector vs. map: the vector's cache locality is good, whereas map fragments the memory). Then, at some crossover point, they lose, because the overall contained size starts to "leak" to main memory and cause cache misses, and because the asymptotic complexity starts to be felt.

Point 5 is about compilers being able to elide operations that are empty or trivial at compile time. This can greatly optimize some operations: because the containers are templated, each type has its own performance profile.

Point 6 is similar to point 5: PODs can benefit from the fact that copy construction is just a memcpy, and some containers can have a specific implementation for these cases, using partial template specializations or SFINAE to select algorithms according to traits of T.

About the flat map

Apparently, the flat map is a sorted vector wrapper, like Loki AssocVector, but with some supplementary modernizations coming with C++11, exploiting move semantics to accelerate insert and delete of single elements.
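For readers who have not seen one, the sorted vector idea can be sketched as follows. This is a deliberately minimal, hypothetical SortedVectorMap, not the Boost or Loki implementation: it only shows where the O(log N) search and the O(N) element moves come from.

```cpp
#include <algorithm>
#include <utility>
#include <vector>

template <typename K, typename V>
class SortedVectorMap {
public:
    // O(log N) binary search for the slot, then O(N) moves to make room.
    // Returns false if the key was already present.
    bool insert(const K& key, V value) {
        auto it = std::lower_bound(items_.begin(), items_.end(), key, KeyLess());
        if (it != items_.end() && !(key < it->first)) return false;
        items_.emplace(it, key, std::move(value));
        return true;
    }

    // O(log N) binary search; returns nullptr when the key is absent.
    const V* find(const K& key) const {
        auto it = std::lower_bound(items_.begin(), items_.end(), key, KeyLess());
        if (it == items_.end() || key < it->first) return nullptr;
        return &it->second;
    }

    // Contiguous storage, always sorted by key.
    const std::vector<std::pair<K, V>>& items() const { return items_; }

private:
    struct KeyLess {
        bool operator()(const std::pair<K, V>& item, const K& key) const {
            return item.first < key;
        }
    };
    std::vector<std::pair<K, V>> items_;
};
```

Iteration and lookup touch contiguous memory, which is the cache-locality advantage; the price is paid on every insertion that does not land at the back.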

This is still an ordered container. Most people usually don't need the ordering part, therefore the existence of unordered...

Have you considered that maybe you need a flat_unorderedmap? That would be something like google::sparse_map or something like that: an open address hash map.

The problem of open address hash maps is that at the time of rehash they have to copy everything around to the new extended flat land, whereas a standard unordered map just has to recreate the hash index, while the allocated data stays where it is. The disadvantage of course is that the memory is fragmented like hell.

An open address hash map rehashes when the number of stored elements exceeds the size of the bucket vector multiplied by the load factor.

A typical load factor is 0.8, so you need to care about that. If you can pre-size your hash map before filling it, always pre-size to: intended_filling * (1/0.8) + epsilon. This guarantees that you never have to spuriously rehash and recopy everything during filling.
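The pre-sizing arithmetic can be written as a small helper. This is only a sketch: PresizedBucketCount is a hypothetical name, the "epsilon" is taken to be one spare bucket, and a real open address map may additionally round the result up (for example to a power of two).

```cpp
#include <cmath>
#include <cstddef>

// Smallest bucket count that lets `intended_filling` elements fit without
// triggering a rehash, plus one spare bucket as the "epsilon".
inline std::size_t PresizedBucketCount(std::size_t intended_filling,
                                       double max_load_factor) {
    const double needed = static_cast<double>(intended_filling) / max_load_factor;
    return static_cast<std::size_t>(std::ceil(needed)) + 1;
}
```

For 8000 intended elements and a load factor of 0.8 this asks for slightly more than 10000 buckets, so the 8000th insertion still stays under the rehash threshold.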

The advantage of closed address maps (std::unordered..) is that you don't have to care about those parameters.

But the boost::flat_map is an ordered vector; therefore, lookups will always have O(log(N)) asymptotic complexity, which is worse than the open address hash map (amortized constant time). You should consider that as well.

Benchmark results

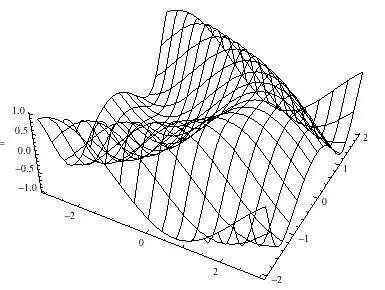

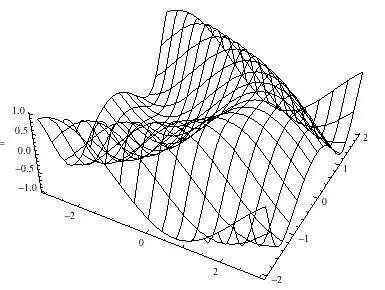

This is a test involving different maps (with int key and __int64/somestruct as value) and std::vector.

tested types information:

typeid=__int64 . sizeof=8 . ispod=yes

typeid=struct MediumTypePod . sizeof=184 . ispod=yes
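Lines like these can be produced with a few type traits. The sketch below is an assumption about how such a report might be generated, not the author's code: MediumPodSketch is a hypothetical 184-byte stand-in for MediumTypePod (whose real members are not shown), and the text returned by typeid(T).name() is implementation-defined, so it will differ between compilers.

```cpp
#include <cstddef>
#include <string>
#include <type_traits>
#include <typeinfo>

// Hypothetical 184-byte POD; the real MediumTypePod layout is unknown.
struct MediumPodSketch {
    char payload[184];
};

// Builds one "typeid=... . sizeof=... . ispod=..." line for T.
template <typename T>
std::string DescribeType() {
    // POD means trivial and standard-layout.
    const bool is_pod = std::is_trivial<T>::value && std::is_standard_layout<T>::value;
    return std::string("typeid=") + typeid(T).name() +
           " . sizeof=" + std::to_string(sizeof(T)) +
           " . ispod=" + (is_pod ? "yes" : "no");
}
```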

Insertion

EDIT:

My previous results included a bug: they actually tested ordered insertion, which exhibited a very fast behavior for the flat maps.

I left those results later down this page because they are interesting.

This is the correct test:

I have checked the implementation: there is no such thing as a deferred sort implemented in the flat maps here. Each insertion sorts on the fly, therefore this benchmark exhibits the asymptotic tendencies:

map: O(N * log(N))

hashmaps: O(N)

vector and flatmaps: O(N * N)
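The quadratic term for the vector and the flat maps comes from shifting elements, and it can be counted without any timer. The sketch below (CountShifts is a hypothetical helper) inserts keys one by one into a sorted std::vector and adds up how many existing elements each insertion has to move.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Inserts `keys` one by one into a sorted vector and returns the total
// number of existing elements that had to be shifted to make room.
inline std::size_t CountShifts(const std::vector<int>& keys) {
    std::vector<int> sorted;
    std::size_t shifts = 0;
    for (int key : keys) {
        auto pos = std::lower_bound(sorted.begin(), sorted.end(), key);
        shifts += static_cast<std::size_t>(sorted.end() - pos);
        sorted.insert(pos, key);
    }
    return shifts;
}
```

Ascending keys always land at the back and shift nothing, descending keys shift N(N-1)/2 elements in total, and random keys shift about half of that on average, which is still O(N * N).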

Warning: hereafter, the 2 tests for std::map and both flat_maps are buggy and actually test ordered insertion (vs. random insertion for the other containers; yes, it's confusing, sorry):

We can see that ordered insertion results in back pushing and is extremely fast. However, from the non-charted results of my benchmark, I can also say that this is not near the absolute optimum for back-insertion. At 10k elements, perfect back-insertion is obtained on a pre-reserved vector, which takes 3 million cycles; we observe 4.8M here for the ordered insertion into the flat_map (therefore 160% of the optimal).

Analysis: remember this is 'random insert' for the vector, so the massive 1 billion cycles come from having to shift half (on average) of the data upward (one element at a time) at each insertion.


Random search of 3 elements (clocks renormalized to 1)

in size = 100

in size = 10000

Iteration

over size 100 (only MediumPod type)

over size 10000 (only MediumPod type)

Final grain of salt

In the end, I wanted to come back to "Benchmarking §3 Pt1" (the system allocator). In a recent experiment on the performance of an open address hash map I developed, I measured a performance gap of more than 3000% between Windows 7 and Windows 8 on some std::unordered_map use cases (discussed here).

This makes me want to warn the reader about the above results (they were made on Win7): your mileage may vary.
