Chebyshev polynomials, as mentioned in another answer, are the polynomials where the largest difference between the function and the polynomial is as small as possible. That is an excellent start.

In some cases, the maximum error is not what you are interested in, but the maximum relative error. For example for the sine function, the error near x = 0 should be much smaller than for larger values; you want a small relative error. So you would calculate the Chebyshev polynomial for sin x / x, and multiply that polynomial by x.

Next you have to figure out how to evaluate the polynomial. You want to evaluate it in such a way that the intermediate values are small and therefore rounding errors are small. Otherwise the rounding errors might become a lot larger than errors in the polynomial. And with functions like the sine function, if you are careless then it may be possible that the result that you calculate for sin x is greater than the result for sin y even when x < y. So careful choice of the calculation order and calculation of upper bounds for the rounding error are needed.

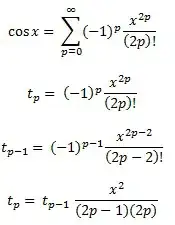

For example, sin x = x - x^3/6 + x^5 / 120 - x^7 / 5040... If you calculate naively sin x = x * (1 - x^2/6 + x^4/120 - x^6/5040...), then that function in parentheses is decreasing, and it will happen that if y is the next larger number to x, then sometimes sin y will be smaller than sin x. Instead, calculate sin x = x - x^3 * (1/6 - x^2 / 120 + x^4/5040...) where this cannot happen.

When calculating Chebyshev polynomials, you usually need to round the coefficients to double precision, for example. But while a Chebyshev polynomial is optimal, the Chebyshev polynomial with coefficients rounded to double precision is not the optimal polynomial with double precision coefficients!

For example for sin (x), where you need coefficients for x, x^3, x^5, x^7 etc. you do the following: Calculate the best approximation of sin x with a polynomial (ax + bx^3 + cx^5 + dx^7) with higher than double precision, then round a to double precision, giving A. The difference between a and A would be quite large. Now calculate the best approximation of (sin x - Ax) with a polynomial (b x^3 + cx^5 + dx^7). You get different coefficients, because they adapt to the difference between a and A. Round b to double precision B. Then approximate (sin x - Ax - Bx^3) with a polynomial cx^5 + dx^7 and so on. You will get a polynomial that is almost as good as the original Chebyshev polynomial, but much better than Chebyshev rounded to double precision.

Next you should take into account the rounding errors in the choice of polynomial. You found a polynomial with minimum error in the polynomial ignoring rounding error, but you want to optimise polynomial plus rounding error. Once you have the Chebyshev polynomial, you can calculate bounds for the rounding error. Say f (x) is your function, P (x) is the polynomial, and E (x) is the rounding error. You don't want to optimise | f (x) - P (x) |, you want to optimise | f (x) - P (x) +/- E (x) |. You will get a slightly different polynomial that tries to keep the polynomial errors down where the rounding error is large, and relaxes the polynomial errors a bit where the rounding error is small.

All this will get you easily rounding errors of at most 0.55 times the last bit, where +,-,*,/ have rounding errors of at most 0.50 times the last bit.