Most of the spam in Google Analytics never accesses your site, so you can't block it with any server-side solution.

Ghost spam hits GA directly and usually shows up for only a few days before disappearing. That's why some people think they blocked it from the .htaccess file, when it is just a coincidence.

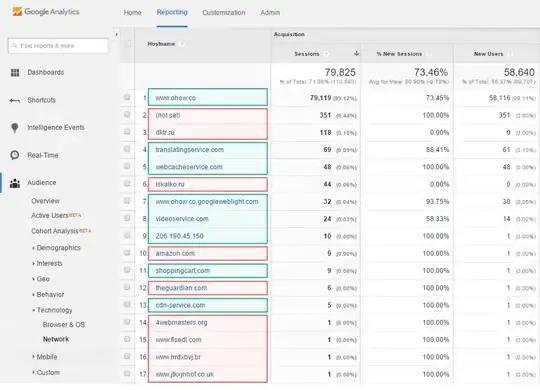

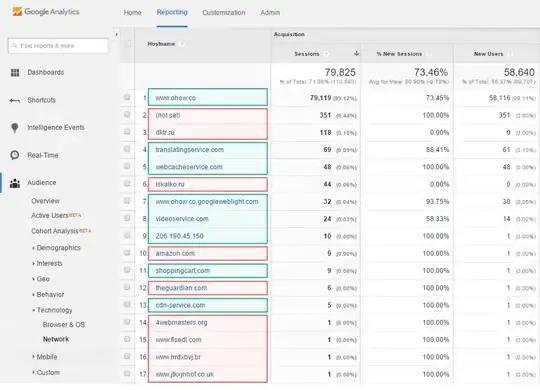

This type of spam is easy to spot because it uses either a fake hostname or no hostname at all, shown as (not set). (See the image below.)

The other type, crawlers like semalt, actually access your site and can be blocked from the .htaccess file. However, there are only a few of them.

So in summary, to stop spam in Google Analytics:

- Crawlers: server-side solutions or filters in GA

- Ghosts: ONLY filters in GA

The only efficient way to avoid being hit by ghost spam is to create an include filter with all your valid hostnames.

First, build a regular expression with all the valid hostnames (you can find them in the Network report), something like this:

yoursite\.com|shoppingcart\.com|translateservice\.net

These are just examples; you might have more or fewer hostnames. Once you have the expression, create the filter as follows:

- Go to the admin tab in Google Analytics

- Select Filters under the View column > New Filter

- Filter Type: Custom > Include > Filter Field: Hostname

- Filter Pattern: paste the hostname expression you built
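Before saving the include filter, it can help to check the hostname expression offline. The following is only a rough sketch in Python: the hostname list, the function names, and the test hostnames are made up for illustration, and it assumes the filter does a partial, case-insensitive match, which you should confirm against your own view.

```python
import re

# Hypothetical list: replace with the hostnames from your own hostname report.
VALID_HOSTNAMES = ["yoursite.com", "shoppingcart.com", "translateservice.net"]


def build_hostname_pattern(hostnames):
    """Escape each hostname and join them with '|' into one expression."""
    return "|".join(re.escape(name) for name in hostnames)


def would_be_included(pattern, hostname):
    """Return True if a hit with this hostname would pass the include filter.

    Assumption: partial, case-insensitive matching.
    """
    return re.search(pattern, hostname, re.IGNORECASE) is not None


pattern = build_hostname_pattern(VALID_HOSTNAMES)
print(pattern)

# Made-up sample hostnames: two legitimate ones, two typical of ghost spam.
for host in ["www.yoursite.com", "shoppingcart.com", "(not set)", "fake-host.xyz"]:
    print(host, "->", "kept" if would_be_included(pattern, host) else "dropped")
```

Escaping the dots matters: an unescaped `.` matches any character, so the expression could let through hostnames you did not intend.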

For crawlers, you will have to create a separate filter, building an expression with all the spammers:

spammer1|spammer2|spammer3|spammer4|spammer5

- Filter Type: Custom > Exclude > Filter Field: Campaign Source

- Filter Pattern: paste the referral expression
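The crawler expression can be checked the same way before you apply it. This is a minimal sketch, not a definitive list: `semalt` comes from the example above, the other names are placeholders, and partial, case-insensitive matching is again an assumption.

```python
import re

# 'semalt' is mentioned above; the rest are placeholders for the crawler
# sources you actually see in your referral report.
CRAWLER_SOURCES = ["semalt", "spammer2", "spammer3"]

# Escape each name so characters like '.' or '-' are matched literally.
crawler_pattern = "|".join(re.escape(source) for source in CRAWLER_SOURCES)


def would_be_excluded(source):
    """Return True if a hit with this campaign source would be filtered out.

    Assumption: partial, case-insensitive matching.
    """
    return re.search(crawler_pattern, source, re.IGNORECASE) is not None


# Made-up sample sources to sanity-check the expression.
for source in ["semalt.semalt.com", "google", "newsletter"]:
    print(source, "->", "excluded" if would_be_excluded(source) else "kept")
```

Because the match is partial, keep each entry specific enough that it cannot appear inside a legitimate source name.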

Every time you work with filters, it is important to keep an unfiltered view.

If you need detailed steps for these solutions, you can check this complete guide about spam in Google Analytics.

Guide to stop and remove All the spam in Google Analytics

Hope it helps.

Hostname report Example