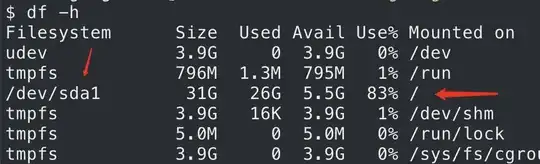

An input split is a logical chunk of a file stored on HDFS. By default, an input split corresponds to one block of the file, and the blocks of a file may be stored on different data nodes across the cluster.
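As a rough illustration of how a file maps to splits, here is a minimal Python sketch. It assumes the split size equals a 128 MB block size (the default in Hadoop 2 and later) and ignores the extra tuning the real input format applies; `compute_splits` is a hypothetical helper, not a Hadoop API.

```python
def compute_splits(file_size, split_size=128 * 1024 * 1024):
    """Return (offset, length) pairs that cover a file of file_size bytes.

    Simplified model: one split per block-sized chunk, with a shorter
    final split for whatever is left over.
    """
    splits = []
    offset = 0
    while offset < file_size:
        length = min(split_size, file_size - offset)
        splits.append((offset, length))
        offset += length
    return splits


MB = 1024 * 1024
# A hypothetical 300 MB file: two full 128 MB splits plus one 44 MB split.
splits = compute_splits(300 * MB)
```

Each split would then be handed to one Map task, so this hypothetical file would be processed by three mappers.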

A container is a unit of resources (such as memory and CPU) that the Resource Manager allocates on a data node in order to execute a Map or Reduce task.

First, the Map tasks are executed in containers. The Resource Manager allocates each of these containers as near as possible to the input split's location, following the rack-awareness policy (node-local first, then rack-local, then anywhere else in the data center).
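The locality preference can be sketched as a simple ranking. This is only an illustration of the idea, not how the YARN scheduler is implemented; the node names, the `rack_of` mapping, and both functions are hypothetical.

```python
def locality_level(node, split_hosts, rack_of):
    """Rank a candidate node: 0 = node-local, 1 = rack-local, 2 = off-rack."""
    if node in split_hosts:
        return 0
    if rack_of[node] in {rack_of[host] for host in split_hosts}:
        return 1
    return 2


def pick_node(free_nodes, split_hosts, rack_of):
    """Choose the free node closest to the data holding the input split."""
    return min(free_nodes, key=lambda n: locality_level(n, split_hosts, rack_of))


# Hypothetical cluster: two racks with two nodes each.
rack_of = {"n1": "r1", "n2": "r1", "n3": "r2", "n4": "r2"}
split_hosts = ["n1"]  # the block backing this split lives on n1

# n1 is busy, so the rack-local n2 is preferred over the off-rack n3.
chosen = pick_node(["n3", "n2"], split_hosts, rack_of)
```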

The Reduce tasks are executed by containers on any data nodes, and each reducer copies its relevant data from every mapper through the Shuffle/Sort process.

The mappers prepare their results so that the output is internally partitioned and, within each partition, the records are sorted by key. The partitioner determines which reducer should fetch each partition.
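A minimal Python sketch of the map-side partitioning and sorting follows. Hadoop's default `HashPartitioner` computes `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`; here `zlib.crc32` stands in for Java's `hashCode()` as an assumption, so the actual partition numbers will differ from a real cluster. The function names are hypothetical.

```python
import zlib
from collections import defaultdict


def partition_for(key, num_reducers):
    """Map a key to a reducer index with a stable hash.

    crc32 is a stand-in for Java's hashCode(); the masking and modulo
    mirror the shape of Hadoop's default hash partitioning.
    """
    return (zlib.crc32(key.encode("utf-8")) & 0x7FFFFFFF) % num_reducers


def map_side_output(records, num_reducers):
    """Group (key, value) records by partition and sort each partition by key."""
    partitions = defaultdict(list)
    for key, value in records:
        partitions[partition_for(key, num_reducers)].append((key, value))
    return {p: sorted(recs, key=lambda kv: kv[0]) for p, recs in partitions.items()}


# Hypothetical mapper output for a word count with two reducers.
records = [("banana", 1), ("apple", 1), ("banana", 1), ("cherry", 1)]
output = map_side_output(records, num_reducers=2)
```

Because the partition depends only on the key, every record for a given key ends up at the same reducer, whichever mapper produced it.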

During Shuffle and Sort, each reducer copies its relevant partitions from every mapper's output over HTTP. Each reducer then merges and sorts the copied partitions into a single sorted file before the reduce() method is invoked.
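The reduce-side merge can be sketched in Python as a k-way merge of already sorted runs, followed by grouping by key. This is a simplified in-memory model under the assumption that each copied partition arrives sorted; `merge_and_group` and the sample data are hypothetical.

```python
import heapq
from itertools import groupby
from operator import itemgetter


def merge_and_group(sorted_runs):
    """Merge sorted (key, value) runs and yield (key, [values]) per key.

    Each run models one partition copied from one mapper. The merged
    stream is what a reducer would iterate over, one key at a time.
    """
    merged = heapq.merge(*sorted_runs, key=itemgetter(0))
    for key, group in groupby(merged, key=itemgetter(0)):
        yield key, [value for _, value in group]


# Two hypothetical partitions fetched from two different mappers.
runs = [
    [("apple", 1), ("cherry", 1)],
    [("apple", 1), ("banana", 1)],
]

# A word-count style reduce step: sum the values for each key.
totals = {key: sum(values) for key, values in merge_and_group(runs)}
```

Since the inputs are already sorted, the merge only needs to compare the head of each run, which is why the map-side sort makes the reduce-side step cheap.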

The image below may clarify this further.

[Image source: http://www.ibm.com/developerworks/cloud/library/cl-openstack-deployhadoop/]