I'm using OpenCV (cv2) and the Pillow imaging library in Python to take an image of an arbitrary phone and replace its screen with a new image. I can already identify the phone's screen and get the coordinates of its four corners, but I'm having a really hard time replacing that area of the image with a new image.

The code I have so far:

    import cv2
    import numpy
    from PIL import Image

    image = cv2.imread('mockup.png')
    edged_image = cv2.Canny(image, 30, 200)

    # OpenCV 2.x and 4.x return (contours, hierarchy); 3.x returns three values
    (contours, _) = cv2.findContours(edged_image.copy(), cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
    contours = sorted(contours, key = cv2.contourArea, reverse = True)[:10]
    screenCnt = None

    for contour in contours:
        peri = cv2.arcLength(contour, True)
        approx = cv2.approxPolyDP(contour, 0.02 * peri, True)

        # if our approximated contour has four points, then
        # we can assume that we have found our screen
        if len(approx) == 4:
            screenCnt = approx
            break

    cv2.drawContours(image, [screenCnt], -1, (0, 255, 0), 3)
    cv2.imshow("Screen Location", image)
    cv2.waitKey(0)

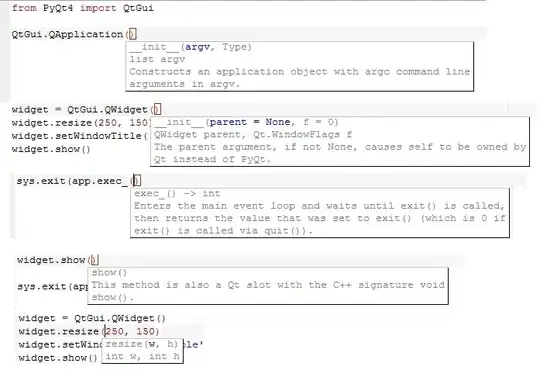

This will give me an image that looks like this:

I can also get the coordinates of the screen corners using this line of code:

    screenCoords = [x[0].tolist() for x in screenCnt]
    # [[398, 139], [245, 258], [474, 487], [628, 358]]
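The corners come back in whatever order the contour was traced, and the four points above appear to run counter-clockwise (top, left, bottom, right). Any later step that pairs them with the corners of another image needs a known order, so here is a minimal sketch that sorts them clockwise around their centroid. The helper name `order_corners_clockwise` is hypothetical, not part of OpenCV or Pillow.

```python
import math

def order_corners_clockwise(points):
    """Sort 2D points by their angle around the centroid.

    In image coordinates y grows downward, so increasing atan2 angle
    corresponds to clockwise order as seen on screen.
    """
    cx = sum(p[0] for p in points) / len(points)
    cy = sum(p[1] for p in points) / len(points)
    return sorted(points, key=lambda p: math.atan2(p[1] - cy, p[0] - cx))

screen_coords = [[398, 139], [245, 258], [474, 487], [628, 358]]
ordered = order_corners_clockwise(screen_coords)
print(ordered)
# [[245, 258], [398, 139], [628, 358], [474, 487]]
```

Which of these corners should line up with the new image's top-left depends on how the phone is rotated in the photo; rotating the list (for example `ordered[1:] + ordered[:1]`) picks a different starting corner without changing the direction.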

However, I can't for the life of me figure out how to take a new image, scale it into the shape of the coordinate space I've found, and overlay it on top.

My guess is that I can do this with an image transform in Pillow, using this function that I adapted from a Stack Overflow question:

    def find_transform_coefficients(pa, pb):
        """Return the coefficients required for a transform from pa to pb.

        args:
            pa -> sequence of 4 (x, y) points for the start coordinates
            pb -> sequence of 4 (x, y) points for the end coordinates
        """
        matrix = []
        for p1, p2 in zip(pa, pb):
            matrix.append([p1[0], p1[1], 1, 0, 0, 0, -p2[0]*p1[0], -p2[0]*p1[1]])
            matrix.append([0, 0, 0, p1[0], p1[1], 1, -p2[1]*p1[0], -p2[1]*p1[1]])

        # numpy.float was removed in NumPy 1.24; the builtin float is equivalent
        A = numpy.matrix(matrix, dtype=float)
        B = numpy.array(pb).reshape(8)

        # least-squares solution of A * coefficients = B
        res = numpy.dot(numpy.linalg.inv(A.T * A) * A.T, B)
        return numpy.array(res).reshape(8)

However, I'm a bit in over my head, and I can't get the details right. Could someone give me some help?
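One detail that is easy to get backwards: with Pillow's perspective mode, `Image.transform` takes eight coefficients (a, b, c, d, e, f, g, h) and, for each pixel (x, y) of the output, samples the input at ((ax + by + c) / (gx + hy + 1), (dx + ey + f) / (gx + hy + 1)). That suggests `pa` should hold the screen corners in the output and `pb` the corners of the new image. The sketch below checks this numerically with NumPy alone. `perspective_coefficients` and `apply_coefficients` are hypothetical names, the first solves the same equations as the function above with `numpy.linalg.solve`, and the 300x600 overlay size is an assumption for illustration.

```python
import numpy as np

def perspective_coefficients(pa, pb):
    """Solve for the 8 coefficients that map each point in pa onto pb."""
    rows = []
    for (x, y), (u, v) in zip(pa, pb):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
    A = np.array(rows, dtype=float)
    b = np.array(pb, dtype=float).reshape(8)
    # Four point pairs give an 8x8 system, so it can be solved directly
    return np.linalg.solve(A, b)

def apply_coefficients(coeffs, point):
    """Map one (x, y) point using the 8 perspective coefficients."""
    a, b, c, d, e, f, g, h = coeffs
    x, y = point
    w = g * x + h * y + 1.0
    return ((a * x + b * y + c) / w, (d * x + e * y + f) / w)

# Screen corners in the mockup (output side), listed clockwise
screen_corners = [(245, 258), (398, 139), (628, 358), (474, 487)]
# Corners of an assumed 300x600 overlay image (input side), also clockwise
overlay_corners = [(0, 0), (300, 0), (300, 600), (0, 600)]

coeffs = perspective_coefficients(screen_corners, overlay_corners)
mapped = [apply_coefficients(coeffs, p) for p in screen_corners]
# Each screen corner should land on the matching overlay corner
print(np.allclose(mapped, overlay_corners, atol=1e-3))
```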

EDIT

OK, now that I'm using the getPerspectiveTransform and warpPerspective functions, I've got the following additional code:

    screenCoords = numpy.asarray(
        [numpy.asarray(x[0], dtype=numpy.float32) for x in screenCnt],
        dtype=numpy.float32
    )

    overlay_image = cv2.imread('123.png')
    overlay_height, overlay_width = image.shape[:2]

    # background_width and background_height were not defined in this snippet;
    # assumed here to be the size of the mockup image
    background_height, background_width = image.shape[:2]

    input_coordinates = numpy.asarray(
        [
            numpy.asarray([0, 0], dtype=numpy.float32),
            numpy.asarray([overlay_width, 0], dtype=numpy.float32),
            numpy.asarray([overlay_width, overlay_height], dtype=numpy.float32),
            numpy.asarray([0, overlay_height], dtype=numpy.float32)
        ],
        dtype=numpy.float32,
    )

    transformation_matrix = cv2.getPerspectiveTransform(
        numpy.asarray(input_coordinates),
        numpy.asarray(screenCoords),
    )

    warped_image = cv2.warpPerspective(
        overlay_image,
        transformation_matrix,
        (background_width, background_height),
    )

    cv2.imshow("Overlay image", warped_image)
    cv2.waitKey(0)

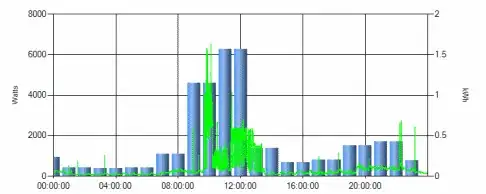

The image is getting rotated and skewed properly (I think), but its not the same size as the screen. Its "shorter":

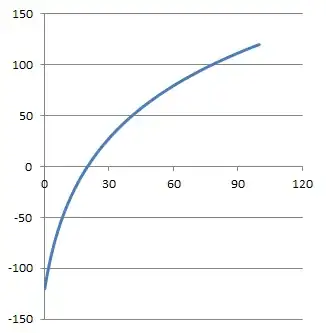

and if I use a different image that is very tall vertically I end up with something that is too "long":

Do I need to apply an additional transformation to scale the image? Not sure whats going on here, I thought the perspective transform would make the image automatically scale out to the provided coordinates.
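One possibility worth ruling out before adding another transform: the source rectangle passed to getPerspectiveTransform should describe the corners of the overlay image itself, and in the code above `overlay_height, overlay_width` are read from `image.shape` rather than `overlay_image.shape`. The sketch below, using NumPy only and synthetic arrays, shows the source corners being taken from the overlay and a mask-based paste for the final step. The helper names `overlay_source_corners` and `paste_warped` are hypothetical.

```python
import numpy as np

def overlay_source_corners(overlay):
    """Corners of the overlay itself: top-left, top-right, bottom-right, bottom-left."""
    height, width = overlay.shape[:2]
    return np.array(
        [[0, 0], [width, 0], [width, height], [0, height]],
        dtype=np.float32,
    )

def paste_warped(background, warped, mask):
    """Copy pixels from warped into a copy of background wherever mask is non-zero."""
    result = background.copy()
    selected = mask.astype(bool)
    result[selected] = warped[selected]
    return result

# Tiny synthetic stand-ins for the mockup, the warped overlay and the screen mask
background = np.zeros((4, 4, 3), dtype=np.uint8)
warped = np.full((4, 4, 3), 255, dtype=np.uint8)
mask = np.zeros((4, 4), dtype=np.uint8)
mask[1:3, 1:3] = 255  # pretend the screen covers the central 2x2 pixels

result = paste_warped(background, warped, mask)
corners = overlay_source_corners(np.zeros((600, 300, 3), dtype=np.uint8))
```

With real images, the mask could be built by filling the detected screen polygon on a blank single-channel array the size of the mockup, for example with `cv2.fillConvexPoly`, and `warped` would be the output of `cv2.warpPerspective`.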