I am trying to detect all the square-shaped dice in an image so that I can crop them individually and run OCR on each one. Below is the original image:

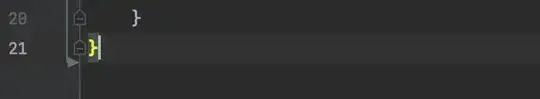

Here is the code I have so far, but it misses some of the squares:

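    import cv2
    import numpy as np

    def angle_cos(p0, p1, p2):
        # Helper as defined in OpenCV's samples/python/squares.py:
        # absolute cosine of the angle at vertex p1.
        d1, d2 = (p0 - p1).astype('float'), (p2 - p1).astype('float')
        return abs(np.dot(d1, d2) / np.sqrt(np.dot(d1, d1) * np.dot(d2, d2)))

    def find_squares(img):
        img = cv2.GaussianBlur(img, (5, 5), 0)
        squares = []
        for gray in cv2.split(img):
            for thrs in range(0, 255, 26):
                if thrs == 0:
                    # First pass: Canny edges, dilated to close small gaps
                    binary = cv2.Canny(gray, 0, 50, apertureSize=5)
                    binary = cv2.dilate(binary, None)
                else:
                    _retval, binary = cv2.threshold(gray, thrs, 255, cv2.THRESH_BINARY)
                # [-2:] handles both the 3-value (OpenCV 3) and 2-value (OpenCV 4) return
                contours, _hierarchy = cv2.findContours(binary, cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)[-2:]
                for cnt in contours:
                    cnt_len = cv2.arcLength(cnt, True)
                    cnt = cv2.approxPolyDP(cnt, 0.02 * cnt_len, True)
                    # Keep large, convex quadrilaterals only
                    if len(cnt) == 4 and cv2.contourArea(cnt) > 1000 and cv2.isContourConvex(cnt):
                        cnt = cnt.reshape(-1, 2)
                        max_cos = np.max([angle_cos(cnt[i], cnt[(i + 1) % 4], cnt[(i + 2) % 4]) for i in range(4)])
                        a = cnt[1][1] - cnt[0][1]
                        # Near-right angles, and not a contour spanning most of the image height
                        if max_cos < 0.1 and a < img.shape[0] * 0.8:
                            squares.append(cnt)
        return squares

    dice = cv2.imread('img1.png')
    squares = find_squares(dice)
    cv2.drawContours(dice, squares, -1, (0, 255, 0), 3)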

As far as I can tell, some squares are missed because Canny finds no edge along parts of the die outline, where the intensity transition between the die and the background is too smooth.

Given the constraint that there will always be 25 dice in a square grid (5×5), can the positions of the missing squares be predicted from the recognised ones? Or can the square detection algorithm above be modified to find them?
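
To make the grid-based idea concrete, here is a rough sketch of the kind of prediction I have in mind. It is not a tested solution: the function name `predict_missing_cells` and its parameters are hypothetical, and it assumes the grid is axis-aligned and evenly spaced, and that at least one square was detected in each of the outermost rows and columns (otherwise the estimated extent is wrong). It takes the output of `find_squares` (duplicates are fine) and returns a bounding box for every grid cell that has no detection.

```python
from statistics import median

def predict_missing_cells(squares, rows=5, cols=5):
    """Return (row, col, x, y, side) for each grid cell with no detected square.

    Assumption: axis-aligned, evenly spaced grid with at least one detection
    in every outermost row and column.
    """
    # Bounding-box centre and side length of every detected quadrilateral.
    centers, sides = [], []
    for sq in squares:
        xs = [float(p[0]) for p in sq]
        ys = [float(p[1]) for p in sq]
        centers.append(((min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2))
        sides.append(((max(xs) - min(xs)) + (max(ys) - min(ys))) / 2)

    side = median(sides)
    x0 = min(c[0] for c in centers)
    y0 = min(c[1] for c in centers)
    pitch_x = (max(c[0] for c in centers) - x0) / (cols - 1)
    pitch_y = (max(c[1] for c in centers) - y0) / (rows - 1)
    if pitch_x == 0 or pitch_y == 0:
        raise ValueError("detections do not span the grid in both directions")

    # Snap each detection to its nearest grid cell.
    found = set()
    for cx, cy in centers:
        found.add((round((cy - y0) / pitch_y), round((cx - x0) / pitch_x)))

    # Every cell that received no detection gets a predicted box.
    missing = []
    for r in range(rows):
        for c in range(cols):
            if (r, c) not in found:
                cx, cy = x0 + c * pitch_x, y0 + r * pitch_y
                missing.append((r, c, int(cx - side / 2), int(cy - side / 2), int(side)))
    return missing
```

Each returned box could then be cropped with something like `dice[y:y + side, x:x + side]`. The sketch ignores rotation and perspective distortion, so it would only be a starting point if the board is photographed at an angle.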