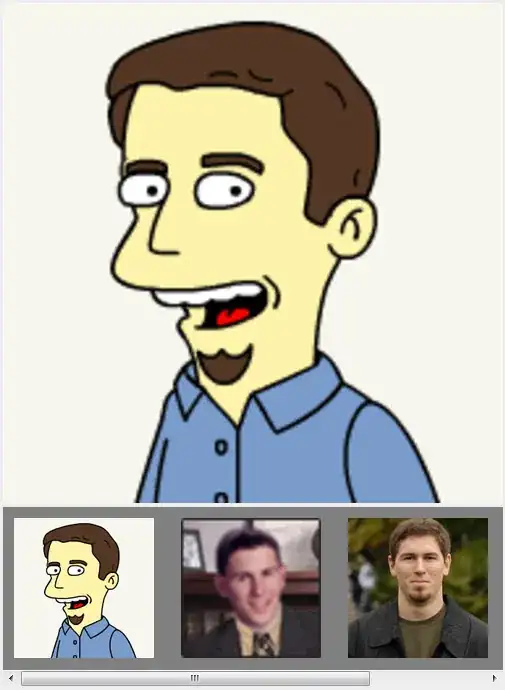

I have an MTKView set to use MTLPixelFormat.rgba16Float. I'm having display issues which can be best described with the following graphic:

The intended `UIColor` appears washed out, but only while it is displayed in the `MTKView`. When I convert the drawable texture back to an image via `CIImage` and display it in a `UIView`, I get the original color back. Here is how I create that output:

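```swift
import CoreImage
import Metal

let colorSpace = CGColorSpaceCreateDeviceRGB()

// Note: kCIContextOutputPremultiplied and kCIContextUseSoftwareRenderer are
// CIContext options, not CIImage options, so they likely have no effect here.
let kciOptions = [kCIImageColorSpace: colorSpace,
                  kCIContextOutputPremultiplied: true,
                  kCIContextUseSoftwareRenderer: false] as [String : Any]

// Wrap the composited Metal texture in a CIImage and flip it vertically
// to account for the differing origin conventions of Metal and Core Image.
let strokeCIImage = CIImage(mtlTexture: metalTextureComposite, options: kciOptions)!
    .oriented(CGImagePropertyOrientation.downMirrored)

// Render the bounding box into an 8-bit CGImage for display.
let imageCropCG = cicontext.createCGImage(strokeCIImage, from: bbox, format: kCIFormatABGR8, colorSpace: colorSpace)
```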

Other pertinent settings:

```swift
// Brush color used to tint the texture
let uiColorBrushDefault: UIColor = UIColor(red: 0.92, green: 0.79, blue: 0.18, alpha: 1.0)

// MTKView setup: half-float drawable, matched by the render pipeline
self.colorPixelFormat = MTLPixelFormat.rgba16Float
renderPipelineDescriptor.colorAttachments[0].pixelFormat = self.colorPixelFormat

// below is the colorspace for the texture which is tinted with UIColor
let colorSpace = CGColorSpaceCreateDeviceRGB()

// rgba8Unorm (not rgba8Unorm_srgb): sampling returns the stored values as-is,
// with no sRGB-to-linear conversion
let texDescriptor = MTLTextureDescriptor.texture2DDescriptor(pixelFormat: MTLPixelFormat.rgba8Unorm, width: Int(width), height: Int(height), mipmapped: isMipmaped)
target = texDescriptor.textureType
texture = device.makeTexture(descriptor: texDescriptor)
```

Some posts hint that sRGB is being assumed somewhere, but give no specifics on how to disable it.
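One reading of that sRGB hint, offered only as an unverified hypothesis: if the `rgba16Float` drawable is interpreted as linear-light values while the brush color's components (0.92, 0.79, 0.18) are sRGB gamma-encoded, the display effectively applies the sRGB encoding a second time, which lifts the darker channels and washes the color out. The sketch below uses the standard sRGB transfer functions (IEC 61966-2-1) in plain Swift to show both the linear values that such a target would need and what a double-encoded color would look like. The names `srgbToLinear`, `linearToSRGB`, `brush`, `linear` and `doubleEncoded` are hypothetical helpers for illustration, not Apple APIs.

```swift
import Foundation

// Standard sRGB decoding (IEC 61966-2-1): gamma-encoded component in 0...1
// to linear light.
func srgbToLinear(_ c: Double) -> Double {
    return c <= 0.04045 ? c / 12.92 : pow((c + 0.055) / 1.055, 2.4)
}

// Standard sRGB encoding: linear-light component in 0...1 to gamma-encoded.
func linearToSRGB(_ c: Double) -> Double {
    return c <= 0.0031308 ? c * 12.92 : 1.055 * pow(c, 1.0 / 2.4) - 0.055
}

// The brush color from the question, treated as sRGB-encoded components.
let brush = (r: 0.92, g: 0.79, b: 0.18)

// Hypothetical fix: values to write if the target is interpreted as linear.
let linear = (r: srgbToLinear(brush.r),
              g: srgbToLinear(brush.g),
              b: srgbToLinear(brush.b))

// Hypothetical symptom: what is perceived if the encoded values are written
// unchanged into a linear target, i.e. each channel is encoded a second time.
let doubleEncoded = (r: linearToSRGB(brush.r),
                     g: linearToSRGB(brush.g),
                     b: linearToSRGB(brush.b))

print(String(format: "linear:         %.3f %.3f %.3f", linear.r, linear.g, linear.b))
print(String(format: "double-encoded: %.3f %.3f %.3f", doubleEncoded.r, doubleEncoded.g, doubleEncoded.b))
```

Under this assumption the blue channel of 0.18 would be perceived as roughly 0.46, a visibly washed-out yellow, while writing the linearized values (about 0.828, 0.587, 0.027), or doing the equivalent conversion in the shader, would be one way to test whether the hypothesis holds.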

I'd like the color displayed in the `MTKView` to match the input (as closely as possible, anyway) while still being able to convert that texture into something I can display in an image view. I've tested this on an iPad Air and a new iPad Pro, and both show the same behavior. Any help would be appreciated.