I have code that computes the orientation of a figure and then rotates the figure by that angle so it ends up upright. This all works fine. What I am struggling with is moving the rotated figure to the center of the image, so that the center point of the figure coincides with the center point of the whole image.

Code:

    import cv2
    import numpy as np
    import matplotlib.pyplot as plt

    path = "inputImage.png"
    image = cv2.imread(path)
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)[1]

    contours, hierarchy = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)
    cnt1 = contours[0]
    cnt = cv2.convexHull(contours[0])
    angle = cv2.minAreaRect(cnt)[-1]
    print("Actual angle is:" + str(angle))

    rect = cv2.minAreaRect(cnt)
    p = np.array(rect[1])
    if p[0] < p[1]:
        print("Angle along the longer side:" + str(rect[-1] + 180))
        act_angle = rect[-1] + 180
    else:
        print("Angle along the longer side:" + str(rect[-1] + 90))
        act_angle = rect[-1] + 90

    # act_angle gives the angle of the minAreaRect with the vertical
    if act_angle < 90:
        angle = (90 + angle)
        print("angle less than -45")
    else:
        # otherwise, just take the inverse of the angle to make
        # it positive
        angle = act_angle - 180
        print("greater than 90")

    # rotate the image to deskew it
    (h, w) = image.shape[:2]
    print(h, w)
    center = (w // 2, h // 2)
    print(center)
    M = cv2.getRotationMatrix2D(center, angle, 1.0)
    rotated = cv2.warpAffine(image, M, (w, h), flags=cv2.INTER_CUBIC, borderMode=cv2.BORDER_REPLICATE)

    plt.imshow(rotated)
    cv2.imwrite("rotated.png", rotated)

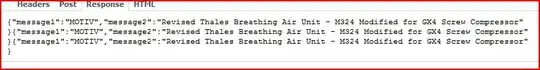

With output:

As you can see, the white figure sits slightly to the left of center. I want it to be perfectly centered. Does anyone know how this can be done?

EDIT: I have tried @joe's suggestion: I computed the centroid of the figure and subtracted its coordinates from the center of the image (the width and height divided by 2). This gives an offset that has to be applied to the array that holds the image, but I don't know how to apply it. How do I shift the array by the x and y offsets?

The code:

    import cv2

    img = cv2.imread("inputImage.png")
    gray_image = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    ret, thresh = cv2.threshold(gray_image, 127, 255, 0)

    # center of the image
    height, width = gray_image.shape
    print(img.shape)
    wi = (width / 2)
    he = (height / 2)
    print(wi, he)

    # centroid of the figure from the image moments
    M = cv2.moments(thresh)
    cX = int(M["m10"] / M["m00"])
    cY = int(M["m01"] / M["m00"])

    # offset from the centroid to the image center
    offsetX = (wi - cX)
    offsetY = (he - cY)
    print(offsetX, offsetY)
    print(cX, cY)