I want to find the distance of samples to the decision boundary of a trained decision tree classifier in scikit-learn. The features are all numeric, and the feature space can have any number of dimensions.

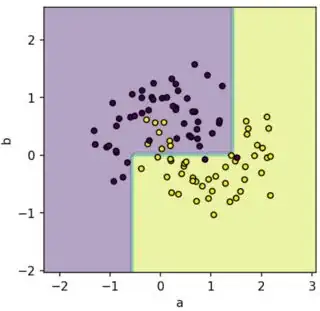

I have this visualization so far for an example 2D case based on here:

    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn.tree import DecisionTreeClassifier
    from sklearn.datasets import make_moons

    # Generate some example data
    X, y = make_moons(noise=0.3, random_state=0)

    # Train the classifier
    clf = DecisionTreeClassifier(max_depth=2)
    clf.fit(X, y)

    # Plot
    x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1
    y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1
    xx, yy = np.meshgrid(np.arange(x_min, x_max, 0.1), np.arange(y_min, y_max, 0.1))
    Z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)
    plt.contourf(xx, yy, Z, alpha=0.4)
    plt.scatter(X[:, 0], X[:, 1], c=y, s=20, edgecolor='k')
    plt.xlabel('a')
    plt.ylabel('b')

I understand that for some other classifiers, such as SVMs, this distance can be calculated mathematically [1, 2, 3]. The rules learned when training a decision tree define its boundaries, so they may also help to calculate the distances algorithmically [4, 5, 6]:

    # Plot the trained tree
    from sklearn import tree
    import graphviz

    dot_data = tree.export_graphviz(clf, feature_names=['a', 'b'], class_names=['1', '2'], filled=True)
    graph = graphviz.Source(dot_data)
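To make the idea of using the learned rules concrete, here is a minimal sketch of one possible approach, not a definitive implementation. It assumes Euclidean distance and a single-output classifier. Each leaf of the tree corresponds to an axis-aligned box in feature space, so the distance from a sample to the decision boundary can be taken as the distance to the nearest leaf box that predicts a different class. The helper names `leaf_boxes` and `distance_to_boundary` are hypothetical and not part of scikit-learn; the sketch only reads the fitted `clf.tree_` arrays (`children_left`, `children_right`, `feature`, `threshold`, `value`).

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.tree import DecisionTreeClassifier


def leaf_boxes(clf, n_features):
    """Hypothetical helper: (lower, upper, predicted class) for every leaf."""
    t = clf.tree_
    boxes = []
    # Each stack entry holds a node id and the box bounds accumulated so far.
    stack = [(0, np.full(n_features, -np.inf), np.full(n_features, np.inf))]
    while stack:
        node, lo, hi = stack.pop()
        if t.children_left[node] == -1:  # scikit-learn marks leaves with -1
            label = clf.classes_[np.argmax(t.value[node][0])]
            boxes.append((lo, hi, label))
            continue
        f, thr = t.feature[node], t.threshold[node]
        left_hi = hi.copy()
        left_hi[f] = min(hi[f], thr)    # left child: x[f] <= thr
        right_lo = lo.copy()
        right_lo[f] = max(lo[f], thr)   # right child: x[f] > thr
        stack.append((t.children_left[node], lo, left_hi))
        stack.append((t.children_right[node], right_lo, hi))
    return boxes


def distance_to_boundary(clf, X):
    """Hypothetical helper: Euclidean distance from each row of X to the
    nearest leaf box whose predicted class differs from the row's prediction."""
    X = np.asarray(X, dtype=float)
    pred = clf.predict(X)
    dist = np.full(len(X), np.inf)  # stays inf if every leaf predicts the same class
    for lo, hi, label in leaf_boxes(clf, X.shape[1]):
        # Per-feature gap to the box; zero where the coordinate lies inside it.
        gap = np.maximum(np.maximum(lo - X, X - hi), 0.0)
        d = np.linalg.norm(gap, axis=1)
        other = pred != label
        dist[other] = np.minimum(dist[other], d[other])
    return dist


X, y = make_moons(noise=0.3, random_state=0)
clf = DecisionTreeClassifier(max_depth=2, random_state=0).fit(X, y)
dist = distance_to_boundary(clf, X)
print(dist[:5])
```

The cost is one pass over the leaves per call, so it grows with the number of leaves times the number of samples. Features on very different scales would probably need to be standardized first for a Euclidean distance to be meaningful.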